APIPark


Introduction

Open-source portal for managing AI and API services.

APIPark Product Information

APIPark – Open Source Enterprise API Developer Portal

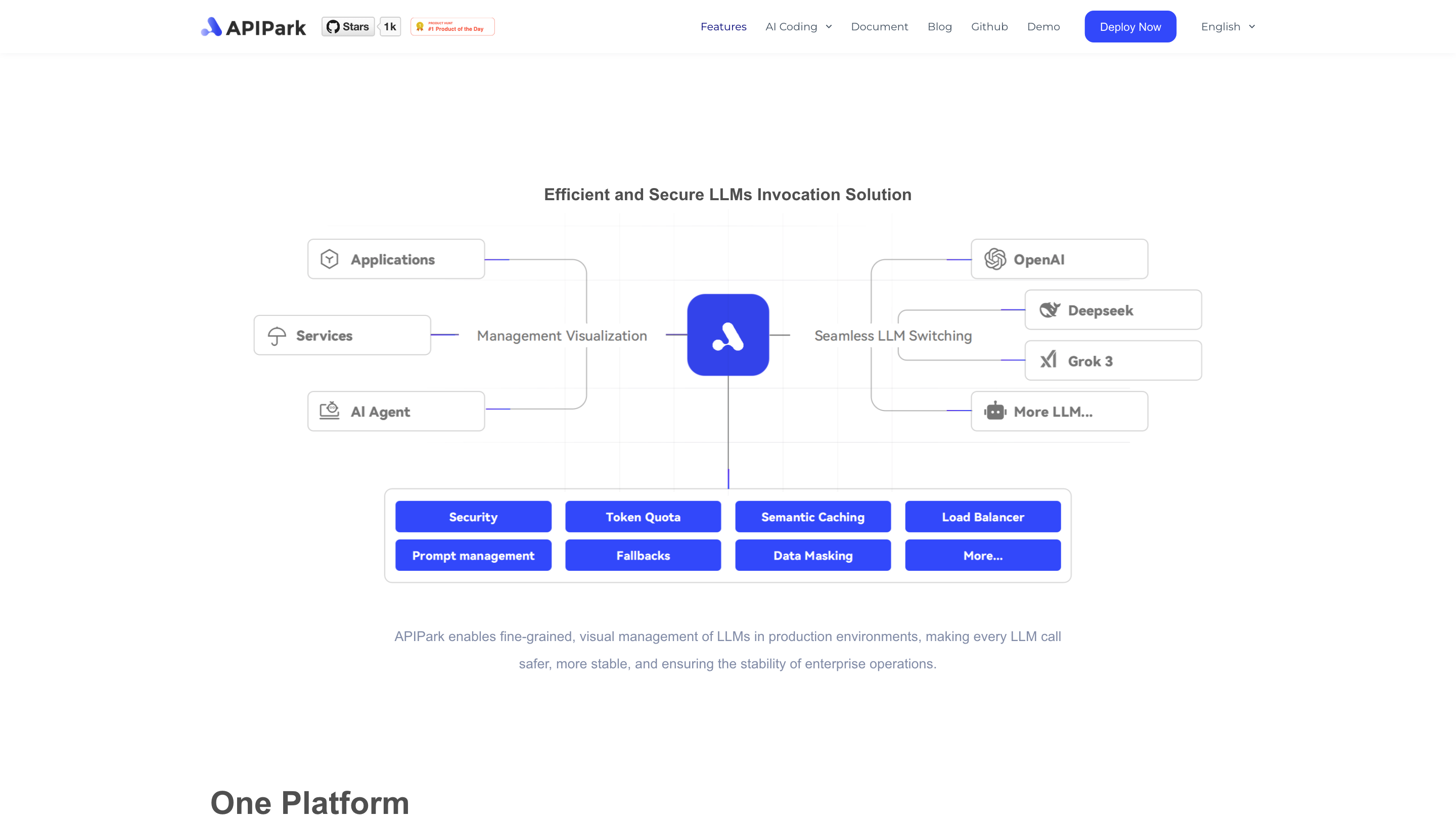

APIPark is an open-source, all-in-one LLM gateway and API developer portal designed to help enterprises manage and orchestrate large language models (LLMs) safely, efficiently, and at scale. It provides visual, fine-grained control over LLM calls, load balancing across multiple models, traffic quotas, caching, flexible prompts, data masking, and a developer-friendly portal for API sharing and monetization. The platform emphasizes stability, security, and ease of integration with existing tech stacks.

Key Capabilities

- Unified API signature for multi-LLM connections without changing existing code

- Load balancing across multiple LLM instances for reliability and performance

- Fine-grained traffic control to manage quotas and prioritize specific LLMs

- Real-time monitoring dashboards for LLM usage and performance

- Semantic caching to reduce latency and LLM usage

- Flexible prompt management with reusable templates

- Turn prompts and AI models into reusable APIs that can be shared quickly

- Data masking and privacy safeguards to protect sensitive information

- AI agents and extensible plug-in ecosystem for expanded use cases

- Built-in API portal features for internal/external API sharing and collaboration

- Billing and access control to support enterprise governance

- High-velocity deployment: deploy your LLM gateway and developer portal in minutes
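
To make the load-balancing capability above concrete, here is a minimal sketch of round-robin routing with failover across several LLM backends. It is not APIPark's implementation: the `RoundRobinBalancer` class and the idea of a backend as a plain Python callable are assumptions made purely for illustration.

```python
from typing import Callable, List, Tuple

# Hypothetical backend type: a name plus a function that sends a prompt
# to one LLM instance and returns its reply.
Backend = Tuple[str, Callable[[str], str]]


class RoundRobinBalancer:
    """Rotate across backends and skip any that raise an error."""

    def __init__(self, backends: List[Backend]) -> None:
        if not backends:
            raise ValueError("at least one backend is required")
        self._backends = backends
        self._next = 0  # index of the backend to try first on the next call

    def call(self, prompt: str) -> Tuple[str, str]:
        """Return (backend_name, reply) from the first backend that succeeds."""
        count = len(self._backends)
        errors = []
        for offset in range(count):
            index = (self._next + offset) % count
            name, send = self._backends[index]
            try:
                reply = send(prompt)
            except Exception as exc:  # treat any failure as "backend unavailable"
                errors.append(f"{name}: {exc}")
                continue
            # Advance the pointer so the next call starts after this backend.
            self._next = (index + 1) % count
            return name, reply
        raise RuntimeError("all backends failed: " + "; ".join(errors))
```

A production gateway would typically add health checks, weights, and timeouts; the sketch only shows the rotation and failover idea.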

How APIPark Works

- Connect multiple AI models via a unified API signature, enabling calls to different LLMs without code changes.

- Route and balance traffic across LLMs using the built-in load balancer, with support for quotas and prioritization.

- Apply semantic caching to accelerate frequent queries and reduce upstream LLM calls.

- Manage prompts with flexible templates, turning AI prompts into reusable APIs.

- Enforce security and privacy through data masking and access control.

- Monitor usage with dashboards and insights to optimize performance and cost.

- Expand capabilities with AI Agents and a growing catalog of integrated tools.
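
The semantic caching step above can be sketched roughly as follows. This is an illustrative toy, not APIPark's code: `embed` uses word counts as a stand-in for a real embedding model, and `SemanticCache`, `cached_call`, and the 0.8 threshold are all assumed names and values.

```python
import math
import re
from collections import Counter
from typing import Callable, List, Optional, Tuple


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (a real system would use a model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[token] * b[token] for token in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


class SemanticCache:
    """Return a stored answer when a new prompt is similar enough to an old one."""

    def __init__(self, threshold: float = 0.8) -> None:
        self.threshold = threshold
        self._entries: List[Tuple[Counter, str]] = []

    def lookup(self, prompt: str) -> Optional[str]:
        query = embed(prompt)
        best_score, best_answer = 0.0, None
        for vector, answer in self._entries:
            score = cosine(query, vector)
            if score > best_score:
                best_score, best_answer = score, answer
        return best_answer if best_score >= self.threshold else None

    def store(self, prompt: str, answer: str) -> None:
        self._entries.append((embed(prompt), answer))


def cached_call(cache: SemanticCache, prompt: str, llm: Callable[[str], str]) -> str:
    """Call the upstream LLM only when no similar prompt has been answered."""
    hit = cache.lookup(prompt)
    if hit is not None:
        return hit
    answer = llm(prompt)
    cache.store(prompt, answer)
    return answer
```

The linear scan is fine for a sketch; at scale, similarity search would normally go through a vector index.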

Core Features

- Unified API signature for connecting multiple LLMs without code changes

- Load balancing across more than 200 LLMs, with support for future models

- Fine-grained traffic control: quotas and prioritization for tenants and models

- Real-time dashboards for LLM traffic, performance, and health

- Semantic caching to boost response speed and reduce LLM usage

- Flexible prompt templates for managing and modifying prompts in production

- Turn prompts and AI models into APIs that are easy to share with developers

- Data masking to protect privacy and mitigate LLM attacks

- AI Agent ecosystem for expanding application scenarios

- Open API portal to securely publish internal/external APIs

- Built-in API billing and usage tracking for monetization

- End-to-end access control and security features

- Quick deployment: deploy LLM gateway & developer portal in 5 minutes
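
As an illustration of the per-tenant quota feature listed above, the following sketch enforces a fixed-window token budget per tenant. The `QuotaLimiter` class, its parameters, and the choice of a fixed window are assumptions for the example, not a description of how APIPark enforces quotas.

```python
import time
from typing import Callable, Dict, Tuple


class QuotaLimiter:
    """Fixed-window quota: each tenant may spend `limit` tokens per window."""

    def __init__(
        self,
        limits: Dict[str, int],
        window_seconds: float = 60.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._limits = limits
        self._window = window_seconds
        self._clock = clock  # injectable so the limiter can be tested without sleeping
        self._usage: Dict[str, Tuple[float, int]] = {}  # tenant -> (window start, tokens used)

    def allow(self, tenant: str, tokens: int) -> bool:
        """Record the spend and return True if it fits in the tenant's budget."""
        limit = self._limits.get(tenant, 0)  # unknown tenants get no budget
        now = self._clock()
        start, used = self._usage.get(tenant, (now, 0))
        if now - start >= self._window:
            start, used = now, 0  # window expired: start a fresh one
        if used + tokens > limit:
            self._usage[tenant] = (start, used)
            return False
        self._usage[tenant] = (start, used + tokens)
        return True
```

A gateway could call `allow` before forwarding a request and reject or queue the request when it returns `False`.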

Use Cases

- Enterprise LLM orchestration: manage multiple models across departments with controlled access

- Cost optimization: quotas, prioritization, and caching to lower LLM spend

- Rapid API prototyping: turn prompts into APIs and share with teams

- Secure AI deployments: data masking and policy-driven access control

- Developer experience: a single portal for API documentation, testing, and collaboration
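
The "turn prompts into APIs" use case can be pictured as wrapping a prompt template in a callable endpoint. The sketch below uses Python's standard `string.Template`; the `prompt_api` helper and the `llm` callable are hypothetical names introduced for illustration and do not reflect APIPark's actual interface.

```python
from string import Template
from typing import Callable


def prompt_api(template: str, llm: Callable[[str], str]) -> Callable[..., str]:
    """Wrap a prompt template and an LLM callable into a reusable function."""
    compiled = Template(template)

    def endpoint(**params: str) -> str:
        # substitute() raises KeyError if a placeholder is left unfilled,
        # so callers cannot send an incomplete prompt upstream.
        prompt = compiled.substitute(**params)
        return llm(prompt)

    return endpoint
```

For example, `prompt_api("Translate to $language: $text", llm)` yields a function that callers invoke with `language=` and `text=` arguments, without seeing or editing the underlying prompt.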

Getting Started

- Install or deploy APIPark in your environment

- Connect your preferred LLMs using the unified API signature

- Configure load balancing, quotas, and prompts

- Enable data masking and access controls

- Use the API portal to publish and monetize APIs

Safety and Compliance

- Data masking helps prevent leakage of sensitive information during LLM calls

- Access controls ensure compliant sharing of APIs and data

- Monitoring and logging support governance and auditability
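
To show what data masking before an LLM call might look like, here is a small regex-based sketch that redacts email addresses and card-like digit runs. The patterns and the `mask` function are illustrative assumptions; they are deliberately simple and are not APIPark's masking rules.

```python
import re

# Simplified patterns for illustration only; real deployments usually need
# broader, locale-aware detection.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
CARD = re.compile(r"\b\d(?:[ -]?\d){12,15}\b")  # 13 to 16 digits, optional separators


def mask(text: str) -> str:
    """Replace sensitive substrings with placeholders before sending upstream."""
    text = EMAIL.sub("[EMAIL]", text)
    text = CARD.sub("[CARD]", text)
    return text
```

Applying `mask` to the prompt on its way to the model keeps the raw values inside the gateway boundary while leaving the rest of the text intact.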