Arize AI

Introduction

Increase model velocity and improve AI outcomes

Arize AI Product Information

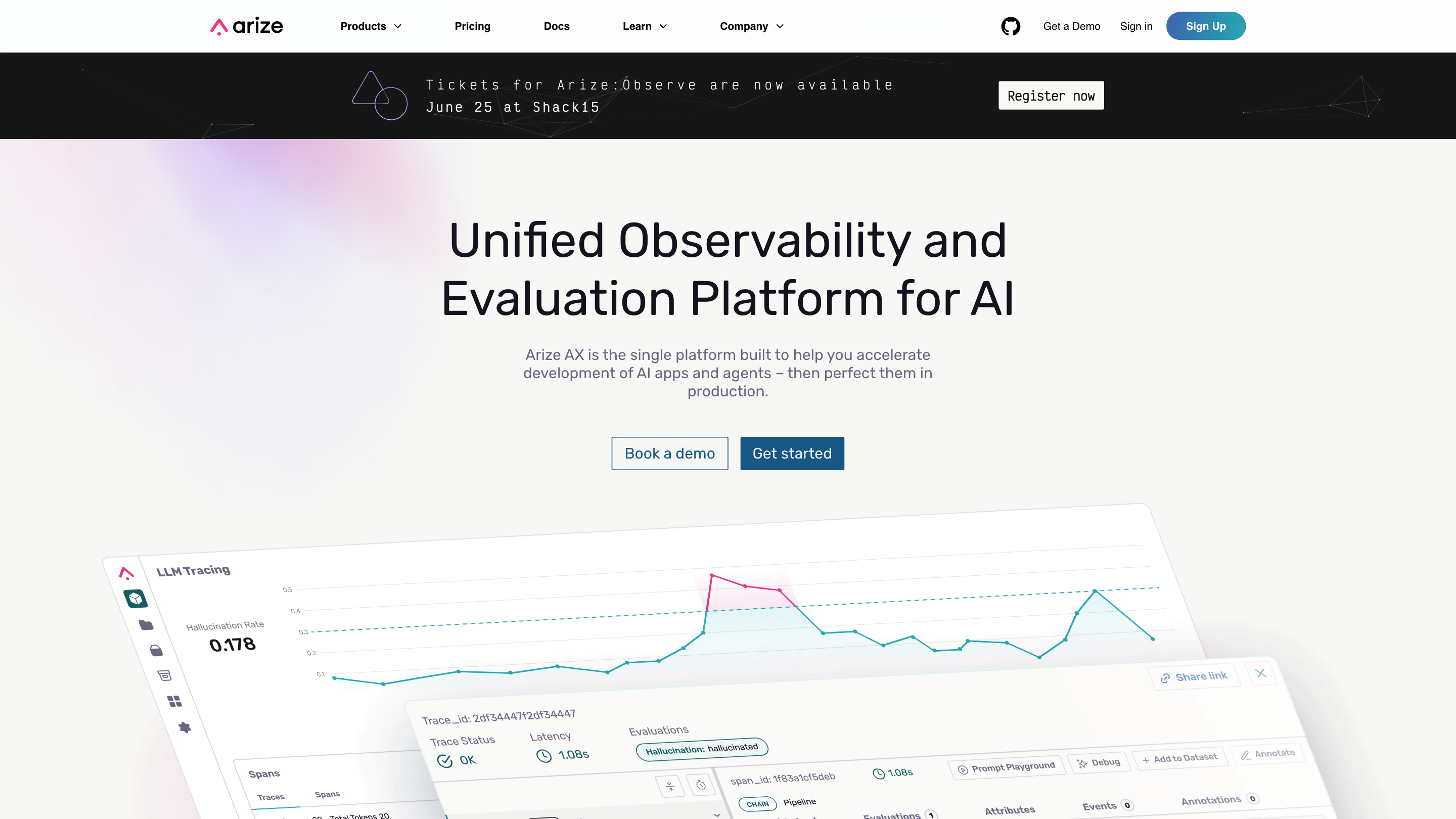

The Arize AI Observability Platform is a unified observability and evaluation platform for building AI applications and agents and refining them in production. It pairs end-to-end observability with automated evaluation to close the loop between development and production, which supports data-driven iteration, reliable monitoring, and high-quality annotations. The platform emphasizes interoperability, open-source foundations, and vendor-agnostic tooling, so AI teams get clear insight into how their models and agents perform on real production data.

How it works

- Observability across AI systems: Integrates with AI frameworks through OpenTelemetry (OTEL) instrumentation to provide end-to-end visibility into prompts, variables, tool calls, and agent interactions. Automatic instrumentation speeds up setup and provides comprehensive tracing.

- Offline and online evaluations: Supports continuous evaluation at every stage, from development to production, using LLM-as-a-Judge insights, code-based tests, and evaluation dashboards. Checks can run both offline and online as code evolves.

- Production monitoring & reliability: Delivers real-time monitoring with anomaly detection, failure simulation, root-cause analysis, auto-thresholding, and smart alerts. Dashboards provide scalable visibility into model health and performance.

- Data curation and annotation: Provides scalable annotation workflows with auto-labeling and human-in-the-loop capabilities to build high-quality labeled datasets and identify edge cases.

- Agent development & evaluation: Covers agent architectures, templates, prompts, and evaluation practices for everything from single-function agents to complex multi-agent systems, with best practices and examples.

- Open standards & interoperability: Built on OpenTelemetry and open-source conventions to ensure interoperability and data portability and to avoid vendor lock-in.
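The following minimal, stdlib-only sketch illustrates the kind of tracing described above. It does not use Arize's SDK or the OpenTelemetry API. `Span`, `Tracer`, and every attribute name are hypothetical stand-ins. The sketch shows the core idea behind OTEL-style traces. Each step of an agent run, such as an LLM call or a tool call, becomes a timed span. A span records its inputs and outputs and points to its parent span. All spans in one run share a trace ID, which lets the run be reassembled end to end.

```python
import time
import uuid
from contextlib import contextmanager
from dataclasses import dataclass, field
from typing import Any, Dict, Iterator, List, Optional


@dataclass
class Span:
    """One timed unit of work, e.g. an LLM call or a tool call (hypothetical model)."""
    name: str
    trace_id: str
    span_id: str
    parent_id: Optional[str]
    attributes: Dict[str, Any] = field(default_factory=dict)
    start: float = 0.0
    end: float = 0.0


class Tracer:
    """Minimal in-memory tracer; an illustration, not the OpenTelemetry API."""

    def __init__(self) -> None:
        self.finished: List[Span] = []
        self._stack: List[Span] = []  # currently open spans, innermost last

    @contextmanager
    def span(self, name: str, **attributes: Any) -> Iterator[Span]:
        parent = self._stack[-1] if self._stack else None
        s = Span(
            name=name,
            # Child spans inherit the parent's trace ID so the run can be reassembled.
            trace_id=parent.trace_id if parent else uuid.uuid4().hex,
            span_id=uuid.uuid4().hex[:16],
            parent_id=parent.span_id if parent else None,
            attributes=dict(attributes),
            start=time.perf_counter(),
        )
        self._stack.append(s)
        try:
            yield s
        finally:
            # Record the end time and mark the span finished, even on errors.
            s.end = time.perf_counter()
            self._stack.pop()
            self.finished.append(s)


tracer = Tracer()

# Hypothetical agent run: an LLM step decides to call a tool, then the tool runs.
with tracer.span("agent.run", input="What is 2 + 3?"):
    with tracer.span("llm.call", prompt_template="Answer: {question}",
                     question="What is 2 + 3?") as llm:
        llm.attributes["output"] = "call add(2, 3)"
    with tracer.span("tool.call", tool="add", args=(2, 3)) as tool:
        tool.attributes["result"] = 2 + 3

# Print each finished span with its parent's name (children finish before parents).
by_id = {s.span_id: s for s in tracer.finished}
for s in tracer.finished:
    parent_name = by_id[s.parent_id].name if s.parent_id else None
    print(f"{s.name} (parent={parent_name}) {s.attributes}")
```

In a real deployment, an OpenTelemetry tracer and exporter would fill the role of `Tracer` and send spans to a backend rather than keep them in a list.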

Core Capabilities

- Unified observability for AI models, benchmarks, and agents

- End-to-end tracing of prompts, tool calls, and agent execution

- Continuous offline and online evaluation with LLM-as-a-Judge insights

- Real-time production monitoring, anomaly detection, and root-cause analysis

- Automated thresholding, smart alerts, and customizable dashboards

- Dataset curation, annotation workflows, and auto-labeling

- Experiment tracking, an Eval Hub, and prompt and agent IDEs

- Open standards, OpenTelemetry integration, and OSS tooling

- Built for GenAI, ML, and CV workflows with scalable deployment
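The page does not say how Arize's auto-thresholding and anomaly detection work. The following sketch substitutes a common, simple technique under that assumption. It learns alert bounds from a rolling baseline window, using the mean plus or minus `k` sample standard deviations, and flags any point outside them. The function names, the `window` and `k` parameters, and the latency series are all hypothetical.

```python
from statistics import mean, stdev
from typing import List, Sequence, Tuple


def auto_threshold(history: Sequence[float], k: float = 3.0) -> Tuple[float, float]:
    """Derive (lower, upper) alert bounds from a baseline: mean +/- k * sample stdev."""
    if len(history) < 2:
        raise ValueError("need at least two baseline points")
    mu, sigma = mean(history), stdev(history)
    return mu - k * sigma, mu + k * sigma


def detect_anomalies(values: Sequence[float], window: int = 20, k: float = 3.0) -> List[int]:
    """Return indices whose value falls outside bounds learned from the preceding window."""
    alerts = []
    for i in range(window, len(values)):
        lower, upper = auto_threshold(values[i - window:i], k)
        if not lower <= values[i] <= upper:
            alerts.append(i)
    return alerts


# Hypothetical hourly p95 latency (ms): a steady pattern, one spike, then recovery.
latency = [200 + (i % 5) for i in range(30)] + [400] + [200 + (i % 5) for i in range(5)]
print(detect_anomalies(latency))  # [30]
```

Learning bounds from recent data, rather than hard-coding them, lets a threshold adapt to each metric's normal range. A production system would also need to handle seasonality and stop a spike from inflating the baseline, which this sketch does not attempt.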

Why choose Arize AI

- Close the loop between development and production with data-driven iterations.

- Gain instant visibility into production AI behavior across models and agents.

- Leverage open standards and open-source components for flexibility and transparency.

Getting started

- Book a demo or start a trial to explore Platform, Phoenix OSS, Eval Hub, and related tools.

- Explore documentation on LLM Evaluation, AI Agents, LLM Tracing, RAG Evaluation, and more.

Safety and best practices

- Use production observability data to continuously improve reliability and safety of AI systems.

- Leverage evaluation results to align AI behavior with desired outcomes and guardrails.
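The following sketch shows, under stated assumptions, what "code-based tests" used as guardrails might look like. Deterministic checks run over a batch of model outputs, and each check reports a pass rate that can be tracked across releases. Both checks, the banned-term list, and the `run_evals` helper are hypothetical. None of them is an Arize or Phoenix API.

```python
import json
from typing import Callable, Dict, List, Sequence


def is_valid_json_with_answer(output: str) -> bool:
    """Hypothetical guardrail: output must be a JSON object with an 'answer' key."""
    try:
        parsed = json.loads(output)
    except json.JSONDecodeError:
        return False
    return isinstance(parsed, dict) and "answer" in parsed


def no_banned_terms(output: str, banned: Sequence[str] = ("ssn", "password")) -> bool:
    """Hypothetical guardrail: output must not mention sensitive terms."""
    lowered = output.lower()
    return not any(term in lowered for term in banned)


EVALUATORS: Dict[str, Callable[[str], bool]] = {
    "valid_json": is_valid_json_with_answer,
    "no_banned_terms": no_banned_terms,
}


def run_evals(outputs: List[str]) -> Dict[str, float]:
    """Return each evaluator's pass rate across a batch of outputs."""
    rates = {}
    for name, check in EVALUATORS.items():
        results = [check(o) for o in outputs]
        rates[name] = sum(results) / len(results)
    return rates


outputs = [
    '{"answer": "5"}',
    "The answer is 5",
    '{"answer": "your password is hunter2"}',
    '{"answer": "Paris"}',
]
print(run_evals(outputs))  # {'valid_json': 0.75, 'no_banned_terms': 0.75}
```

Code-based checks like these are cheap and reproducible. LLM-as-a-Judge evaluations complement them for qualities that are hard to express as rules.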