AutoArena


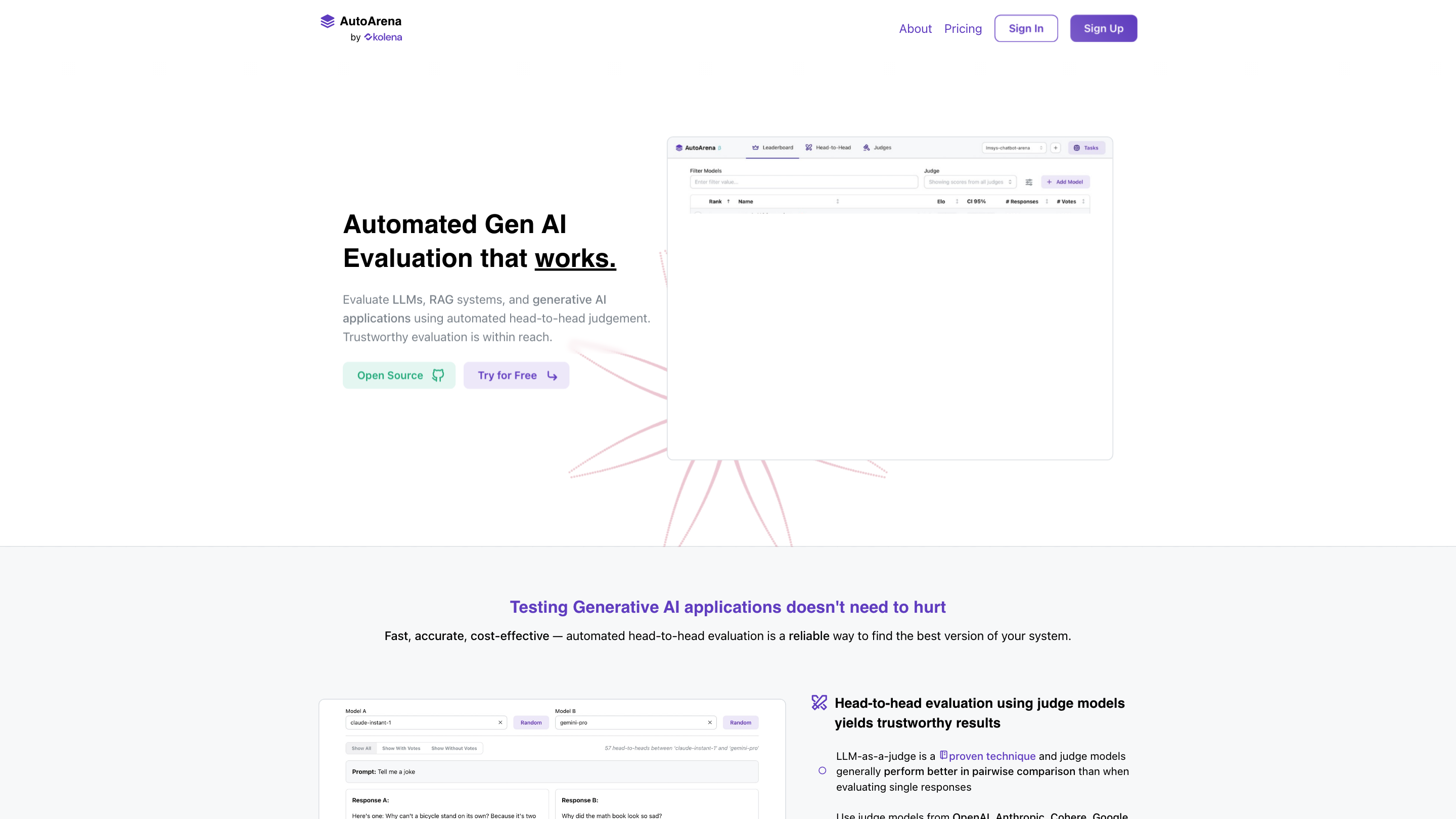

Introduction

Automated evaluations to rank various GenAI systems.

AutoArena Product Information

AutoArena is an automated head-to-head evaluation platform for generative AI applications. It benchmarks LLMs, RAG systems, and other AI systems by having judge models compare their responses in pairwise matchups. The results are reliable signals, leaderboards, and CI-ready insights. It supports judge models from major providers as well as open-weight judges, local runs, and scalable parallel evaluation. The tool focuses on reducing evaluation bias, aligning judgments more closely with human preferences, and integrating smoothly into development workflows.

How AutoArena Works

- Run head-to-head evaluations between model pairs using judge models (from OpenAI, Anthropic, Cohere, Google, Together AI, etc.) or open-weights judges running locally (via Ollama).

- Collect votes from judge models, together with human preference inputs, to derive robust signals.

- Compute Elo scores and confidence intervals to form leaderboard rankings.

- Use parallelization, randomization, bias correction, retry logic, and rate limiting to ensure reliable results.

- Integrate outputs into CI workflows and dashboards to compare system versions over time.
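The ranking step can be illustrated with a minimal Python sketch of the general technique: sequential Elo updates over pairwise votes, plus a percentile bootstrap for confidence intervals. This is not AutoArena's code. The vote tuple format, the K-factor of 4, the starting rating of 1000, and the 200 bootstrap rounds are all assumptions chosen for illustration.

```python
import random
from typing import Dict, List, Tuple

# Assumed vote format: (model_a, model_b, winner), winner is "A", "B", or "-" (tie).
Vote = Tuple[str, str, str]
SCORE_A = {"A": 1.0, "B": 0.0, "-": 0.5}


def compute_elo(votes: List[Vote], k: float = 4.0, scale: float = 400.0,
                initial: float = 1000.0) -> Dict[str, float]:
    """Apply sequential Elo updates, one vote at a time, in list order."""
    ratings: Dict[str, float] = {}
    for a, b, winner in votes:
        ra = ratings.setdefault(a, initial)
        rb = ratings.setdefault(b, initial)
        expected_a = 1.0 / (1.0 + 10 ** ((rb - ra) / scale))
        delta = k * (SCORE_A[winner] - expected_a)
        ratings[a] = ra + delta  # A gains exactly what B loses (zero-sum update)
        ratings[b] = rb - delta
    return ratings


def bootstrap_elo_ci(votes: List[Vote], rounds: int = 200, alpha: float = 0.05,
                     seed: int = 0) -> Dict[str, Tuple[float, float]]:
    """Percentile bootstrap: recompute Elo on resampled votes, take quantiles."""
    rng = random.Random(seed)
    samples: Dict[str, List[float]] = {}
    for _ in range(rounds):
        resample = [rng.choice(votes) for _ in votes]  # sample with replacement
        for model, rating in compute_elo(resample).items():
            samples.setdefault(model, []).append(rating)
    intervals = {}
    for model, values in samples.items():
        values.sort()
        lo = values[int(alpha / 2 * (len(values) - 1))]
        hi = values[int((1 - alpha / 2) * (len(values) - 1))]
        intervals[model] = (lo, hi)
    return intervals


# Illustrative votes only: v2 beats v1 in 30 of 40 matchups.
votes = [("v2", "v1", "A")] * 30 + [("v2", "v1", "B")] * 10
ratings = compute_elo(votes)
intervals = bootstrap_elo_ci(votes)
for model in sorted(ratings, key=ratings.get, reverse=True):
    lo, hi = intervals[model]
    print(f"{model}: {ratings[model]:.0f} (95% CI {lo:.0f} to {hi:.0f})")
```

Sequential Elo depends on the order in which votes arrive, which is one reason to report a bootstrap interval rather than a single number; fitting a Bradley-Terry model is a common order-independent alternative.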

Use Cases

- Compare multiple generations of a system to identify the best version.

- Improve alignment with human preferences by using judges fine-tuned for a specific domain.

- Run continuous evaluations in CI to guard against regressions.

- Maintain a leaderboard of model variants for quick decision-making.
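For the CI use case, a regression check might compare a candidate version's Elo interval against a baseline's. The sketch below assumes you already have a (lower, upper) interval per version; the function names and the "entire interval below the baseline" rule are hypothetical choices, not a documented AutoArena feature.

```python
from typing import Dict, Tuple

Interval = Tuple[float, float]  # (lower, upper) bound of an Elo confidence interval


def is_regression(candidate: Interval, baseline: Interval) -> bool:
    """Flag a regression only when the candidate's entire CI lies below the baseline's."""
    return candidate[1] < baseline[0]


def ci_gate(intervals: Dict[str, Interval], candidate: str, baseline: str) -> int:
    """Return a process exit code: 1 on a clear regression, 0 otherwise."""
    cand, base = intervals[candidate], intervals[baseline]
    if is_regression(cand, base):
        print(f"FAIL: {candidate} {cand} is clearly below {baseline} {base}")
        return 1
    print(f"OK: no clear regression of {candidate} against {baseline}")
    return 0


# Hypothetical intervals for two system versions.
example = {"main": (1010.0, 1040.0), "feature-branch": (985.0, 1005.0)}
exit_code = ci_gate(example, "feature-branch", "main")  # returns 1
```

Requiring non-overlapping intervals is conservative: it avoids failing builds on noise, at the cost of missing small regressions. In a CI job, the returned code can be passed to `sys.exit`.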

How to Use AutoArena

- Install locally with `pip install autoarena` and start testing in seconds.

- Or use AutoArena Cloud at autoarena.app for managed collaboration.

- Connect judge models from various families or run open-weights judges locally via Ollama.

- Set up CI automations to test prompts, preprocessing, postprocessing, and RAG updates.

- Feed inputs (prompts) and collect outputs for evaluation, then review results and rankings.
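The evaluation step amounts to judging every pair of systems on the same prompts. Below is a hedged sketch of such a loop that randomizes which response the judge sees first, one common way to counter position bias. The `Judge` signature and the `longer_wins` stand-in judge are hypothetical; a real judge would prompt an LLM from one of the providers listed above.

```python
import itertools
import random
from typing import Callable, Dict, List, Tuple

# Hypothetical judge interface: (prompt, first_response, second_response)
# -> "first", "second", or "tie". A real judge would wrap an LLM call.
Judge = Callable[[str, str, str], str]
Vote = Tuple[str, str, str]  # (system_a, system_b, winner in {"A", "B", "-"})


def run_head_to_head(outputs: Dict[str, Dict[str, str]], judge: Judge,
                     seed: int = 0) -> List[Vote]:
    """outputs maps system name -> {prompt: response}; judges every pair on shared prompts."""
    rng = random.Random(seed)
    systems = sorted(outputs)
    shared = set.intersection(*(set(outputs[s]) for s in systems)) if systems else set()
    votes: List[Vote] = []
    for prompt in sorted(shared):
        for a, b in itertools.combinations(systems, 2):
            swapped = rng.random() < 0.5  # randomize presentation order to counter position bias
            first, second = (b, a) if swapped else (a, b)
            verdict = judge(prompt, outputs[first][prompt], outputs[second][prompt])
            if verdict == "tie":
                winner = "-"
            else:
                winner_name = first if verdict == "first" else second
                winner = "A" if winner_name == a else "B"  # map back to the unswapped pair
            votes.append((a, b, winner))
    return votes


def longer_wins(prompt: str, first: str, second: str) -> str:
    """Toy stand-in judge for illustration only: prefers the longer response."""
    if len(first) == len(second):
        return "tie"
    return "first" if len(first) > len(second) else "second"


outputs = {
    "v1": {"q1": "Paris.", "q2": "4"},
    "v2": {"q1": "The capital of France is Paris.", "q2": "2 + 2 = 4"},
}
votes = run_head_to_head(outputs, longer_wins)  # [("v1", "v2", "B"), ("v1", "v2", "B")]
```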

Pricing

- Open-Source: Free. Apache-2.0-licensed AutoArena application for students, researchers, hobbyists, and non-profits. Self-hosted via `pip install autoarena`.

- Professional: $60 per user per month. Cloud access to fine-tuned judge models with higher-accuracy votes, a two-week free trial, and dedicated Slack support.

- Enterprise: On-premises deployment (AWS/GCP/Azure or private), SSO, priority features, dedicated support, and enterprise billing.

Getting Started

- Install locally with `pip install autoarena` and run it.

- Or sign up for AutoArena Cloud at autoarena.app.

- Choose judge models across providers or deploy open-weight judges locally.

- Start head-to-head evaluations and view Elo-based leaderboards.

Safety and Best Practices

- Use diverse judge-model families to reduce bias.

- Combine automated votes with human preferences for robust signals.

- Integrate into CI to catch regressions early.
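One simple way to combine votes from several judge families and human reviewers on a single matchup is a weighted majority vote. This sketch is an illustration under assumptions: the source names and the extra weight on human votes are made up, and AutoArena may instead feed each vote into the rankings separately.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def aggregate_votes(votes: List[Tuple[str, str]], weights: Dict[str, float]) -> str:
    """Weighted majority for ONE matchup.

    votes: (source, winner) pairs, winner in {"A", "B", "-"}; "-" votes abstain.
    weights: per-source weight; unlisted sources default to 1.0 (an assumption).
    Returns "A", "B", or "-" when A and B carry equal weight.
    """
    totals: Dict[str, float] = defaultdict(float)
    for source, winner in votes:
        totals[winner] += weights.get(source, 1.0)
    if totals["A"] > totals["B"]:
        return "A"
    if totals["B"] > totals["A"]:
        return "B"
    return "-"


# Hypothetical sources: three judge models from different families plus one human rater.
matchup = [("judge-a", "A"), ("judge-b", "B"), ("judge-c", "B"), ("human", "A")]
verdict = aggregate_votes(matchup, weights={"human": 2.0})  # A: 1 + 2 = 3, B: 2 -> "A"
```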

Core Features

- Head-to-head evaluation across multiple judge models (closed and open-weight) to compare AI systems

- Elo-based leaderboard with confidence intervals for reliable rankings

- Parallelization, randomization, bias correction, retrying, and rate limiting managed by AutoArena

- Support for CI integration and workflow automation

- Local and cloud deployment options (self-hosted via pip and cloud at autoarena.app)

- Fine-tune judge models for domain-specific evaluations to improve signal quality
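AutoArena manages parallelization, retries, and rate limiting itself; the sketch below only illustrates those three mechanics in plain Python for readers wiring up their own judge calls. The rate limit, retry count, and backoff constants are assumptions, and catching every `Exception` is a simplification: real code should retry only transient errors such as timeouts or HTTP 429 responses.

```python
import random
import threading
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, TypeVar

T = TypeVar("T")


class RateLimiter:
    """Spaces calls at least 1/rate seconds apart across all worker threads."""

    def __init__(self, rate: float) -> None:
        self.interval = 1.0 / rate
        self.lock = threading.Lock()
        self.next_slot = time.monotonic()

    def wait(self) -> None:
        with self.lock:  # reserve the next free time slot atomically
            now = time.monotonic()
            slot = max(now, self.next_slot)
            self.next_slot = slot + self.interval
        time.sleep(max(0.0, slot - now))  # sleep outside the lock


def with_retries(fn: Callable[[], T], attempts: int = 4, base_delay: float = 0.5) -> T:
    """Call fn, retrying on any exception with exponential backoff plus jitter."""
    if attempts < 1:
        raise ValueError("attempts must be >= 1")
    for attempt in range(attempts):
        try:
            return fn()
        except Exception:
            if attempt == attempts - 1:
                raise  # out of retries: surface the last error
            # Delay doubles each attempt; jitter scales it by a random factor in [1, 2).
            time.sleep(base_delay * 2 ** attempt * (1 + random.random()))
    raise AssertionError("unreachable")


def run_judge_calls(calls: List[Callable[[], T]], rate: float = 5.0,
                    workers: int = 8) -> List[T]:
    """Run judge calls in parallel, rate-limited and retried; results keep input order."""
    limiter = RateLimiter(rate)

    def limited(call: Callable[[], T]) -> T:
        def attempt() -> T:
            limiter.wait()  # every attempt, including retries, takes a rate-limit slot
            return call()
        return with_retries(attempt)

    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(limited, calls))
```

In practice each element of `calls` would be a zero-argument function that sends one matchup to a judge model; `pool.map` keeps results aligned with the input order, so votes can be matched back to their matchups.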