Ava PLS

Introduction

Local language models for your desktop.

Ava PLS Product Information

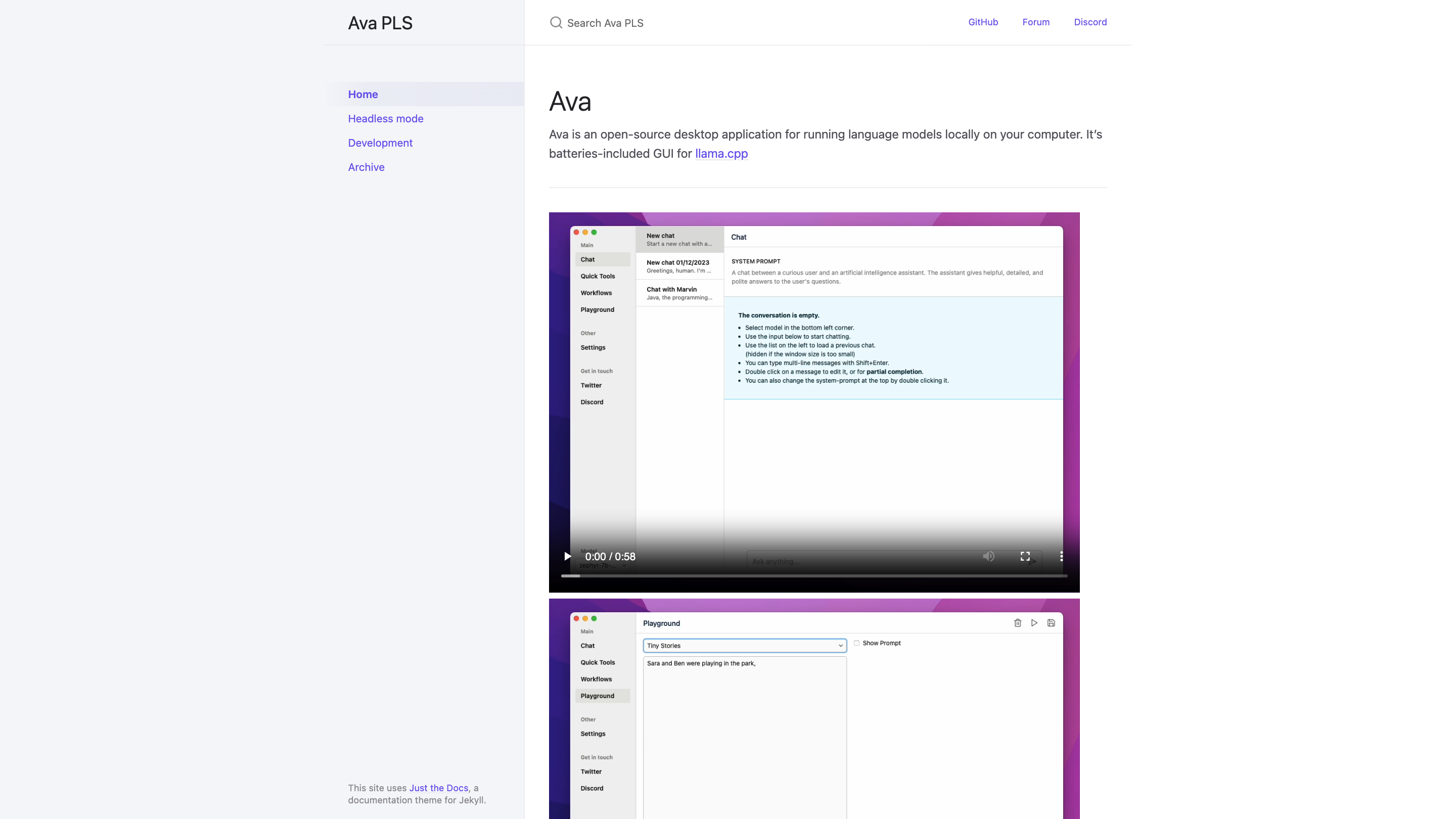

Ava is an open-source desktop application for running language models locally on your computer. It wraps llama.cpp in a batteries-included graphical user interface (GUI), so models run offline without a cloud service. The project uses Zig and C++ (llama.cpp), SQLite for local data handling, and a modern frontend stack (Preact, Preact Signals, Twind) for a responsive UI. Ava is available as prebuilt artifacts or can be built from source, and a headless build option supports non-GUI use.

How Ava Works

- Local model execution: Run language models directly on your machine without requiring cloud access.

- GUI-based workflow: A user-friendly desktop interface to configure and execute models, manage sessions, and view outputs.

- Build options: Download prebuilt binaries, or build from source with Zig using zig build run.

- Headless mode: Build with -Dheadless=true (zig build run -Dheadless=true) to run Ava without the GUI for automation or server-style tasks.

Getting Started

- Download or build Ava. Obtain prebuilt artifacts from GitHub Actions or build locally with Zig.

- Launch Ava. Open the GUI to start configuring models, selecting weights, and issuing prompts.

- Run models locally. Execute inference tasks directly on your computer, with results rendered in the app.

- Optional headless run. Build with -Dheadless=true to operate Ava in headless mode for scripting and automation.
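
For scripted use, the build-and-run step above could be wrapped in a small helper. The following is a minimal sketch, not part of Ava itself: it assumes zig is on your PATH and that you have a local checkout of the Ava source. The function names ava_build_command and run_ava are hypothetical; only zig build run and -Dheadless=true come from the project description.

```python
import shutil
import subprocess
from pathlib import Path


def ava_build_command(headless: bool = False) -> list[str]:
    """Return the Zig command that builds and runs Ava."""
    command = ["zig", "build", "run"]
    if headless:
        # Build option named in the project description; skips the GUI.
        command.append("-Dheadless=true")
    return command


def run_ava(source_dir: str, headless: bool = False) -> int:
    """Run the build command inside a source checkout and return its exit code.

    Assumption: source_dir is the root of an Ava checkout (hypothetical path).
    """
    if shutil.which("zig") is None:
        raise RuntimeError("zig was not found on PATH")
    checkout = Path(source_dir)
    if not checkout.is_dir():
        raise FileNotFoundError(f"no such directory: {checkout}")
    # Blocks until the process exits; output goes to the current terminal.
    return subprocess.run(ava_build_command(headless), cwd=checkout).returncode
```

Calling run_ava("path/to/ava", headless=True) would then start a headless build from a script, where path/to/ava is a placeholder for your own checkout location.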

Tech Stack

- Zig programming language

- C++ (llama.cpp) for model execution

- SQLite for data management

- Frontend: Preact, Preact Signals, Twind

Safety and Licensing

- Ava is MIT-licensed and intended for local, offline use of language models. Respect model licenses and data privacy when handling prompts and outputs.

Core Features

- Local execution of language models with a GUI

- Open-source MIT-licensed project

- Built around llama.cpp for efficient on-device inference

- Zig-based build system with support for zig build run and zig build run -Dheadless=true

- Integrated frontend stack (Preact, Preact Signals, Twind) for a responsive UI

- SQLite for lightweight local data management

- Prebuilt artifacts from GitHub Actions, or builds from source

- Headless mode for automation and scripting