CopilotChat

Open siteCoding & Development

Introduction

Simplify code generation with TDD process

CopilotChat Product Information

Copilot Chat: Code Generation with Test-Driven Development is an AI-assisted code generation tool designed to produce software code guided by test cases and user requirements. It orchestrates a three-step workflow—define tests, generate code, and validate—using a language model (LLM) to iteratively refine code until all tests pass. The approach emphasizes Test-Driven Development (TDD) to ensure correctness and alignment with expected behavior from the outset.

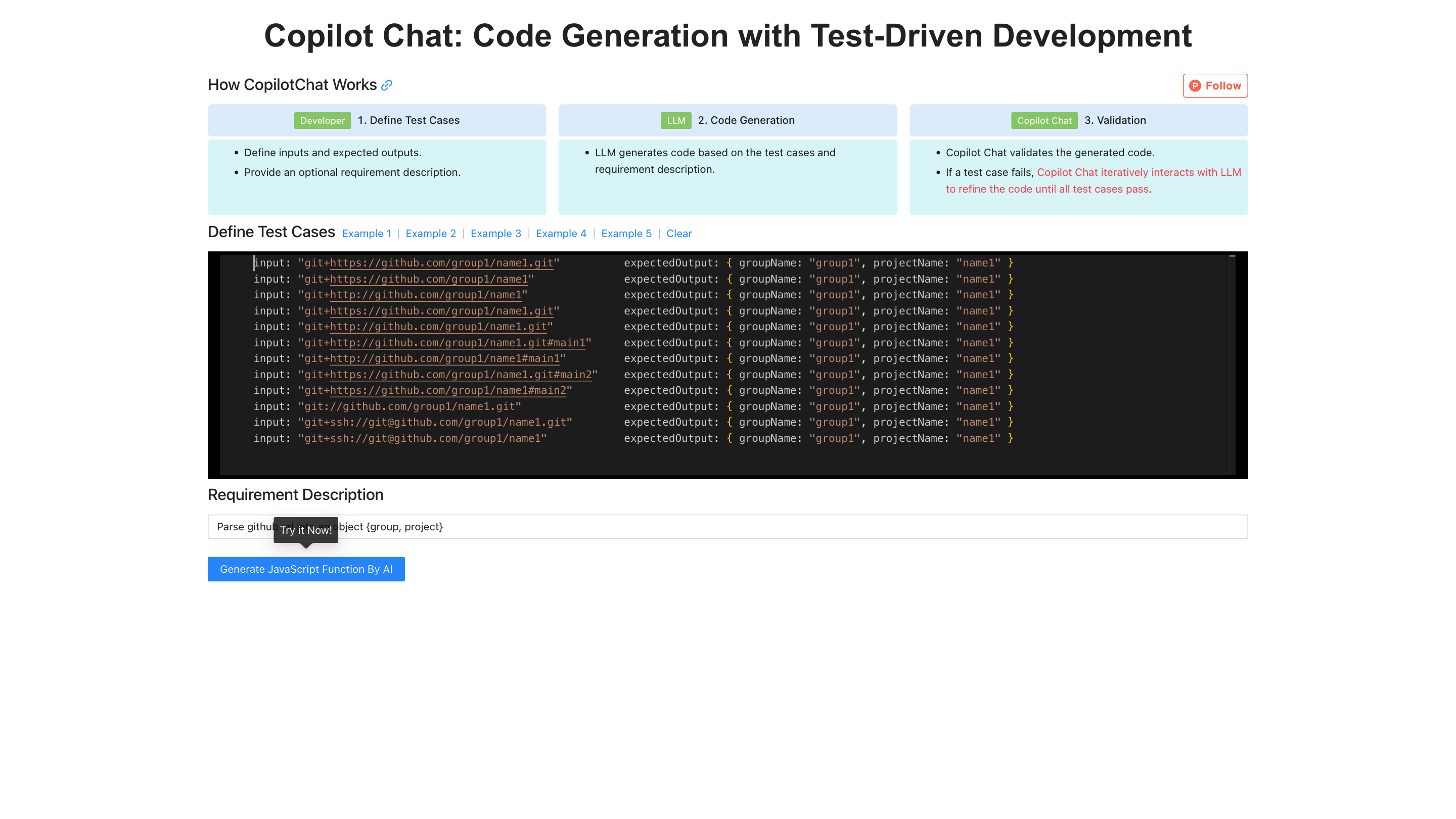

How CopilotChat Works

- Define Test Cases: The user defines inputs, expected outputs, and an optional requirement description that captures the intended functionality.

- Code Generation: The LLM generates code based on the provided test cases and requirement description.

- Validation: Copilot Chat runs the generated code against the test cases. If any test fails, it interacts with the LLM to refine the code until all tests pass.

Example Interaction Flow

- Requirement: "Generate a JavaScript function that sums an array of numbers."

- Tests: [sum([1,2,3]) => 6, sum([]) => 0, sum([-1,1]) => 0]

- LLM generates the function, tests run, and any failing case prompts a refined implementation.

How to Use CopilotChat

- Provide Test Cases: List representative inputs and the expected outputs. Include edge cases to ensure robustness.

- Add an Optional Requirement Description: Clarify constraints, performance expectations, or style guidelines.

- Review Generated Code: Examine the produced code and its alignment with tests and description.

- Run Validation: Trigger the automated test suite to verify correctness.

- Iterate: If tests fail, request refinements and re-run validation until all tests pass.

Examples

- Example 1: JavaScript function to compute the factorial for non-negative integers with error handling for invalid input.

- Example 2: Python function to merge two sorted lists into a single sorted list without duplicates.

- Example 3: TypeScript utility to debounce a function with a configurable delay.

- Example 4: Java method to perform a binary search on a sorted array.

- Example 5: Go function to parse a CSV line into a record structure with proper handling of quoted fields.

Safety and Best Practices

- Use well-scoped test cases to prevent overfitting to the test suite.

- Validate not only correctness but also performance characteristics and memory usage where relevant.

- Avoid leaking secrets or credentials in test data or generated code.

- Be mindful of licensing and attribution when reusing generated code.

Core Features

- Test-driven code generation pipeline: define tests, generate code, validate with iterative refinement

- Automated validation loop that reworks code until all tests pass

- Support for multiple languages and environments via LLM-driven generation

- Edge-case and error-handling awareness baked into test definitions

- Interactive refinement prompts to steer code toward desired design

- Clear separation between requirements and implementation guidance

- Quick iteration cycles to accelerate development and learning