Google Gemma Chat

Open siteResearch & Data Analysis

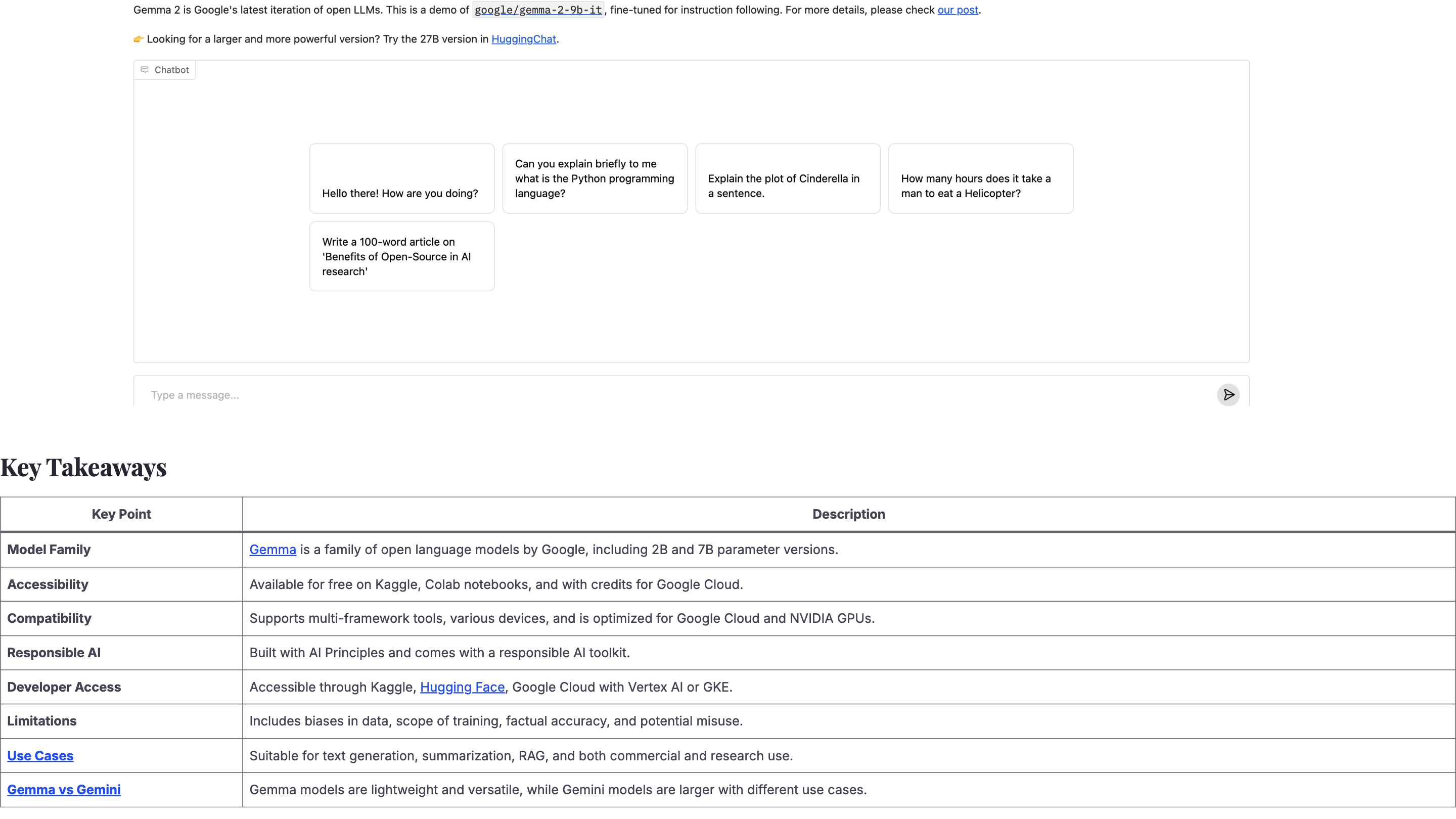

Introduction

Cutting-edge open language model.

Google Gemma Chat Product Information

Google Gemma is a family of lightweight open language models from Google, designed for safe and reliable AI use across devices and platforms. It is available in 2B and 7B parameter sizes, each offered as a base (pre-trained) and an instruction-tuned variant, and it is built from the same research and technology used to create Google's Gemini models. Gemma is optimized to run across devices, on Google Cloud, and with NVIDIA GPU acceleration. The family emphasizes accessibility, responsible AI practices, and flexible integration for development and research.

Key Highlights

- Lightweight open LLM family with 2B and 7B parameter sizes

- Base and instruction-tuned variants available

- Cross-device compatibility: runs on laptops, desktops, IoT devices, mobile, and cloud

- Optimized for Google Cloud (Vertex AI, GKE) and NVIDIA GPUs

- Free access on Kaggle and Google Colab; credits available for Google Cloud

- Accessible via multiple ecosystems (Kaggle, Colab, Google Cloud, Hugging Face)

- Responsible AI toolkit and clear guidance on limitations and safe use
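Base variants take plain text, while the instruction-tuned variants expect prompts wrapped in turn markers. As a rough illustration, the sketch below renders a conversation into the `<start_of_turn>` / `<end_of_turn>` format used by Gemma's instruction-tuned checkpoints. `format_gemma_chat` is a hypothetical helper; in practice `tokenizer.apply_chat_template` in Hugging Face transformers builds this string from the template shipped with the model. The sketch assumes only user and assistant turns (no system role), which is a simplification.

```python
# Minimal sketch of the prompt format for Gemma's instruction-tuned variants.
# Assumption: only "user" and "assistant" roles are handled; prefer the
# model's own chat template (tokenizer.apply_chat_template) in real code.


def format_gemma_chat(messages: list[dict[str, str]], add_generation_prompt: bool = True) -> str:
    """Render user/assistant messages into Gemma's turn markup (hypothetical helper)."""
    role_map = {"user": "user", "assistant": "model"}  # Gemma names the assistant turn "model"
    parts = ["<bos>"]
    for msg in messages:
        role = role_map.get(msg["role"])
        if role is None:
            raise ValueError(f"unsupported role: {msg['role']!r}")
        parts.append(f"<start_of_turn>{role}\n{msg['content']}<end_of_turn>\n")
    if add_generation_prompt:
        # Open a model turn so generation continues with the model's reply.
        parts.append("<start_of_turn>model\n")
    return "".join(parts)


if __name__ == "__main__":
    print(format_gemma_chat([{"role": "user", "content": "Summarize LoRA in one sentence."}]))
```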

How to Use Gemma

- Access points: Kaggle, Google Colab, Google Cloud (Vertex AI or GKE), Hugging Face Inference Endpoints

- Deployment options: Cloud workflows, edge devices, or local development environments

- Tuning: Start from either the base or the instruction-tuned variant; parameter-efficient fine-tuning techniques such as LoRA can be applied where the toolchain supports them

- Use cases: Text generation, summarization, RAG (retrieval-augmented generation), and both commercial and research tasks
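LoRA freezes a pre-trained weight matrix W and learns a low-rank update, so an adapted layer computes y = W x + (alpha / r) * B A x with only A and B trained. The pure-Python sketch below illustrates that idea on a single layer. `LoRALinear` and `lora_param_count` are hypothetical names, the initialization (small random A, zero B) follows the common LoRA recipe, and fine-tuning actual Gemma weights would go through a library such as Hugging Face PEFT rather than code like this.

```python
# Illustrative, pure-Python sketch of one LoRA-adapted linear layer.
# Assumption: toy sizes and plain lists; real tuning uses a framework.
import random

Matrix = list[list[float]]
Vector = list[float]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a matrix (stored as a list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def lora_param_count(d_out: int, d_in: int, rank: int) -> int:
    """Trainable parameters in the LoRA factors B (d_out x r) and A (r x d_in)."""
    return d_out * rank + rank * d_in


class LoRALinear:
    """A frozen weight W plus a trainable low-rank update (alpha / r) * B @ A."""

    def __init__(self, weight: Matrix, rank: int, alpha: float, seed: int = 0):
        d_out, d_in = len(weight), len(weight[0])
        rng = random.Random(seed)
        self.weight = weight  # pre-trained W (d_out x d_in), never updated
        # A starts with small random values and B starts at zero, so the
        # adapted layer initially produces exactly the base layer's output.
        self.a = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(rank)]
        self.b = [[0.0] * rank for _ in range(d_out)]
        self.scale = alpha / rank

    def forward(self, x: Vector) -> Vector:
        """Compute y = W x + (alpha / r) * B (A x)."""
        base = matvec(self.weight, x)
        delta = matvec(self.b, matvec(self.a, x))
        return [y + self.scale * d for y, d in zip(base, delta)]

    def trainable_params(self) -> int:
        return lora_param_count(len(self.b), len(self.a[0]), len(self.a))


if __name__ == "__main__":
    layer = LoRALinear([[1.0, 0.0], [0.0, 1.0]], rank=1, alpha=2.0)
    print(layer.forward([3.0, 4.0]))  # B is zero, so this equals W x
    print(lora_param_count(2048, 2048, 8))  # 32768
```

The saving comes from the rank: for a 2048 x 2048 projection with r = 8, the adapter has 32,768 trainable parameters versus 4,194,304 in the full matrix, under 1%.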

How It Works

- Choose a Gemma variant (2B or 7B; base or instruction-tuned) based on resource availability and task needs.

- Run on compatible hardware (laptops, desktops, IoT, mobile, or cloud GPUs).

- Integrate via Kaggle, Colab, Vertex AI, GKE, or Hugging Face endpoints for inference and experimentation.

- Leverage the provided responsible AI toolkit for safe deployment and usage governance.
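Choosing between the 2B and 7B variants usually starts with memory. The back-of-the-envelope sketch below assumes nominal parameter counts taken from the variant names and counts weights only (no activations, KV cache, or framework overhead), so its results are lower bounds. `weight_memory_gib`, `pick_variant`, and the 1.2 headroom factor are illustrative assumptions, not official sizing guidance.

```python
# Rough sizing sketch: memory needed just to hold the model weights.
# Assumptions: nominal sizes read off the variant names (the released
# checkpoints have somewhat more parameters), weights-only accounting.
from typing import Optional

NOMINAL_PARAMS = {"2B": 2e9, "7B": 7e9}
BYTES_PER_PARAM = {"float32": 4.0, "bfloat16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gib(num_params: float, dtype: str) -> float:
    """Memory for the weights alone, in GiB (2**30 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 2**30


def pick_variant(available_gib: float, dtype: str, headroom: float = 1.2) -> Optional[str]:
    """Return the largest variant whose weights, times a headroom factor, fit.

    headroom is an arbitrary safety margin (assumption) for runtime overhead.
    """
    fitting = [
        name
        for name, n in NOMINAL_PARAMS.items()
        if weight_memory_gib(n, dtype) * headroom <= available_gib
    ]
    return max(fitting, key=NOMINAL_PARAMS.__getitem__) if fitting else None


if __name__ == "__main__":
    for name, n in NOMINAL_PARAMS.items():
        for dtype in ("bfloat16", "int4"):
            print(f"{name} @ {dtype}: {weight_memory_gib(n, dtype):.1f} GiB")
    print(pick_variant(8.0, "bfloat16"))
```

At bfloat16 the nominal 2B weights come to about 3.7 GiB and the 7B weights to about 13 GiB, so an 8 GiB budget selects `"2B"`; quantizing to int4 shrinks the 7B estimate to roughly 3.3 GiB, which is what makes laptop and edge deployment plausible.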

Access and Deployment Ecosystem

- Kaggle: Free access to Gemma models for experimentation

- Google Colab: Free tier plus paid plans that provide additional compute

- Google Cloud: $300 in free credits for new customers to get started with deployment

- Vertex AI / GKE: Production-grade deployment and scalable training

- Hugging Face: Inference Endpoints integration for broader accessibility
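A Hugging Face Inference Endpoint serving a text-generation model is called with an authenticated JSON POST. The sketch below only builds that request with the standard library, assuming the endpoint exposes the usual text-generation interface (`inputs` plus a `parameters` object such as `max_new_tokens`). `build_generation_request` is a hypothetical helper, and the URL and token are placeholders you would replace with your own. The prompt is sent as-is, so for an instruction-tuned checkpoint you would normally apply the chat template first.

```python
# Sketch: build (but do not send) a request to a text-generation endpoint.
# Assumptions: standard text-generation JSON interface; placeholder URL/token.
import json
import urllib.request


def build_generation_request(
    url: str, token: str, prompt: str, max_new_tokens: int = 128
) -> urllib.request.Request:
    """Return a POST request carrying the prompt and generation parameters."""
    payload = {"inputs": prompt, "parameters": {"max_new_tokens": max_new_tokens}}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    # Placeholders (assumptions): substitute your own endpoint URL and access token.
    req = build_generation_request(
        "https://YOUR-ENDPOINT-URL", "YOUR_HF_TOKEN", "Explain RAG in two sentences."
    )
    print(req.get_method(), req.full_url)
    # To actually send it (requires network access and a running endpoint):
    # with urllib.request.urlopen(req) as resp:
    #     print(json.load(resp))
```

Sending the request with `urllib.request.urlopen` returns the endpoint's JSON response; for text-generation endpoints this is typically a list of objects with a `generated_text` field.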

Limitations and Considerations

- Biases and data gaps in training data can affect outputs

- Scope of training data limits domain expertise and up-to-date knowledge

- Potential for misuse or privacy concerns; responsible use and governance are essential
