GPUX


Introduction

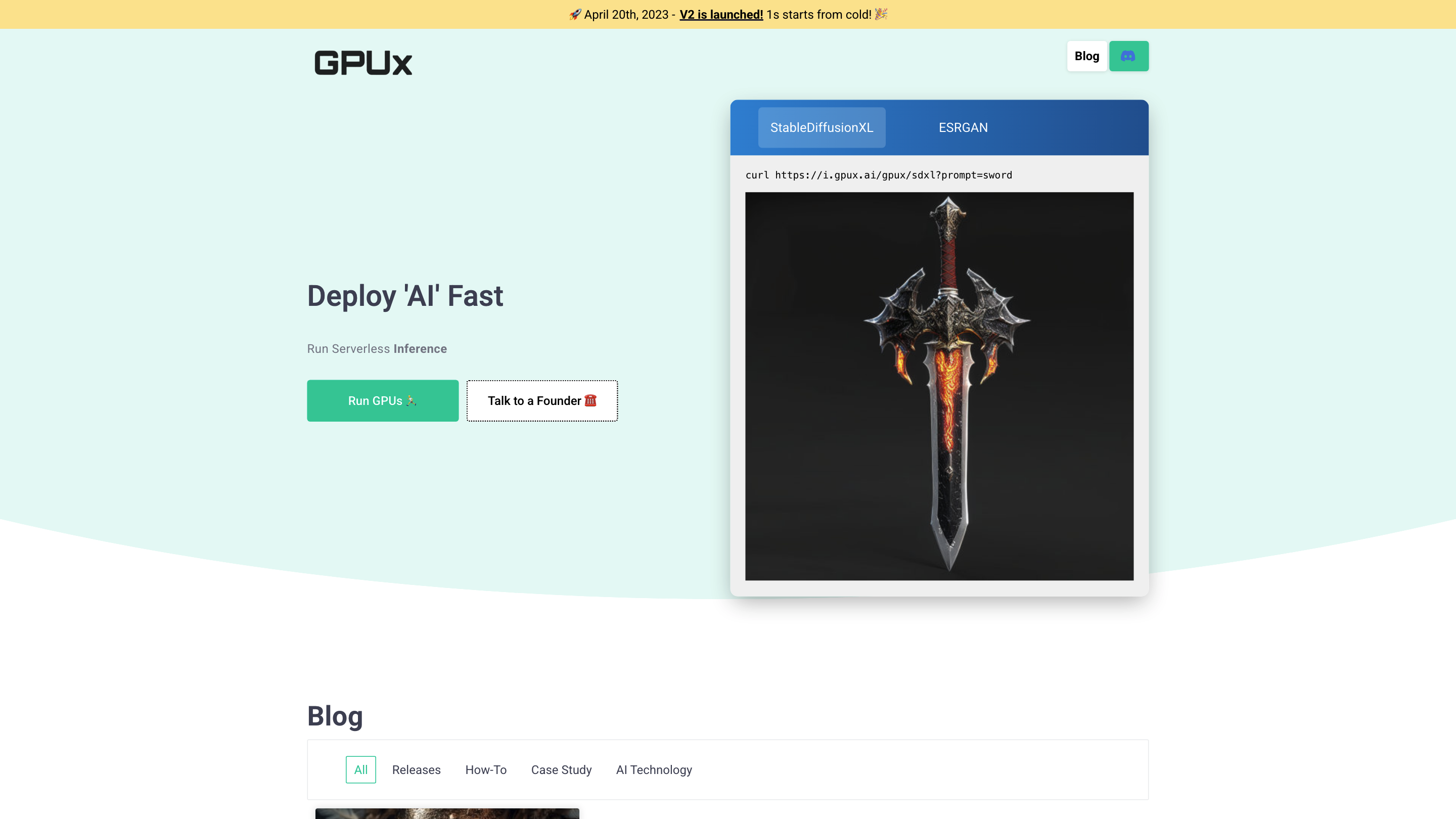

GPUX is a platform for AI and machine learning workloads with fast GPU resources.

GPUX Product Information

GPUX is an AI acceleration and inference platform built around fast, serverless, GPU-based execution of AI models. Since its launch, the project has highlighted 1-second cold starts, cloud-native inference, and optimized runtimes for models such as Stable Diffusion XL, ESRGAN, and Whisper. The platform promotes rapid deployment, scalability, and private model hosting with peer-to-peer (P2P) capabilities. It also emphasizes matching demanding ML workloads to the right hardware.

Key Focus

- Serverless inference with GPU-backed runtimes

- Support for image and video generation (Stable Diffusion XL), upscaling (ESRGAN), and audio transcription (Whisper)

- Speed optimizations (e.g., 50% faster Stable Diffusion XL on an RTX 4090) and fast cold starts

- Private model hosting and P2P sharing of model requests

- A small team with a regional presence
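The listing does not say which metric or baseline the "50% faster" figure uses. The sketch below shows how far apart the two common readings are. It uses a hypothetical 3-second baseline per image, not a published GPUX number, and the helper names are illustrative only.

```python
def latency_if_throughput_gain(baseline_s: float, pct: float) -> float:
    """Read "pct% faster" as pct% more throughput: time = baseline / (1 + pct/100)."""
    return baseline_s / (1 + pct / 100)


def latency_if_time_cut(baseline_s: float, pct: float) -> float:
    """Read "pct% faster" as pct% less time: time = baseline * (1 - pct/100)."""
    return baseline_s * (1 - pct / 100)


if __name__ == "__main__":
    baseline = 3.0  # hypothetical seconds per image before optimization
    print(f"{latency_if_throughput_gain(baseline, 50):.2f}")  # 2.00
    print(f"{latency_if_time_cut(baseline, 50):.2f}")         # 1.50
```

Under the throughput reading, the same claim yields 2.0 s per image. Under the time-reduction reading, it yields 1.5 s.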

How It Works

- Deploy AI models (e.g., Stable Diffusion XL, AlpacaLLM, Whisper) on GPU-backed runtimes.

- Run in a serverless fashion to achieve quick startup times and scalable inference.

- Optionally enable private model sharing or P2P requests for collaboration with, or use by, other organizations.

- Access resources and tooling through the GPUX ecosystem, including blogs and technical case studies.
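The cold-start idea behind serverless inference can be sketched generically. The model is loaded on the first request, which is the cold start, and then reused for later, warm requests. This is a minimal illustration, not GPUX's runtime or API. `LazyModelRuntime` and the loader are hypothetical, and the sleep is a stand-in for loading weights onto a GPU.

```python
import time
from typing import Any, Callable, Optional


class LazyModelRuntime:
    """Hypothetical serverless-style wrapper: load the model on the first
    request (cold start) and reuse it for every later request (warm)."""

    def __init__(self, loader: Callable[[], Callable[[Any], Any]]) -> None:
        self._loader = loader  # expensive: builds/loads the model
        self._model: Optional[Callable[[Any], Any]] = None
        self.cold_start_seconds: Optional[float] = None

    def invoke(self, payload: Any) -> Any:
        if self._model is None:
            # Cold start: pay the loading cost once and record how long it took.
            start = time.perf_counter()
            self._model = self._loader()
            self.cold_start_seconds = time.perf_counter() - start
        # Warm path: the already-loaded model serves the request directly.
        return self._model(payload)


if __name__ == "__main__":
    def fake_loader() -> Callable[[str], str]:
        time.sleep(0.05)  # simulated weight loading
        return lambda text: text.upper()  # stand-in "model"

    runtime = LazyModelRuntime(fake_loader)
    print(runtime.invoke("hello"))  # HELLO (cold request)
    print(runtime.invoke("world"))  # WORLD (warm request)
    print(runtime.cold_start_seconds is not None)  # True
```

A platform advertising 1-second cold starts is, in these terms, claiming that the cold branch stays fast even for large models.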

Use Cases

- Fast image generation and upscaling

- Voice and audio transcription with Whisper

- Private, collaborative AI model usage with P2P sharing

Safety and Legal Considerations

- Ensure responsible use of AI models and compliance with licensing terms for deployed models and data.

Core Features

- 1s cold-start serverless GPU inference

- Optimized runtimes for Stable Diffusion XL and related AI models

- Support for ESRGAN image upscaling and Whisper transcription

- Private model hosting and P2P model request sharing

- Move fast: rapid deployment and iteration with GPU-accelerated pipelines

- Team and partner ecosystem with contact points and regional presence

- Documentation, blog posts, and case studies to guide deployment