Hugging Face

Introduction

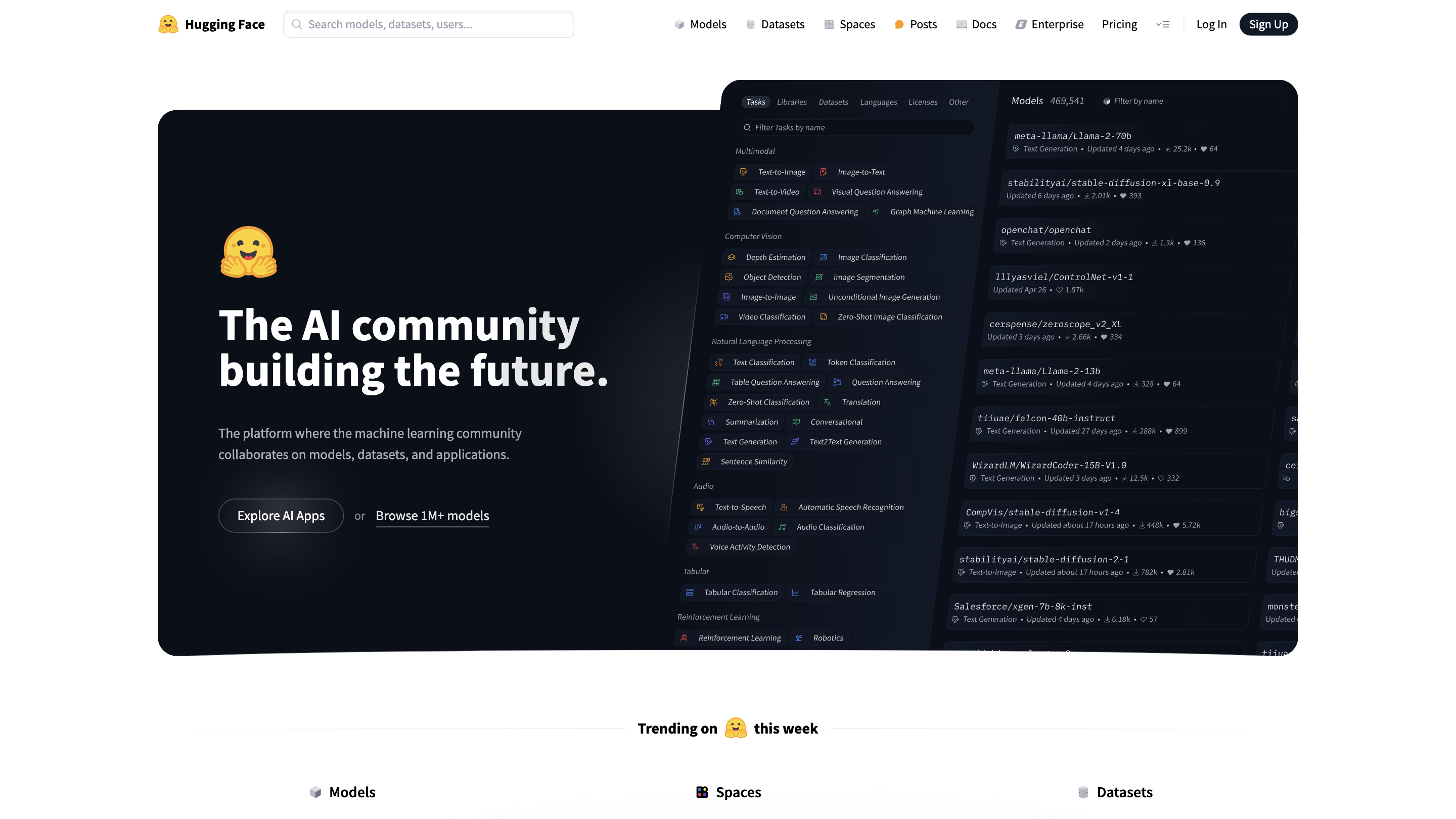

The AI community building the future

Hugging Face Product Information

Hugging Face is a collaborative AI community platform that accelerates the creation, discovery, and deployment of AI models, datasets, and applications. It provides a central hub where researchers and developers share state-of-the-art models, datasets, apps hosted on Spaces, and enterprise solutions. The platform emphasizes openness, interoperability, and scalable tooling for ML projects across text, image, audio, video, and 3D modalities.

How to Use Hugging Face

- Browse Models, Datasets, and Spaces. Explore the catalog of pre-trained and fine-tuned models, datasets, and interactive Spaces (web apps) to find resources that fit your task.

- Sign Up / Sign In. Create an account to upload your own models, datasets, and Spaces, or to access enterprise features.

- Publish and Share. Upload artifacts (models, datasets) with metadata, licenses, and usage guidelines; publish as public or private to collaborate with others.

- Deploy and Run Inference. Use the provided inference endpoints, or deploy on managed hardware to serve predictions at scale.

- Enterprise Solutions. If needed, leverage enterprise-grade security, access controls, SSO, audit logs, and dedicated support.
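
The browsing step above can also be done programmatically. The following is a minimal sketch, assuming the public Hub endpoint `https://huggingface.co/api/models` accepts `search` and `limit` query parameters and returns a JSON list whose entries carry an `id` field. The function names `build_model_search_url` and `search_models` are hypothetical helpers introduced here for illustration only.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

# Assumed public endpoint for listing models on the Hub.
HUB_MODELS_API = "https://huggingface.co/api/models"


def build_model_search_url(query: str, limit: int = 5) -> str:
    """Build a search URL for the Hub model listing (hypothetical helper)."""
    params = {"search": query, "limit": limit}
    return f"{HUB_MODELS_API}?{urlencode(params)}"


def search_models(query: str, limit: int = 5) -> list:
    """Fetch matching model ids. Needs network access; assumes each
    returned entry is a JSON object with an "id" key."""
    with urlopen(build_model_search_url(query, limit), timeout=10) as resp:
        return [entry["id"] for entry in json.load(resp)]


if __name__ == "__main__":
    # Only the URL is built here, so this runs without a network connection.
    print(build_model_search_url("sentiment analysis", limit=3))
```

Calling `search_models("sentiment analysis")` would then return a short list of model ids to inspect on the site. Publishing and deployment require an account and are not covered by this sketch.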

What You Can Build

- State-of-the-art ML models (Transformers, Diffusers, PEFT, etc.)

- Data processing pipelines and datasets for training and evaluation

- Interactive web apps (Spaces) that demonstrate or test AI capabilities

- End-to-end ML workflows from data to deployment

Safety and Legal Considerations

- Respect licenses and terms of use for models and datasets.

- Be mindful of data privacy and licensing when deploying or sharing artifacts, especially in enterprise contexts.
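
One way to act on the licensing point is to check a model's declared license before using it. The sketch below assumes the model card is a README file that starts with a metadata block delimited by `---` lines and containing a `license:` entry. The helper names and the `ALLOWED_LICENSES` set are hypothetical and should be replaced with your own policy. The parsing is deliberately simple and is not a full YAML parser.

```python
from typing import Optional

# Hypothetical allow-list; adjust to your organization's policy.
ALLOWED_LICENSES = {"apache-2.0", "mit"}


def read_license(card_text: str) -> Optional[str]:
    """Return the value of the `license:` entry in the leading metadata
    block of a model card, or None if there is no such block or entry."""
    lines = card_text.splitlines()
    if not lines or lines[0].strip() != "---":
        return None  # no metadata block at the top of the card
    for line in lines[1:]:
        if line.strip() == "---":
            break  # end of the metadata block
        key, sep, value = line.partition(":")
        if sep and key.strip() == "license":
            return value.strip() or None
    return None


def is_license_allowed(card_text: str) -> bool:
    """True only when a license is declared and is on the allow-list."""
    return read_license(card_text) in ALLOWED_LICENSES


if __name__ == "__main__":
    card = "---\nlicense: apache-2.0\ntags:\n- text-classification\n---\n# My model\n"
    print(read_license(card), is_license_allowed(card))
```

A card with no declared license is treated as not allowed, which is the conservative choice for enterprise use.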

Core Features

- Extensive catalog of models, datasets, and Spaces for rapid experimentation

- Open-source tooling and community-driven contributions (Transformers, Diffusers, Datasets, etc.)

- Spaces: browser-based apps to demo and test AI applications without heavy setup

- Enterprise offerings with security, access controls, SSO, and dedicated support

- Easy publishing and collaboration workflows for ML artifacts

- In-browser and remote inference capabilities with scalable deployment options

- Strong emphasis on interoperability and ecosystem integration