IntelliBar

Office & Productivity

Introduction

IntelliBar is a Mac app that gives you access to multiple AI models, both cloud-based and local, from a single interface.

IntelliBar Product Information

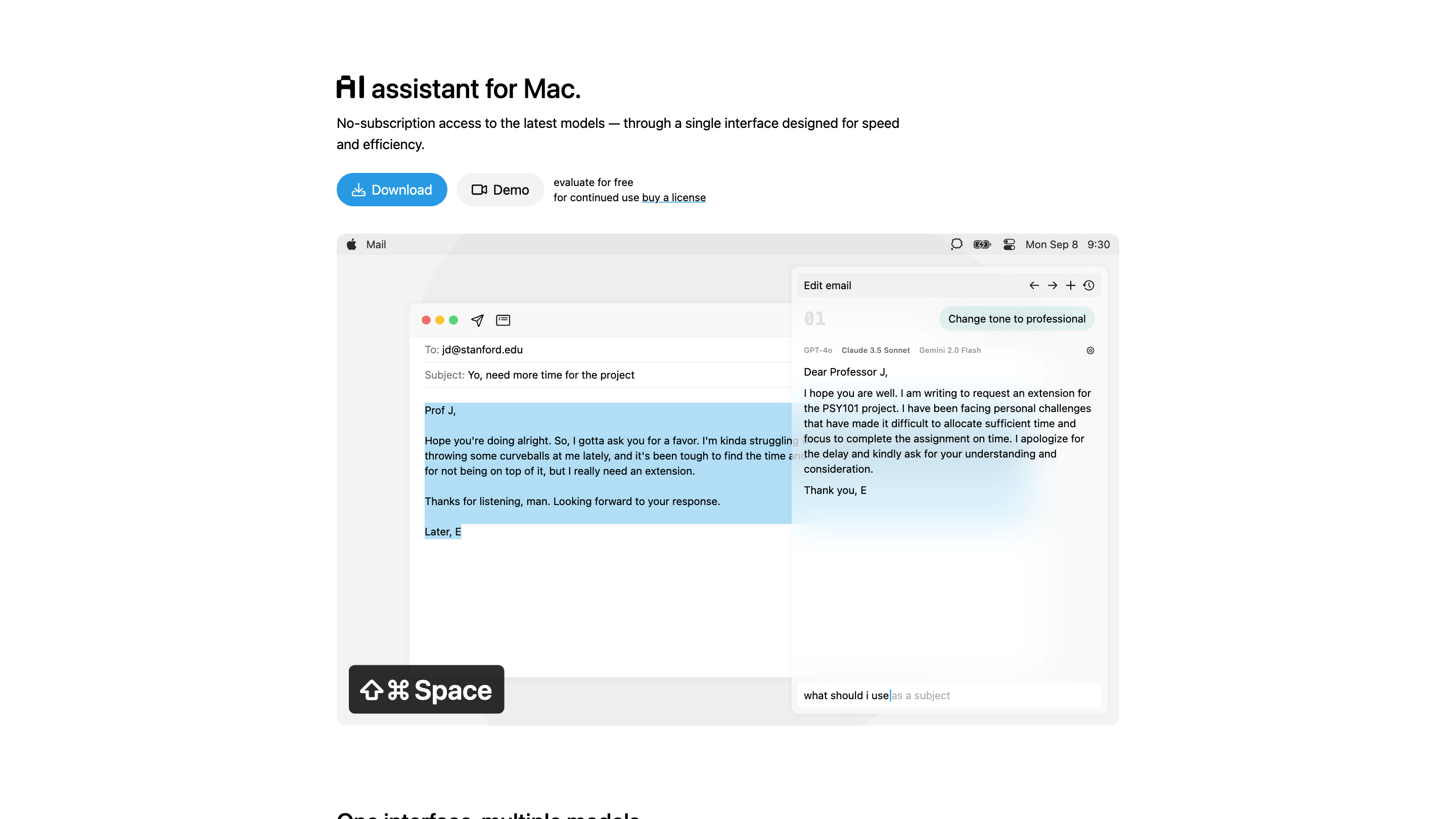

IntelliBar – AI assistant for Mac is a single-interface AI toolkit that lets you access the latest models (GPT, Claude, Gemini, and more) without a subscription. It supports both cloud models and local models run through Ollama, all from one app. The platform emphasizes speed, privacy, and simplicity, and the app itself is a one-time purchase. You can connect to your preferred AI providers and pay them only for what you actually use, or run local models at no extra cost.

How IntelliBar Works

- One unified interface for interacting with multiple AI models (GPT-4o, Claude, Gemini, etc.).

- Access to both cloud-based models and local models (via Ollama) from a single app.

- Prompts are sent to the chosen model provider directly; data does not flow through intermediaries.

- Local models keep prompts and results on your device, enhancing privacy.

- You pay a fixed, one-time price for the app and usage-based fees for cloud models; there is no ongoing subscription.
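To make the local path concrete, here is a minimal Python sketch of sending a prompt to a locally running Ollama server through its REST API. It assumes a default Ollama install listening on `localhost:11434` and uses the `/api/generate` endpoint; it is an illustration of how local models keep prompts on the device, not IntelliBar's own code. The helper names `build_ollama_request` and `ask_local_model` are hypothetical.

```python
import json
import urllib.request

# Assumption: a stock Ollama install serving its REST API on port 11434.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_ollama_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming generate request for a local Ollama server."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        OLLAMA_URL,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_local_model(model: str, prompt: str) -> str:
    """Send the prompt to Ollama and return the generated text.

    The request targets localhost only, so the prompt never leaves the machine.
    """
    req = build_ollama_request(model, prompt)
    with urllib.request.urlopen(req, timeout=120) as resp:
        payload = json.loads(resp.read().decode("utf-8"))
    # With "stream": false, Ollama returns the full completion in "response".
    return payload["response"]
```

Usage, assuming a model such as `llama3` has already been pulled with Ollama: `print(ask_local_model("llama3", "Summarize this paragraph: ..."))`. Splitting request construction from sending keeps the request shape easy to inspect without a running server.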

How to Use IntelliBar

- Install IntelliBar on your Mac.

- Connect to your preferred AI providers (such as OpenAI, Anthropic, or Google) or switch to local models via Ollama.

- Type prompts and run them against the selected model. Compare responses across multiple models if needed.

- Pay providers only for the cloud-model usage you incur, or use local models to avoid usage costs entirely.
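Comparing responses amounts to sending one prompt to several models and collecting the replies side by side. The Python sketch below assumes each model is wrapped in a hypothetical callable that takes a prompt and returns text (for example, the local Ollama helper or a function calling a cloud provider's API); it is not IntelliBar's actual implementation.

```python
from typing import Callable, Dict

# Hypothetical backend shape: takes a prompt, returns the model's reply.
Backend = Callable[[str], str]


def compare_models(prompt: str, backends: Dict[str, Backend]) -> Dict[str, str]:
    """Run the same prompt against every selected backend and collect the replies.

    A failing backend is recorded as an error string instead of aborting
    the whole comparison.
    """
    results: Dict[str, str] = {}
    for name, ask in backends.items():
        try:
            results[name] = ask(prompt)
        except Exception as exc:  # e.g. a network error or a missing API key
            results[name] = f"error: {exc}"
    return results
```

The calls run one after another for simplicity; a real app would likely issue them concurrently (for example with `concurrent.futures.ThreadPoolExecutor`) so the slowest model does not hold up the others.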

Pricing & Licensing

- 100% user-funded: no ads, no investors, no data selling.

- No subscription: one-time purchase for the app.

- Connect directly to paid model providers and pay them for usage, or use local models at no additional cost.

- Remote model pricing is set by each provider and varies by model (e.g., GPT-4o, Claude, Gemini).
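Cloud providers typically bill API usage per token, often with separate rates for input and output tokens. The Python sketch below shows that pay-per-use arithmetic; the `TokenPrice` type and the rates in the example are hypothetical placeholders, not actual prices for GPT-4o, Claude, or Gemini.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TokenPrice:
    """Per-million-token prices in USD (placeholder values, not real rates)."""
    input_per_million: float
    output_per_million: float


def request_cost(price: TokenPrice, input_tokens: int, output_tokens: int) -> float:
    """Cost in USD of a single request under simple per-token billing."""
    return (input_tokens * price.input_per_million
            + output_tokens * price.output_per_million) / 1_000_000


# Hypothetical rates: $2 per million input tokens, $8 per million output tokens.
example_price = TokenPrice(input_per_million=2.0, output_per_million=8.0)
cost = request_cost(example_price, input_tokens=1500, output_tokens=500)
```

With these placeholder rates, a request with 1,500 input tokens and 500 output tokens costs (1500 × 2 + 500 × 8) / 1,000,000 = $0.007. Summing this over your requests gives the usage bill, while local models add nothing to it.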

Privacy & Data Handling

- Chats with remote models are sent directly to the model provider; IntelliBar does not route data through intermediaries.

- Local models keep data on your device; no data leaves your machine unless you choose to send it to a provider.
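Whether a prompt stays on your machine comes down to where the request is sent. As a rough illustration (not IntelliBar's own logic), the Python sketch below treats an endpoint as on-device only if its host is `localhost` or a loopback IP address; the function name `stays_on_device` is hypothetical.

```python
import ipaddress
from urllib.parse import urlparse


def stays_on_device(endpoint: str) -> bool:
    """Return True if the endpoint's host is a loopback address.

    Requests to such hosts are handled on this machine; anything else,
    such as a cloud provider's API, leaves the device.
    """
    host = urlparse(endpoint).hostname
    if host is None:
        return False
    if host == "localhost":
        return True
    try:
        return ipaddress.ip_address(host).is_loopback
    except ValueError:  # a DNS name other than localhost
        return False
```

This is a heuristic: it trusts that `localhost` resolves to the loopback interface, which holds on a standard macOS setup but can be changed in the hosts file.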

Core Features

- Single app to access multiple AI models (GPT-4o, Claude, Gemini, etc.)

- Support for local models via Ollama for offline/private usage

- No subscription model; one-time purchase for the app

- Pay-per-use for cloud models; no usage limits when using local models

- Prompts go directly from the app to the chosen provider, with no intermediary that could leak your data

- Clear, upfront pricing for the app plus transparent per-use costs for cloud models

- Lightweight, productivity-focused UI designed to help you stay in flow

- Cross-provider comparison: run prompts across multiple models in one session

- Privacy-first: data remains on-device unless you opt to use a remote model