Label Studio


Introduction

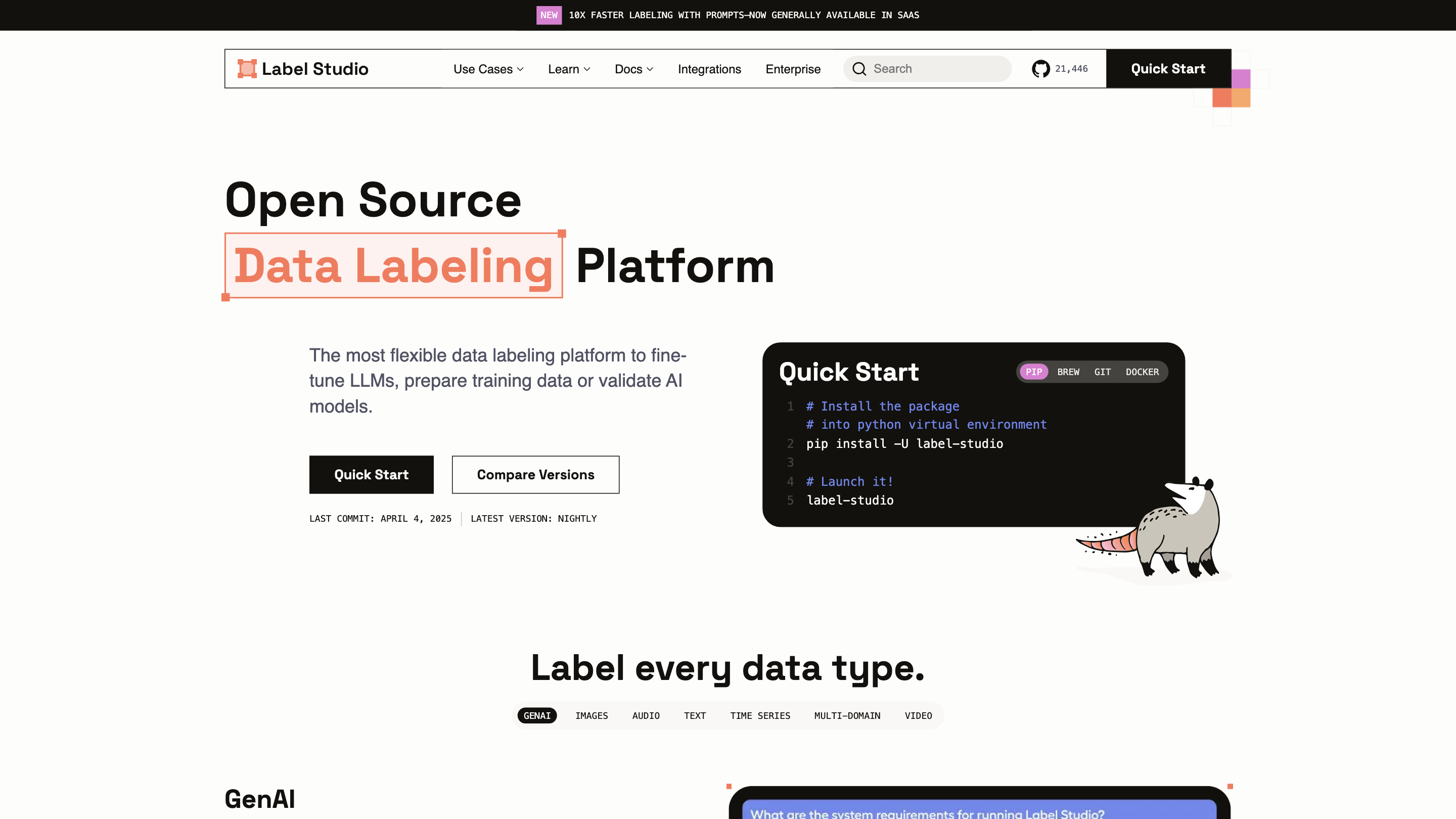

Label Studio: an open-source tool for labeling many types of data for machine learning models.

Label Studio Product Information

Label Studio is a flexible, open-source data labeling platform designed to help you fine-tune LLMs, prepare training data, or validate AI models. It supports a wide range of data types and labeling tasks, and offers ML-assisted labeling, pipeline integrations, and scalable collaboration across projects. The platform emphasizes configurability, extensibility, and the ability to integrate labeling directly into your ML/AI workflows.

Key Capabilities

- Multi-type data labeling: images, audio, text, time series, video, and more (GenAI-ready overlays for images, audio, text, time series, and multi-domain data)

- ML-assisted labeling: leverage model predictions to accelerate labeling with human-in-the-loop review

- Rich labeling tasks: classification, object detection (boxes, polygons, circles, keypoints), semantic segmentation, OCR, transcription, named entity recognition, Q&A, sentiment analysis, timeline/event labeling, and more

- Collaboration & project management: multiple projects, multi-user support, templates, and scalable workflows

- Integrations: Webhooks, Python SDK, and API to authenticate, import tasks, manage predictions, and connect to your ML/AI pipeline

- Data management: connect cloud storage (Amazon S3, Google Cloud Storage), prepare and filter datasets, and explore them in the Data Manager

- Flexible deployment: quick start with Python, Docker, or Docker Compose; supports local and scalable deployments

- Extensibility: customizable tags and reusable labeling templates for repeated workflows

- Evaluation & fine-tuning: use labeled data for supervised fine-tuning, RLHF, or evaluating model outputs
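As a rough illustration of the ML-assisted labeling and API capabilities listed above, the sketch below builds two tasks with model predictions attached as pre-annotations and prepares (but does not send) an import request. The server address, token, project ID, model name, and the `sentiment`/`text` tag names are all placeholders chosen for this example, and the `Token` authorization scheme is an assumption that may not match every Label Studio version or token type.

```python
import json
import urllib.request

# Hypothetical values for illustration only.
BASE_URL = "http://localhost:8080"
API_TOKEN = "YOUR_API_TOKEN"
PROJECT_ID = 1


def make_task(text, label, score):
    """Build one task with a model prediction attached as a pre-annotation."""
    return {
        "data": {"text": text},
        "predictions": [
            {
                "model_version": "demo-model-v1",  # hypothetical model name
                "score": score,
                "result": [
                    {
                        # These names must match the tags in the project's
                        # labeling configuration (assumed here).
                        "from_name": "sentiment",
                        "to_name": "text",
                        "type": "choices",
                        "value": {"choices": [label]},
                    }
                ],
            }
        ],
    }


def build_import_request(tasks):
    """Prepare (but do not send) a POST request that imports the tasks."""
    return urllib.request.Request(
        url=f"{BASE_URL}/api/projects/{PROJECT_ID}/import",
        data=json.dumps(tasks).encode("utf-8"),
        headers={
            "Authorization": f"Token {API_TOKEN}",  # assumed auth scheme
            "Content-Type": "application/json",
        },
        method="POST",
    )


tasks = [
    make_task("Great product, works as advertised.", "Positive", 0.93),
    make_task("Stopped working after a week.", "Negative", 0.88),
]
request = build_import_request(tasks)
print(request.get_method(), request.full_url)
# To actually send it against a running server: urllib.request.urlopen(request)
```

Annotators would then see the predicted choice pre-selected and only need to confirm or correct it, which is the human-in-the-loop review step.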

Quick Start (Examples)

- Install via Python: `pip install -U label-studio`, then launch with `label-studio`.

- Docker: `docker run -it -p 8080:8080 -v <path>/mydata:/label-studio/data heartexlabs/label-studio:latest`, then visit http://localhost:8080/.

- Quick setup commands include migrations, static assets collection, and startup steps as documented in the quick start guide.

Supported Use Cases

- Computer Vision: image classification, object detection (boxes, polygons, circles), semantic segmentation, pre-labeling for faster labeling

- Audio & Speech: classification, speaker diarization, emotion recognition, transcription

- NLP & Documents: classification, named entity extraction, question answering, sentiment analysis, multi-label taxonomy (10,000+ classes)

- Time Series & Multi-Domain: event labeling, segmentation, time-series classification, and multi-domain data labeling

- Video: labeling and annotation workflows for video data

- RAG & Evaluation: evaluation of retrieval-augmented generation (RAG) outputs with human-in-the-loop scoring

How It Works

- Create projects and connect data sources (local files or cloud storage).

- Define labeling tasks using customizable templates and tags.

- Label data with built-in tools or leverage ML-assisted labeling to pre-label items.

- Review, refine, and export labeled data for model training or evaluation.

- Integrate with your ML/AI pipeline via API, SDK, or webhooks.
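The "define labeling tasks" step above is done with an XML labeling configuration made of tags. The sketch below shows a minimal sentiment-classification configuration and a small helper, written only for this example, that checks each control tag points at an existing object tag. The tag names `text` and `sentiment` and the three choice values are illustrative assumptions, and the check is far simpler than whatever validation Label Studio itself performs.

```python
import xml.etree.ElementTree as ET

# A minimal labeling configuration: one Text object tag and one Choices
# control tag that labels it. "$text" refers to the "text" key in task data.
LABELING_CONFIG = """
<View>
  <Text name="text" value="$text"/>
  <Choices name="sentiment" toName="text">
    <Choice value="Positive"/>
    <Choice value="Negative"/>
    <Choice value="Neutral"/>
  </Choices>
</View>
"""


def summarize_config(config_xml):
    """Return {control_name: {"labels_object": ..., "choices": [...]}}.

    Raises ValueError if a control tag's toName does not match any tag name.
    """
    root = ET.fromstring(config_xml)
    names = {el.get("name") for el in root.iter() if el.get("name")}
    summary = {}
    for el in root.iter():
        target = el.get("toName")
        if target is None:
            continue  # not a control tag
        if target not in names:
            raise ValueError(f"toName={target!r} does not match any tag name")
        summary[el.get("name")] = {
            "labels_object": target,
            "choices": [c.get("value") for c in el.findall("Choice")],
        }
    return summary


summary = summarize_config(LABELING_CONFIG)
print(summary)
```

The `name` and `toName` values matter beyond the UI: they are what the `from_name` and `to_name` fields in predictions and exported annotations refer to.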

Safety & Governance

- Supports structured labeling workflows to ensure traceability and reproducibility of labeled data.

- Designed for enterprise-grade collaboration and data governance across teams.

Core Features

- Multi-type data labeling: support for images, audio, text, time series, video, and more

- ML-assisted labeling: predictions to speed up labeling with human-in-the-loop review

- Rich labeling tools: classification, object detection (boxes, polygons, circles, keypoints), segmentation, OCR, transcription, QA, NER, sentiment, and more

- Prompt-driven templates and customizable tags to fit your workflow

- Data management: cloud storage integration (Amazon S3, Google Cloud Storage), dataset preparation, and advanced filtering

- Project & collaboration: multiple projects, users, and scalable labeling teams

- Pipeline integrations: Webhooks, Python SDK, and REST API for automation and integration

- Deployment choices: local, Docker, or cloud-ready deployments

- Evaluation & fine-tuning support: generate supervised data for fine-tuning, RLHF, and model evaluation
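To make the fine-tuning and evaluation use concrete, the sketch below converts a JSON-style export into `(text, label)` pairs suitable for supervised training. The sample export is hand-written for this example and trimmed to the fields the code reads; real exports carry more metadata. The `text` data key and `sentiment` control name are assumptions that must match the project's labeling configuration.

```python
# Hand-written sample in the general shape of a JSON export: a list of tasks,
# each with its input data and zero or more annotations.
SAMPLE_EXPORT = [
    {
        "id": 1,
        "data": {"text": "Great product, works as advertised."},
        "annotations": [
            {
                "was_cancelled": False,
                "result": [
                    {
                        "from_name": "sentiment",
                        "to_name": "text",
                        "type": "choices",
                        "value": {"choices": ["Positive"]},
                    }
                ],
            }
        ],
    },
    {
        "id": 2,
        "data": {"text": "Stopped working after a week."},
        "annotations": [
            {"was_cancelled": True, "result": []},  # skipped by the annotator
            {
                "was_cancelled": False,
                "result": [
                    {
                        "from_name": "sentiment",
                        "to_name": "text",
                        "type": "choices",
                        "value": {"choices": ["Negative"]},
                    }
                ],
            },
        ],
    },
    {"id": 3, "data": {"text": "Not labeled yet."}, "annotations": []},
]


def to_training_pairs(export, text_key="text", control="sentiment"):
    """Collect (text, label) pairs from the first non-cancelled annotation."""
    pairs = []
    for task in export:
        for annotation in task.get("annotations", []):
            if annotation.get("was_cancelled"):
                continue
            for region in annotation.get("result", []):
                if region.get("from_name") == control and region.get("type") == "choices":
                    for label in region["value"]["choices"]:
                        pairs.append((task["data"][text_key], label))
            break  # use only the first submitted annotation per task
    return pairs


pairs = to_training_pairs(SAMPLE_EXPORT)
for text, label in pairs:
    print(f"{label}\t{text}")
```

Taking only the first submitted annotation is a simplification; with several annotators per task, a majority vote or an agreement threshold would usually be a better choice.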