LiteLLM


Introduction

LiteLLM is an open-source library that simplifies LLM completion and embedding calls.

LiteLLM Product Information

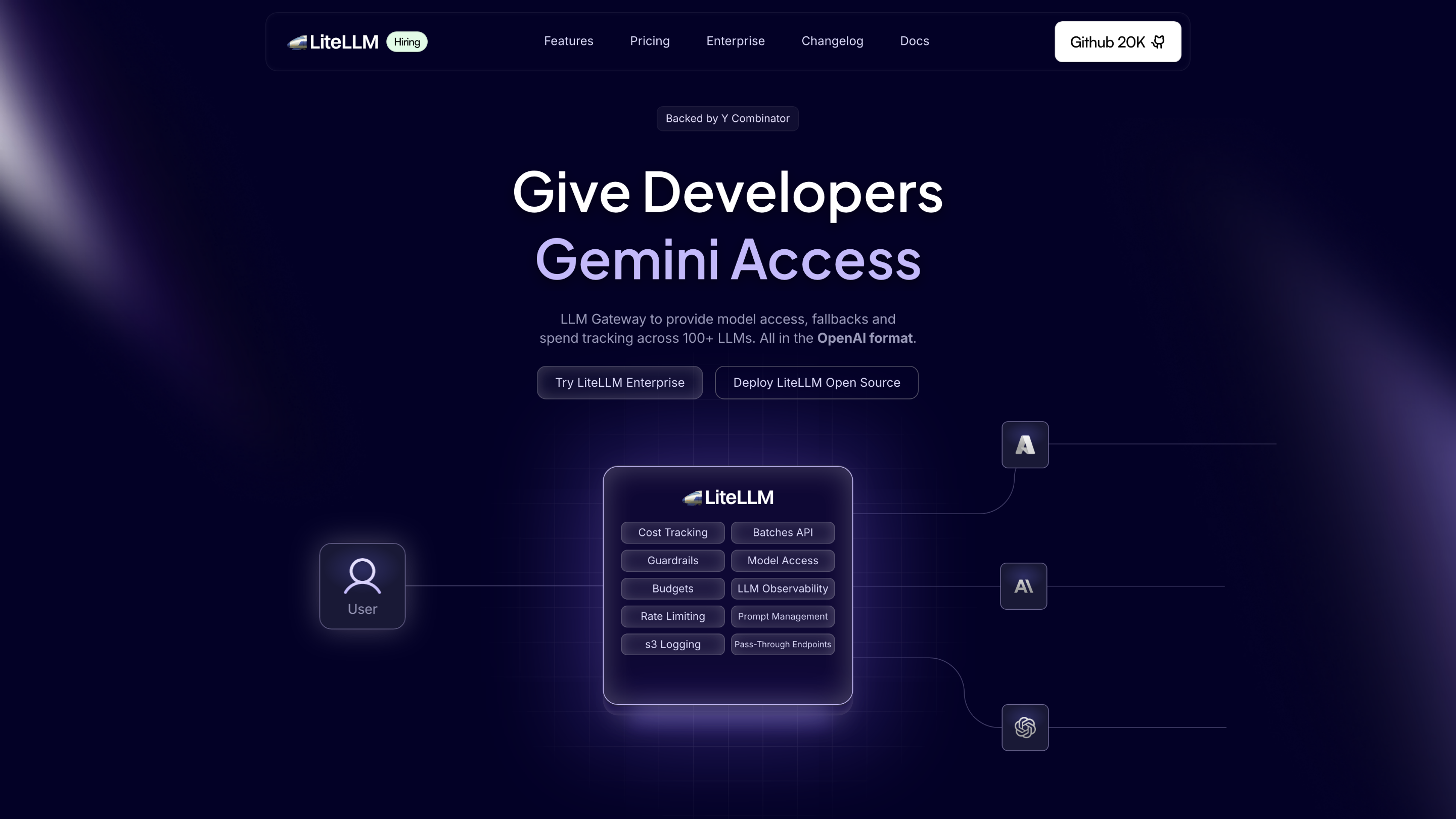

LiteLLM: LLM access, spend tracking, and fallbacks across 100+ providers

LiteLLM is a platform that simplifies model access, spend tracking, and fallback handling across 100+ LLM providers, all exposed through an OpenAI-compatible format. It is available in Open Source and Enterprise offerings and is designed to help platform teams give developers day-0 access to the latest models while centralizing cost tracking and governance.

Key Capabilities

- Unified LLM access to 100+ providers (OpenAI-compatible)

- Accurate spend tracking and budgeting across providers (OpenAI, Azure, Bedrock, GCP, etc.)

- Tag-based spend attribution to keys, users, teams, and orgs

- Fallbacks across providers to improve reliability

- Prompt formatting support for HuggingFace models

- Logging and observability integrations (S3/GCS logging, Langfuse, OTEL, Prometheus)

- Configuration for rate limits, budgets, and guardrails

- Enterprise features: JWT Auth, SSO, audit logs, SLAs

- Availability as Open Source or Enterprise deployment (Cloud or Self-Hosted)

- Used in production by customers such as Netflix and Lemonade
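To make the spend tracking and tag-based attribution items above concrete, here is a minimal sketch in plain Python. It is not LiteLLM's implementation or API: the `SpendLedger` class, the model names, and the per-token prices are all hypothetical and exist only to illustrate how one request's cost can be attributed to a key, a user, a team, and any number of tags at once.

```python
from collections import defaultdict
from dataclasses import dataclass, field

# Hypothetical prices in USD per 1,000 tokens; real prices vary by provider and model.
PRICE_PER_1K_TOKENS = {
    "provider-a/model-x": {"input": 0.50, "output": 1.50},
    "provider-b/model-y": {"input": 0.25, "output": 1.00},
}


@dataclass
class SpendLedger:
    """Accumulates cost per (dimension, value) pair, e.g. ("team", "search")."""

    totals: dict = field(default_factory=lambda: defaultdict(float))

    def record(self, model, input_tokens, output_tokens, *, key, user, team, tags=()):
        """Price one request and attribute its full cost to every dimension."""
        price = PRICE_PER_1K_TOKENS[model]
        cost = (input_tokens * price["input"] + output_tokens * price["output"]) / 1000
        for dimension, value in (("key", key), ("user", user), ("team", team)):
            self.totals[(dimension, value)] += cost
        for tag in tags:
            self.totals[("tag", tag)] += cost
        return cost


ledger = SpendLedger()
ledger.record("provider-a/model-x", 2000, 1000,
              key="key-1", user="alice", team="search", tags=["prod"])
ledger.record("provider-b/model-y", 4000, 0,
              key="key-2", user="bob", team="search", tags=["batch"])
print(ledger.totals[("team", "search")])  # combined spend across both providers
```

Because every request is priced at record time, the same ledger can answer "what did this team spend?" regardless of which provider served each call.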

How LiteLLM Works

- Define access for developers and teams to a large catalog of LLM providers.

- Automatically track spend per key/user/team/org across all providers.

- Apply tag-based spend accounting and log usage to your storage (S3, GCS, etc.).

- Route requests through an OpenAI-compatible interface, falling back to another provider when one is down or rate-limited.

- Provide developers with transparent usage, budgets, and governance controls.
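The fallback step in the list above can be sketched as an ordered list of providers that is tried until one succeeds. This is an illustration under stated assumptions, not LiteLLM's routing code: `ProviderError`, `complete_with_fallbacks`, and the two stand-in provider functions are hypothetical names, and the messages use the OpenAI-style `role`/`content` shape.

```python
class ProviderError(Exception):
    """Raised by a provider callable when it is down or rate-limited."""


def complete_with_fallbacks(providers, messages):
    """Try each (name, call) pair in order and return the first successful reply."""
    errors = []
    for name, call in providers:
        try:
            return name, call(messages)
        except ProviderError as exc:
            # Remember why this provider failed, then move on to the next one.
            errors.append(f"{name}: {exc}")
    raise ProviderError("all providers failed: " + "; ".join(errors))


# Stand-in providers (hypothetical): the first always fails, the second echoes.
def flaky_primary(messages):
    raise ProviderError("429 rate limited")


def healthy_backup(messages):
    return {"role": "assistant", "content": "echo: " + messages[-1]["content"]}


messages = [{"role": "user", "content": "hello"}]
used, reply = complete_with_fallbacks(
    [("primary", flaky_primary), ("backup", healthy_backup)], messages
)
print(used, reply["content"])
```

Since every provider accepts and returns the same message shape, the caller does not need to know which provider ultimately answered.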

Use Cases

- Day-0 LLM access for large developer teams without manual provider integration

- Centralized cost governance across multi-provider LLM deployments

- Rapid integration of new models without refactoring inputs/outputs

- Enterprise-grade security and compliance with single sign-on and audit logs

Core Features

- OpenAI-compatible LLM access across 100+ providers

- Automatic spend tracking and attribution by key/user/team/org

- Tag-based budgeting and logging to S3/GCS and other storage

- Rate limits, budgets, and guardrails for safe usage

- Open-source and Enterprise deployment options (Cloud or Self-Hosted)

- JWT Auth, SSO, and audit logs for Enterprise

- Fallbacks across providers to maximize availability

- Observability integrations (Langfuse, LangSmith, OTEL, Prometheus)

- Prompt formatting support for HuggingFace models

- Customer references (Netflix, Lemonade, RocketMoney)
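As a rough illustration of the rate limit and budget controls listed above, the following sketch checks a per-key policy before a request is allowed through. It is an assumption-laden example rather than LiteLLM's configuration or API: the `KeyPolicy` class, its field names, and the sliding 60-second window are all hypothetical choices.

```python
import time
from collections import deque
from dataclasses import dataclass, field


@dataclass
class KeyPolicy:
    """Hypothetical per-key policy: a spend cap plus a requests-per-minute cap."""

    max_budget_usd: float
    max_requests_per_minute: int
    spent_usd: float = 0.0
    recent: deque = field(default_factory=deque)  # timestamps of admitted requests

    def check(self, now=None):
        """Return (allowed, reason); admitted requests are counted in the window."""
        now = time.monotonic() if now is None else now
        # Drop timestamps that have left the 60-second sliding window.
        while self.recent and now - self.recent[0] >= 60:
            self.recent.popleft()
        if self.spent_usd >= self.max_budget_usd:
            return False, "budget exceeded"
        if len(self.recent) >= self.max_requests_per_minute:
            return False, "rate limit exceeded"
        self.recent.append(now)
        return True, "ok"

    def charge(self, cost_usd):
        """Add the cost of a completed request to this key's running spend."""
        self.spent_usd += cost_usd


policy = KeyPolicy(max_budget_usd=1.0, max_requests_per_minute=2)
print(policy.check(now=0.0))
print(policy.check(now=1.0))
print(policy.check(now=2.0))  # third request inside the same minute
```

Checking the budget before the rate limit means a key that has exhausted its budget is rejected with the more actionable reason.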