LLM Token Counter

Introduction

Token counter for popular LLMs.

LLM Token Counter Product Information

All in one LLM Token Counter is a client-side tool that helps users manage token limits across a wide range of popular language models (e.g., GPT-3.5, GPT-4, Claude variants, Llama variants, and more). It runs entirely in your browser using Transformers.js, counting tokens locally to protect prompt privacy while providing fast, accurate estimates. The tool supports an extensive gallery of models and helps you maximize prompt efficiency and stay within model-specific token constraints.

How to Use All in one LLM Token Counter

- Choose a model. Select from the supported models gallery (e.g., GPT-4o, GPT-4, GPT-3.5 Turbo, Claude 3.x, Llama 3.x, etc.).

- Enter your prompt. Type or paste the text you intend to send to the model.

- View token count. The counter shows the number of tokens your prompt would consume for the selected model, helping you adjust as needed to stay within limits.

Disclaimer: Token counts are calculated client-side to ensure prompt privacy and prevent data leakage to servers.
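
Once you have a token count, checking it against a limit is simple arithmetic. The sketch below is illustrative only: `checkBudget`, its parameters, and the 4096-token window are hypothetical names and values chosen for the example, not part of the tool and not any specific model's real limit.

```typescript
// Result of comparing a prompt's token count against a context window.
interface BudgetCheck {
  remaining: number; // tokens left after the prompt and the reserved completion
  fits: boolean;     // true when nothing overflows the window
}

// Hypothetical helper: subtract the prompt and the tokens you want to keep
// free for the model's reply from the total context window.
function checkBudget(
  promptTokens: number,
  contextWindow: number,
  reservedForCompletion: number = 0
): BudgetCheck {
  const remaining = contextWindow - promptTokens - reservedForCompletion;
  return { remaining, fits: remaining >= 0 };
}

// Example with an assumed 4096-token window and 500 tokens reserved for the reply.
console.log(checkBudget(3500, 4096, 500)); // { remaining: 96, fits: true }
console.log(checkBudget(3700, 4096, 500)); // { remaining: -104, fits: false }
```

A negative `remaining` value tells you how many tokens to trim from the prompt, or from the reserved completion, before sending.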

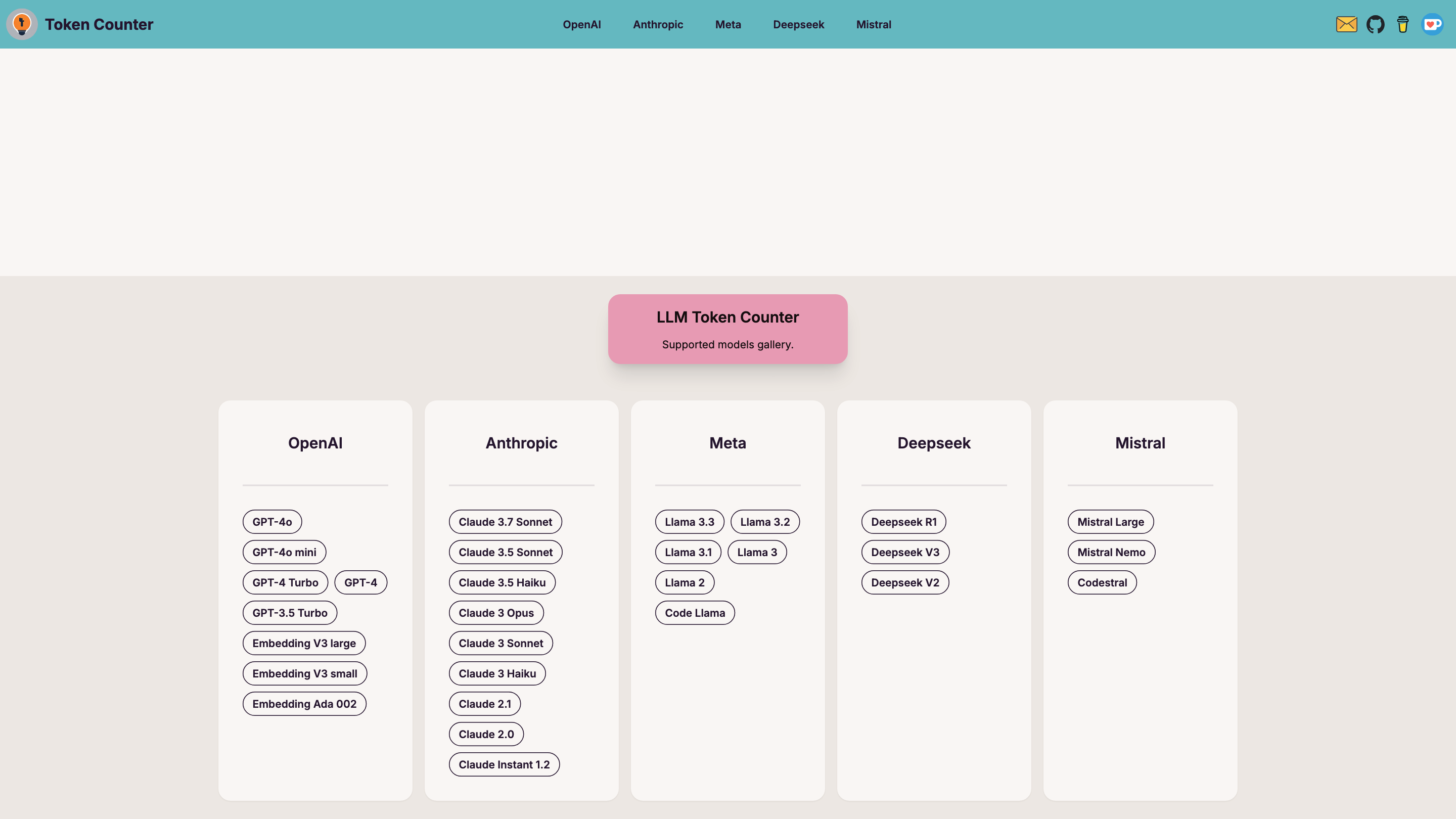

Supported Models

- OpenAI: GPT-4o, GPT-4, GPT-3.5 Turbo

- Anthropic: Claude 3.7, Claude 3.5, Claude 3 Opus, Claude 3 Sonnet, Claude 3 Haiku, Claude 2.1, Claude 2.0, Claude Instant 1.2

- Meta: Llama 4, Llama 3.3, Llama 3.2, Llama 3.1, Llama 3, Llama 2, Code Llama

- Mistral: Mistral, Mistral Large, Mistral Nemo

- DeepSeek: DeepSeek R1, DeepSeek V3, DeepSeek V2

- Codestral: Codestral

- Other: Embedding V3 large, Embedding V3 small, Embedding Ada 002

How It Works

- The tool uses Transformers.js to load tokenizers in your browser and computes token counts locally with a fast, Rust-based backend.

- It supports multiple models with distinct tokenization schemes, so you can plan prompts and completions accurately against each model's limits.

- No data leaves your device; prompts are never sent to a server during counting.
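
The counting step described above can be sketched roughly as follows. This is not the tool's actual source: `TokenizerLike`, `countTokens`, and `whitespaceStub` are hypothetical names, and the stub is only a stand-in so the example runs without downloading anything. In a real page, the tokenizer object would come from Transformers.js, as the comment shows; the package name and the `Xenova/gpt-4` tokenizer id are assumptions about one possible setup.

```typescript
// Minimal shape this sketch needs: an encode() that turns text into token ids.
// Transformers.js tokenizers provide an encode(text) method of this kind.
interface TokenizerLike {
  encode(text: string): number[];
}

// The token count is the number of ids the tokenizer produces for the text.
function countTokens(tokenizer: TokenizerLike, text: string): number {
  return tokenizer.encode(text).length;
}

// In a browser app the tokenizer might be loaded like this (assumed setup):
//   import { AutoTokenizer } from "@xenova/transformers";
//   const tokenizer = await AutoTokenizer.from_pretrained("Xenova/gpt-4");
//   const n = countTokens(tokenizer, promptText);

// Stand-in tokenizer for demonstration only: one "token" per whitespace-separated
// word. Real model tokenizers split text into subword units and give different counts.
const whitespaceStub: TokenizerLike = {
  encode: (text) =>
    text
      .split(/\s+/)
      .filter((word) => word.length > 0)
      .map((_, index) => index),
};

console.log(countTokens(whitespaceStub, "hello token world")); // 3
```

Because each model family uses its own vocabulary, the same text generally yields a different count per model, which is why the tokenizer is passed in rather than hard-coded.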

Safety and Privacy Considerations

- Client-side processing ensures prompt privacy and data confidentiality.

- No prompts are transmitted or stored by the service.

Core Features

- Client-side token counting for a wide range of models (GPT-4o, GPT-4, GPT-3.5 Turbo, Claude family, Llama family, Mistral, DeepSeek, Codestral, and embeddings)

- Immediate, model-specific token estimates to help manage prompts and completions within limits

- Large gallery of supported models with easy switching

- Privacy-first: all counting occurs in-browser with no data sent to servers