LLMWare.ai

Legal & Finance

Introduction

AI tools for financial, legal, and regulatory industries.

LLMWare.ai Product Information

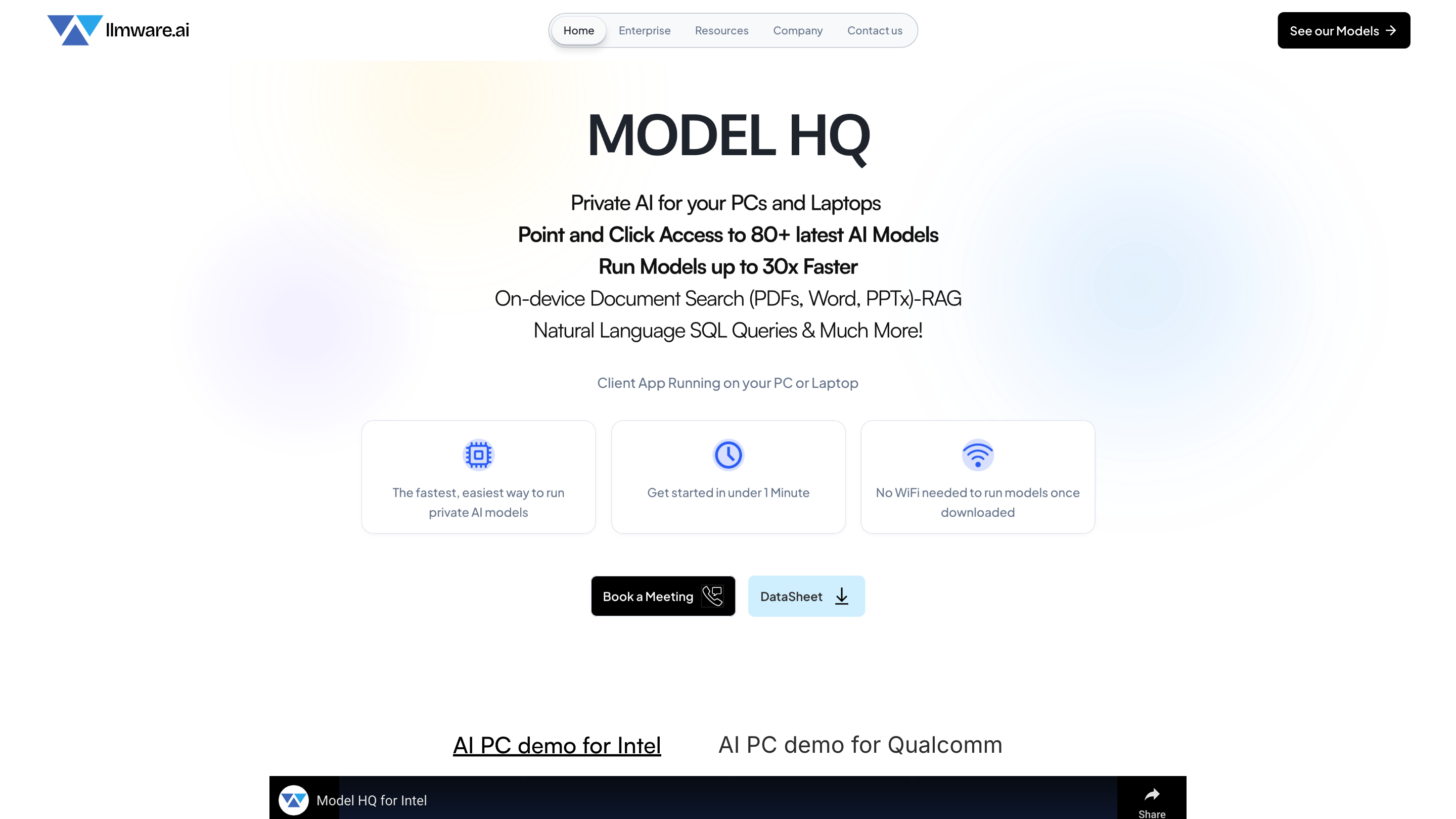

LLMWare.ai MODEL HQ is a private AI deployment platform for running AI workflows locally on your own hardware: PCs, AI PCs, data centers, and private cloud. It automatically optimizes model deployment for the available hardware, enabling secure, low-latency on-device inference and end-to-end AI management across multiple environments. The platform emphasizes privacy, ease of use, and scalable performance for enterprise AI apps, retrieval-augmented generation (RAG) workflows, contract analysis, SQL queries, and more, without requiring constant cloud connectivity.

How it works

- Install the MODEL HQ client (under 100 MB) on user devices to unlock 80+ models and on-device capabilities.

- Run AI workflows securely, locally, and at scale from a single unified platform.

- Automatically optimize model deployment for your hardware (including AI PCs) for fast, private inferencing.

- Use built-in support for on-device RAG, contract analysis, SQL queries, voice transcription search, and more.

- Deploy AI apps organization-wide with centralized control, monitoring, and lifecycle management.

Use Cases

- Private AI workloads that run on end-user devices with no WiFi connection ("zero-WiFi").

- Local inference for enterprise-scale AI apps with strong data governance.

- RAG-enabled search, document analysis, and contract intelligence.

- SQL-based AI querying, voice transcription, and knowledge extraction.

- Batch processing and lightweight micro-apps across teams.

Getting Started

- Download the MODEL HQ client agent to the user device.

- Install it to unlock 80+ models and on-device capabilities.

- Start building point-and-click AI workflows, deployed offline or in a private cloud.

Core Features

- On-device AI: Run AI models locally without requiring continuous internet access.

- Automatic hardware optimization: Tailors model deployment to your devices for efficiency.

- End-to-end AI management: Full lifecycle management of private AI apps.

- Enterprise-grade security: Local execution with built-in safety tools and privacy controls.

- Seamless deployment: Deploy workflows across PCs and IT environments with centralized control.

- On-device RAG, SQL queries, and voice transcription search integrated into workflows.

- Supports 80+ models and scalable inferencing for diverse workloads.

Why LLMWare.ai MODEL HQ

- Private data stays inside your security zone and is never exposed to external servers.

- Simplified deployment for enterprises needing secure AI at scale.

- Hardware-optimized performance to maximize efficiency and throughput.

- Comprehensive governance including safety, compliance, and auditing tools.

Safety and Compliance

- Built-in safety tools for explainability, PII filtering, toxicity and bias monitoring, and hallucination detection.

- Compliance-ready audit reporting and enterprise-grade governance.