Luma AI Video Generator

Introduction

AI video generation from text and images

Luma AI Video Generator Product Information

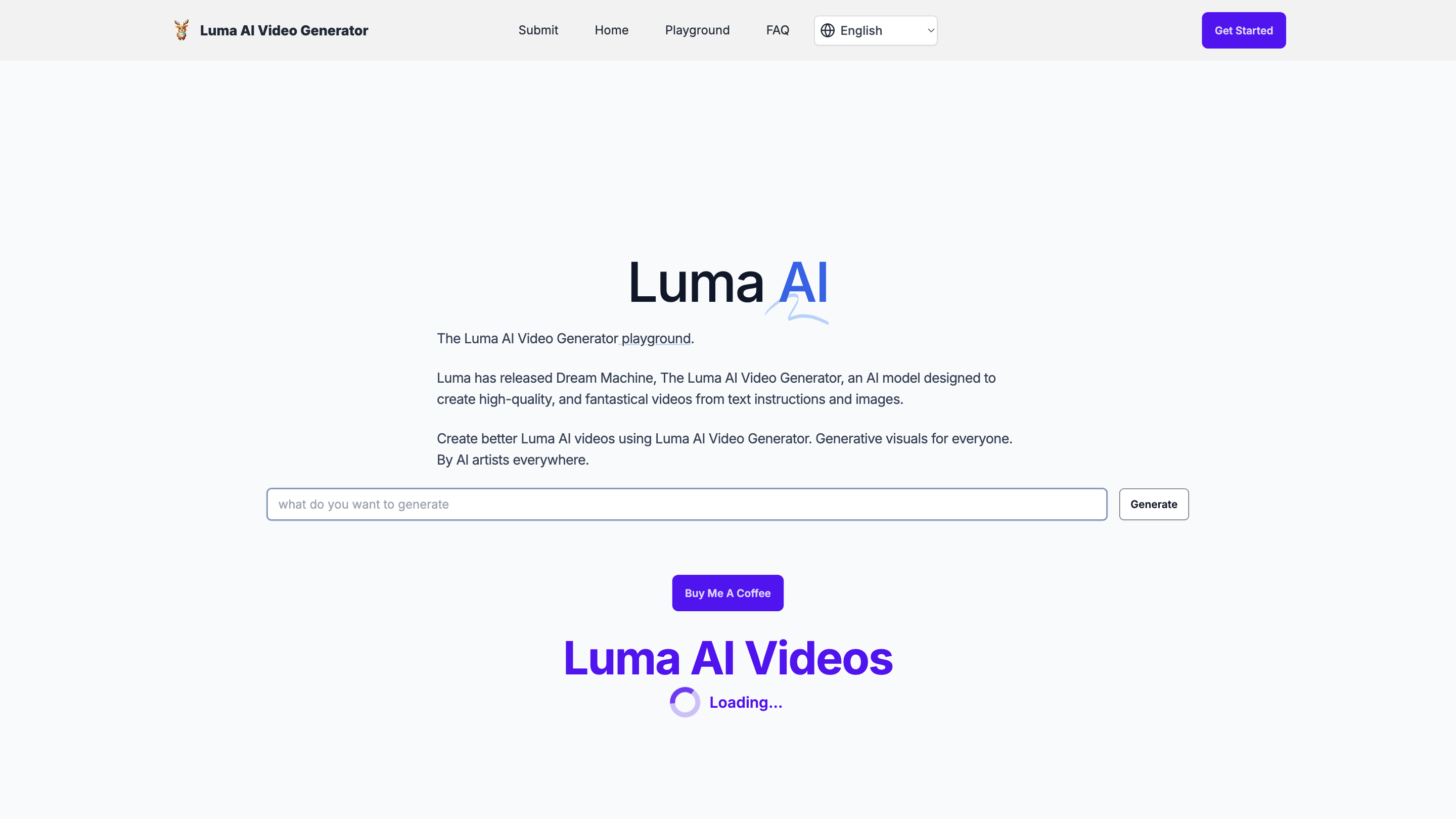

Luma AI Video Generator — Dream Machine

Luma AI Video Generator, powered by the Dream Machine model, is an AI tool that creates high-quality, realistic videos from text instructions and images. Aimed at AI artists and creators, it generates cinematic clips quickly, with physically plausible motion, and is built to scale.

What it does

- Converts textual descriptions and reference images into short, high-quality video clips.

- Emphasizes realistic motion, cinematic camera work, and believable physics.

- Supports rapid iteration by producing up to 120 frames (about 5 seconds) in ~120 seconds per run.

- Includes diverse camera movements and scene composition to match the emotion and content of the prompt.

- Uses a scalable, efficient transformer-based model trained directly on video data, which helps keep characters and physics consistent.
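The timing figures above imply a frame rate and a render-to-playback ratio that the listing does not state outright. The following is a minimal sketch of that arithmetic, using only the approximate numbers quoted above (120 frames, about 5 seconds of video, about 120 seconds per run). The function names are illustrative and not part of any Luma tooling.

```python
# Approximate figures quoted in the listing; they are not measured here.
FRAMES_PER_RUN = 120    # "up to 120 frames"
CLIP_SECONDS = 5.0      # "about 5 seconds"
RENDER_SECONDS = 120.0  # "~120 seconds per run"


def implied_fps(frames: int, clip_seconds: float) -> float:
    """Frame rate implied by a frame count and a clip duration."""
    return frames / clip_seconds


def render_to_playback_ratio(render_seconds: float, clip_seconds: float) -> float:
    """Seconds of rendering needed per second of finished video."""
    return render_seconds / clip_seconds


def seconds_per_frame(render_seconds: float, frames: int) -> float:
    """Average rendering time spent on each frame."""
    return render_seconds / frames


if __name__ == "__main__":
    print(implied_fps(FRAMES_PER_RUN, CLIP_SECONDS))                  # 24.0
    print(render_to_playback_ratio(RENDER_SECONDS, CLIP_SECONDS))     # 24.0
    print(seconds_per_frame(RENDER_SECONDS, FRAMES_PER_RUN))          # 1.0
```

Under these figures a clip plays at roughly 24 frames per second and takes about 24 times its own length to render, or about one second per frame. Because the source numbers are approximate, the results are estimates as well.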

How it works

- Input: Provide text prompts and/or reference images.

- Generation: Dream Machine analyzes the inputs and renders a video with realistic motion and camera work.

- Output: Downloadable video clips that can be used for concept ideation, storyboarding, or final renders.

Key benefits

- Fast generation (roughly 2 minutes per ~5-second clip).

- Realistic movements and cinematography for life-like results.

- Physical accuracy and consistent behavior across frames.

- Wide range of camera motions to convey mood and narrative.

- Scalable and efficient processing suitable for iterative workflows.

Usage notes

- There is no official public Luma AI Video Generator API yet, though unofficial third-party wrapper ("shell") APIs exist.

- The platform clarifies licensing terms: free usage may come with quotas and usage restrictions; commercial use requires appropriate licensing.

- The service emphasizes that it is designed for content creation and exploration; users should ensure compliance with copyright and consent where applicable.

How to Use Luma AI Video Generator

- Provide a text description of the scene you want to generate.

- Optionally attach reference images to guide visuals, characters, or objects.

- Submit the prompt to render, then wait approximately 2 minutes for a ~5-second clip (up to 120 frames).

- Preview, iterate, and download the result.
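Because each pass through the steps above costs about 2 minutes of rendering, it can help to estimate how many iterations fit into a working session. The sketch below assumes runs are submitted one at a time with no queue delay, which may not hold on the free tier. The `review_seconds` parameter is a hypothetical allowance for previewing a clip and adjusting the prompt, not a figure from the listing.

```python
def runs_per_session(
    session_minutes: float,
    render_seconds: float = 120.0,  # ~2 minutes per run, as quoted in the listing
    review_seconds: float = 0.0,    # hypothetical time to preview and edit the prompt
) -> int:
    """Estimate how many sequential render-and-review cycles fit in a session."""
    if render_seconds <= 0:
        raise ValueError("render_seconds must be positive")
    if session_minutes < 0 or review_seconds < 0:
        raise ValueError("durations must be non-negative")
    cycle_seconds = render_seconds + review_seconds
    # Only complete cycles count, so use floor division.
    return int((session_minutes * 60) // cycle_seconds)


if __name__ == "__main__":
    print(runs_per_session(30))                      # 15
    print(runs_per_session(30, review_seconds=60))   # 10
```

With a one-minute review between runs, a 30-minute session allows roughly 10 iterations rather than 15, so review time can weigh on the total almost as much as rendering does.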

Features

- High-quality, realistic video generation from text and images

- Fast generation cycle suitable for rapid iteration (approx. 120 seconds per 5-second clip)

- Realistic motion, cinematography, and dramatic scene composition

- Physical accuracy and consistent character/object interactions

- Diverse camera movements and framing options

- Scalable transformer-based model trained on video data

- Free access with quotas; paid plans may remove queuing and offer commercial licenses

- Downloadable outputs for personal or professional use

Safety and Legal Considerations

- Respect copyright, consent, and licensing when generating and using videos.

- The platform notes that there is no official API yet and that unofficial third-party wrapper ("shell") APIs may exist; verify their terms before integration or distribution.