OmniBot

Introduction

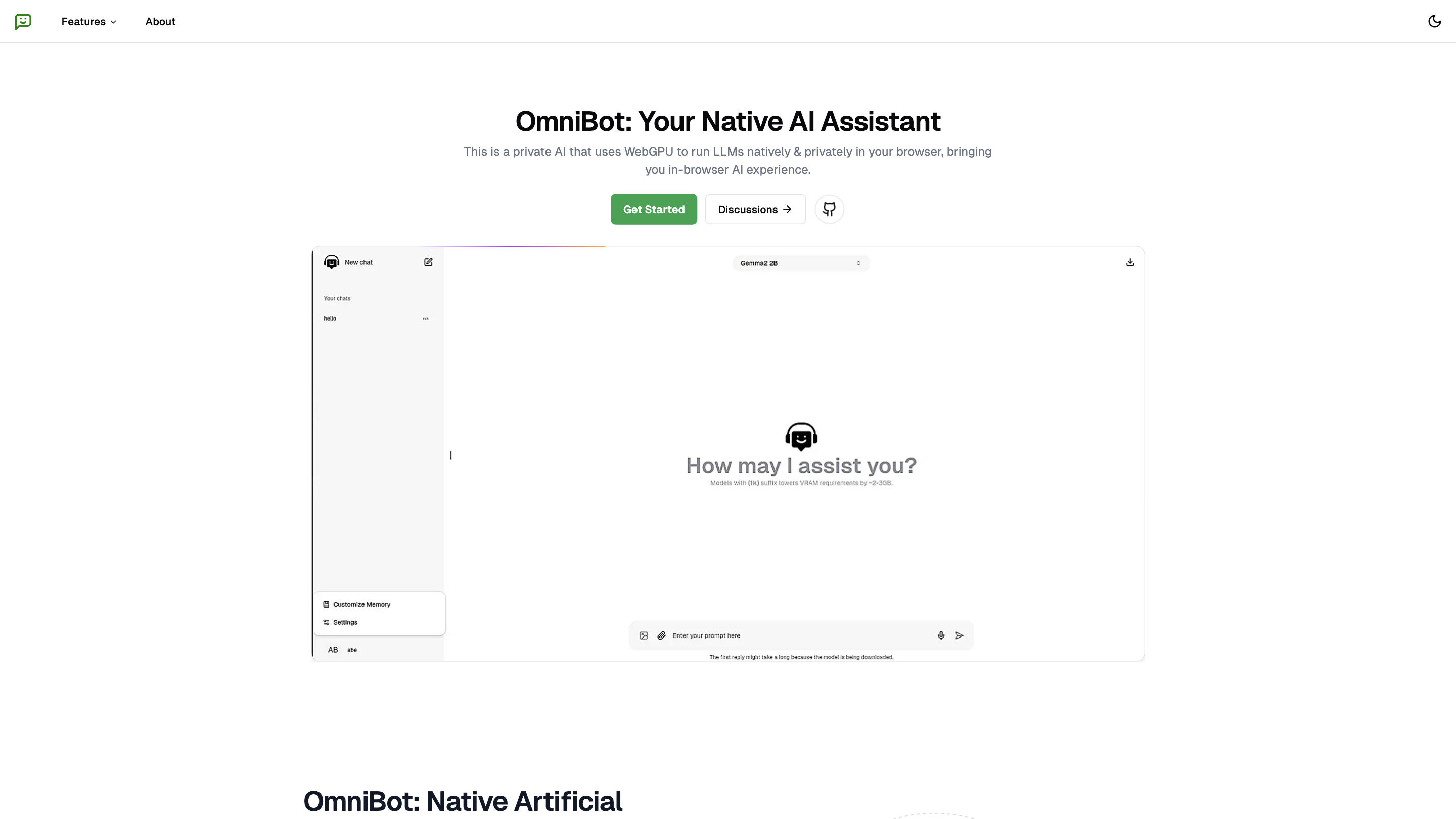

A browser-native AI assistant that works offline and keeps your data private.

OmniBot Product Information

OmniBot is a private, in-browser AI assistant that runs large language models (LLMs) locally using WebGPU. All AI computation happens on the user's device, and no data is sent to external servers. After the initial model download, OmniBot works without an internet connection. The platform emphasizes native in-browser integration, custom memory, and offline use.

Key highlights:

- In-browser, offline AI: All AI models run locally on the user’s hardware for privacy and offline use.

- WebGPU powered: Leverages WebGPU-enabled hardware acceleration to run LLMs efficiently in the browser.

- Custom memory: Ability to add custom instructions or memory to personalize responses.

- Tech stack: Built with Hugging Face, LangChain, WebLLM, TypeScript, Next.js, and open-source multilingual AI models.

- GPU requirement: A GPU with sufficient memory is recommended (e.g., 6 GB for 7B models, 3 GB for 3B models).

- Accessibility: Supports offline chat after model download; mobile devices with WebGPU support can use OmniBot in the same way as PCs.

- Output formats: Generates and saves chat messages in JSON or Markdown format.

How OmniBot works

- Model download: Users download a model once to enable offline use.

- Local inference: All subsequent LLM inference runs locally in-browser on the user’s device.

- Personalization: Custom memory support allows tailoring responses to user preferences.

- Interoperability: Utilizes LangChain and other frameworks to integrate LLMs into apps.
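The personalization step can be pictured as prepending the user's custom memory to each conversation. The following is a minimal sketch, assuming an OpenAI-style list of role/content messages; the `ChatMessage` type, the `buildMessages` helper, and the wording of the system message are hypothetical and are not taken from OmniBot's code.

```typescript
// Hypothetical message shape; OmniBot's internal types are not documented here.
type Role = "system" | "user" | "assistant";

interface ChatMessage {
  role: Role;
  content: string;
}

// Build the message list for one turn: non-empty memory notes become a
// leading system message, followed by prior history and the new prompt.
function buildMessages(
  memory: string[],
  history: ChatMessage[],
  userPrompt: string,
): ChatMessage[] {
  const notes = memory.map((m) => m.trim()).filter((m) => m.length > 0);
  const system: ChatMessage[] =
    notes.length > 0
      ? [
          {
            role: "system",
            content:
              "Remember the following about the user:\n" +
              notes.map((n) => `- ${n}`).join("\n"),
          },
        ]
      : [];
  return [...system, ...history, { role: "user", content: userPrompt }];
}

// Example: one real memory note and one blank entry that is dropped.
const messages = buildMessages(
  ["Answer in British English", "  "],
  [],
  "Summarise WebGPU in one sentence.",
);
```

The resulting array could then be handed to whichever local inference engine is in use; because the memory is only text in the prompt, it never needs to leave the device.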

What you can do with OmniBot

- In-browser AI conversations without server round-trips

- Offline operation after initial model download

- Customizable memory and instructions for personalized interactions

- Multilingual model support and open-source components

- Generate and save chat transcripts in JSON or Markdown
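As an illustration of the JSON and Markdown export mentioned above, here is a small sketch of how a transcript might be serialized. The `ChatMessage` shape and the Markdown layout (bold role label, horizontal rule between turns) are assumptions chosen for the example, not OmniBot's actual export format.

```typescript
// Hypothetical transcript entry; the real export schema may differ.
interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

// Serialize the transcript as pretty-printed JSON.
function toJson(messages: ChatMessage[]): string {
  return JSON.stringify(messages, null, 2);
}

// Serialize the transcript as Markdown: a bold role label per turn,
// with turns separated by a horizontal rule.
function toMarkdown(messages: ChatMessage[]): string {
  return messages
    .map((m) => `**${m.role}:**\n\n${m.content}`)
    .join("\n\n---\n\n");
}

const transcript: ChatMessage[] = [
  { role: "user", content: "Hello" },
  { role: "assistant", content: "Hi! How can I help?" },
];

const asJson = toJson(transcript);
const asMarkdown = toMarkdown(transcript);
```

JSON keeps the transcript machine-readable for re-import or processing, while Markdown is easier to read or paste into notes.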

Safety and requirements

- Requires a WebGPU-compatible GPU and enough memory for the selected model size.

- Privacy-centric: data is processed locally, and AI interactions are not stored on a server.
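The memory guidance (6 GB for 7B models, 3 GB for 3B models) can be turned into a simple lookup. This is a sketch only: `largestModelFor` is a hypothetical helper, the thresholds are the figures quoted in this document, and the available GPU memory is assumed to be supplied by the caller (for example, from the GPU's specifications).

```typescript
// Thresholds from the document's guidance: GPU memory in GB per model size.
const MIN_GPU_MEMORY_GB = { "7B": 6, "3B": 3 } as const;
type ModelSize = keyof typeof MIN_GPU_MEMORY_GB;

// Return the largest model size whose recommended memory fits,
// or null if even the 3B guidance is not met.
function largestModelFor(gpuMemoryGb: number): ModelSize | null {
  if (gpuMemoryGb >= MIN_GPU_MEMORY_GB["7B"]) return "7B";
  if (gpuMemoryGb >= MIN_GPU_MEMORY_GB["3B"]) return "3B";
  return null;
}

const choiceFor8Gb = largestModelFor(8);
const choiceFor4Gb = largestModelFor(4);
const choiceFor2Gb = largestModelFor(2);
```

Treat the result as a starting point rather than a guarantee; actual memory use also depends on the specific model and context length.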

How to Use OmniBot

- Check compatibility and hardware requirements.

- Download a supported model (e.g., 7B or 3B variants).

- Open OmniBot in a WebGPU-capable browser; once the model has downloaded, it can be used offline.

- Optional: Add custom memory/instructions for personalized responses.

- Save chats as JSON or Markdown.
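The compatibility check in the first step can be approximated with the standard WebGPU entry point, `navigator.gpu.requestAdapter()`. The sketch below is not OmniBot's own check; `hasWebGpu`, `GpuLike`, and `NavigatorLike` are hypothetical names, and the small structural interfaces exist only so the example compiles without WebGPU type definitions.

```typescript
// Minimal structural types standing in for the browser's WebGPU typings.
interface GpuLike {
  requestAdapter(): Promise<object | null>;
}

interface NavigatorLike {
  gpu?: GpuLike;
}

// Resolve to true only if the WebGPU API is exposed and an adapter is available.
async function hasWebGpu(nav: NavigatorLike): Promise<boolean> {
  if (!nav.gpu) return false; // browser does not expose WebGPU
  const adapter = await nav.gpu.requestAdapter();
  return adapter !== null && adapter !== undefined; // no adapter: no usable GPU
}

// In a browser, this could be called as:
//   hasWebGpu(navigator as NavigatorLike).then((ok) => { /* show or hide a warning */ });
```

Passing the navigator object in as a parameter keeps the function easy to exercise outside a browser with a stub.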

What’s Included

- In-browser, offline LLM inference using WebGPU

- Local-only data processing for privacy

- Custom memory and instructions support

- Multilingual, open-source model ecosystem

- Next.js-based frontend with TypeScript

- Integration-ready via LangChain and related tools

- GPU-aware performance guidance (6 GB for 7B models, 3 GB for 3B models)

- JSON/Markdown export of chat transcripts