Open Source AI Gateway

Introduction

Configurable API gateway for multiple LLM providers

Open Source AI Gateway Product Information

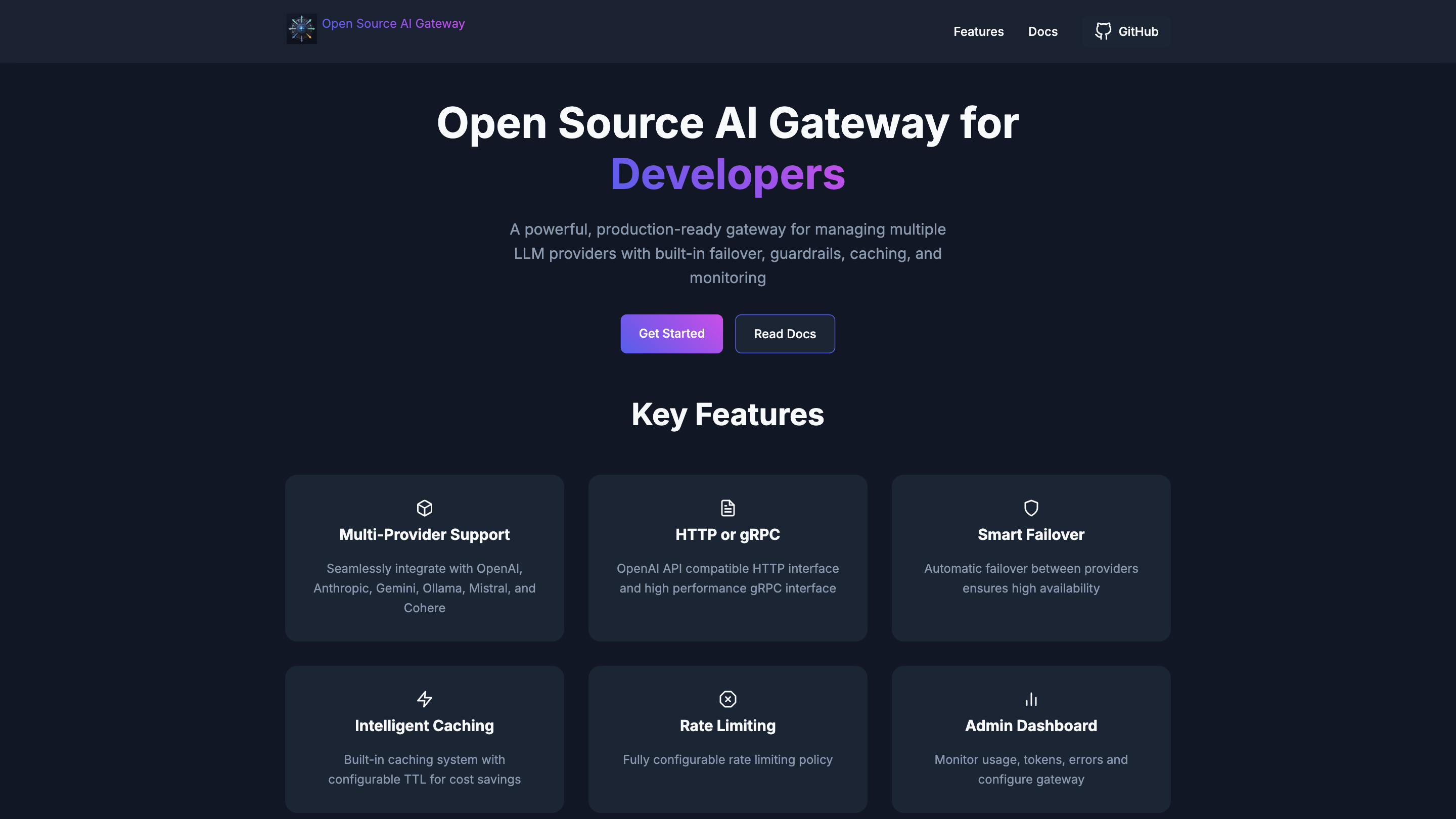

Open Source AI Gateway is a production-ready gateway that manages multiple LLM providers with built-in failover, guardrails, caching, and monitoring. It integrates with OpenAI, Anthropic, Gemini, Ollama, Mistral, and Cohere, and exposes two interfaces: an HTTP interface compatible with the OpenAI API and a gRPC interface.

Key Features

- Multi-Provider Support: Seamlessly integrate with OpenAI, Anthropic, Gemini, Ollama, Mistral, and Cohere for flexible provider choice and redundancy

- HTTP or gRPC Interfaces: OpenAI API-compatible HTTP interface and high-performance gRPC interface

- Smart Failover: Automatic failover between providers to ensure high availability

- Intelligent Caching: Built-in caching with configurable TTL for cost savings and faster responses

- Rate Limiting: Fully configurable rate limiting policies to protect backends

- Admin Dashboard: Monitor usage, tokens, and errors, and configure the gateway

- Content Guardrails: Configurable content filtering and safety measures

- Enterprise Logging: Integrations with Splunk, Datadog, and Elasticsearch for centralized observability

- System Prompt Injection: Intercept and inject system prompts for all outgoing requests

Getting Started

- Configure

- Create a Config.toml file with your API configuration

- Example snippet:

      [openAIConfig]
      apiKey = "Your_API_Key"
      model = "gpt-4"
      endpoint = "https://api.openai.com"

- Run

- Start the container with your configuration mounted:

      docker run -p 8080:8080 -p 8081:8081 -p 8082:8082 \
        -v "$(pwd)/Config.toml:/home/ballerina/Config.toml" \
        chintana/ai-gateway:v1.2.0

- Use

- Example request:

      curl -X POST http://localhost:8080/v1/chat/completions \
        -H "Content-Type: application/json" \
        -H "x-llm-provider: openai" \
        -d '{"messages": [{"role": "user", "content": "When will we have AGI? In 10 words"}]}'
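The same request can be issued from application code. The sketch below uses only the Python standard library and mirrors the curl example: the URL, the `x-llm-provider` header, and the message payload come from that example, while the helper names `build_chat_request` and `send` are hypothetical and not part of the gateway.

```python
import json
import urllib.request

# URL taken from the curl example; adjust if the gateway runs elsewhere.
GATEWAY_URL = "http://localhost:8080/v1/chat/completions"


def build_chat_request(prompt, provider="openai", url=GATEWAY_URL):
    """Build (but do not send) the same POST request as the curl example."""
    body = json.dumps({"messages": [{"role": "user", "content": prompt}]})
    return urllib.request.Request(
        url,
        data=body.encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Selects the backing provider, as in the curl example.
            "x-llm-provider": provider,
        },
        method="POST",
    )


def send(request, timeout=30):
    """Send the request and return the decoded JSON response body."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

With the container running, `send(build_chat_request("When will we have AGI? In 10 words"))` should return the parsed response. Its exact shape is not documented here, so inspect it before relying on specific fields.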

How It Works

- Create a gateway configuration to connect to multiple LLM providers.

- The gateway routes requests to the configured provider(s) via HTTP or gRPC.

- Built-in failover automatically switches to a healthy provider if one fails.

- The caching layer stores frequent responses to reduce costs and latency.

- The admin dashboard provides visibility into tokens, usage, and error metrics.

- System prompts can be injected or modified for all outgoing requests.

- Guardrails and content filtering help enforce safety and policy compliance.

- Enterprise logging integrations enable centralized monitoring and observability.
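The failover and caching steps above can be illustrated with a small, self-contained sketch. It is not the gateway's implementation: `TTLCache`, `ProviderError`, and `complete_with_failover` are hypothetical names, providers are modelled as plain callables, and the cache is keyed on the raw prompt, which is an assumption made for simplicity.

```python
import time


class ProviderError(Exception):
    """Raised by a provider callable when it cannot serve a request."""


class TTLCache:
    """Tiny in-memory cache whose entries expire after ttl_seconds."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl_seconds = ttl_seconds
        self.clock = clock  # injectable so expiry can be tested without sleeping
        self._entries = {}  # key -> (expires_at, value)

    def get(self, key):
        entry = self._entries.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self.clock() >= expires_at:
            del self._entries[key]  # drop the stale entry
            return None
        return value

    def put(self, key, value):
        self._entries[key] = (self.clock() + self.ttl_seconds, value)


def complete_with_failover(prompt, providers, cache):
    """Return (provider_name, response), trying providers in order.

    providers is an ordered list of (name, callable) pairs; a callable
    signals failure by raising ProviderError.
    """
    cached = cache.get(prompt)
    if cached is not None:
        return cached  # cache hit: no provider is called
    errors = []
    for name, call in providers:
        try:
            result = (name, call(prompt))
        except ProviderError as exc:
            errors.append(f"{name}: {exc}")
            continue  # fail over to the next provider
        cache.put(prompt, result)
        return result
    raise ProviderError("all providers failed: " + "; ".join(errors))
```

Checking the cache before any provider call is what yields the cost and latency savings: a repeated prompt within the TTL never reaches a backend, even when the primary provider is down.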

Safety and Compliance

- Configure rate limits, guardrails, and monitoring deliberately: rate limits protect the backends from abuse, guardrails filter unsafe content, and monitoring surfaces errors and unusual usage early.
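To make the rate-limiting idea concrete, here is a minimal token-bucket sketch. The source does not say which algorithm the gateway uses, so the token bucket is an assumed, commonly used technique, and the `TokenBucket` class with its parameters is hypothetical.

```python
import time


class TokenBucket:
    """Allow bursts up to `capacity`, refilled at `refill_per_second`."""

    def __init__(self, capacity, refill_per_second, clock=time.monotonic):
        self.capacity = float(capacity)
        self.refill_per_second = float(refill_per_second)
        self.clock = clock  # injectable so tests need not sleep
        self.tokens = float(capacity)  # start with a full bucket
        self.last_refill = clock()

    def allow(self, cost=1.0):
        """Return True and consume `cost` tokens if the request may proceed."""
        now = self.clock()
        elapsed = now - self.last_refill
        self.last_refill = now
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_second)
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False
```

A gateway would typically keep one bucket per client or API key and reject a request when `allow()` returns `False`. How rejections are reported is a deployment choice not covered here.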

Core Components

- Gateway runtime with multi-provider orchestration

- Caching layer for response optimization

- Guardrails and content filtering module

- Admin dashboard and observability integrations

- System prompt injection mechanism
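As a rough illustration of the system prompt injection and guardrail components listed above, the sketch below operates on an OpenAI-style `messages` payload like the one in the example request. The function names and the phrase-matching rule are assumptions for illustration; the gateway's actual filtering logic is not described in this document.

```python
import copy


def inject_system_prompt(payload, system_prompt):
    """Return a copy of the payload with a system message prepended."""
    updated = copy.deepcopy(payload)  # leave the caller's payload untouched
    messages = updated.setdefault("messages", [])
    messages.insert(0, {"role": "system", "content": system_prompt})
    return updated


def violates_guardrail(payload, banned_phrases):
    """Return True if any message contains a banned phrase (case-insensitive)."""
    for message in payload.get("messages", []):
        content = str(message.get("content", "")).lower()
        if any(phrase.lower() in content for phrase in banned_phrases):
            return True
    return False
```

Substring matching is the simplest possible guardrail and is easy to evade, so a production filter would likely need a more robust check.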