OpenLIT


Introduction

Open-source GenAI and LLM observability platform

OpenLIT Product Information

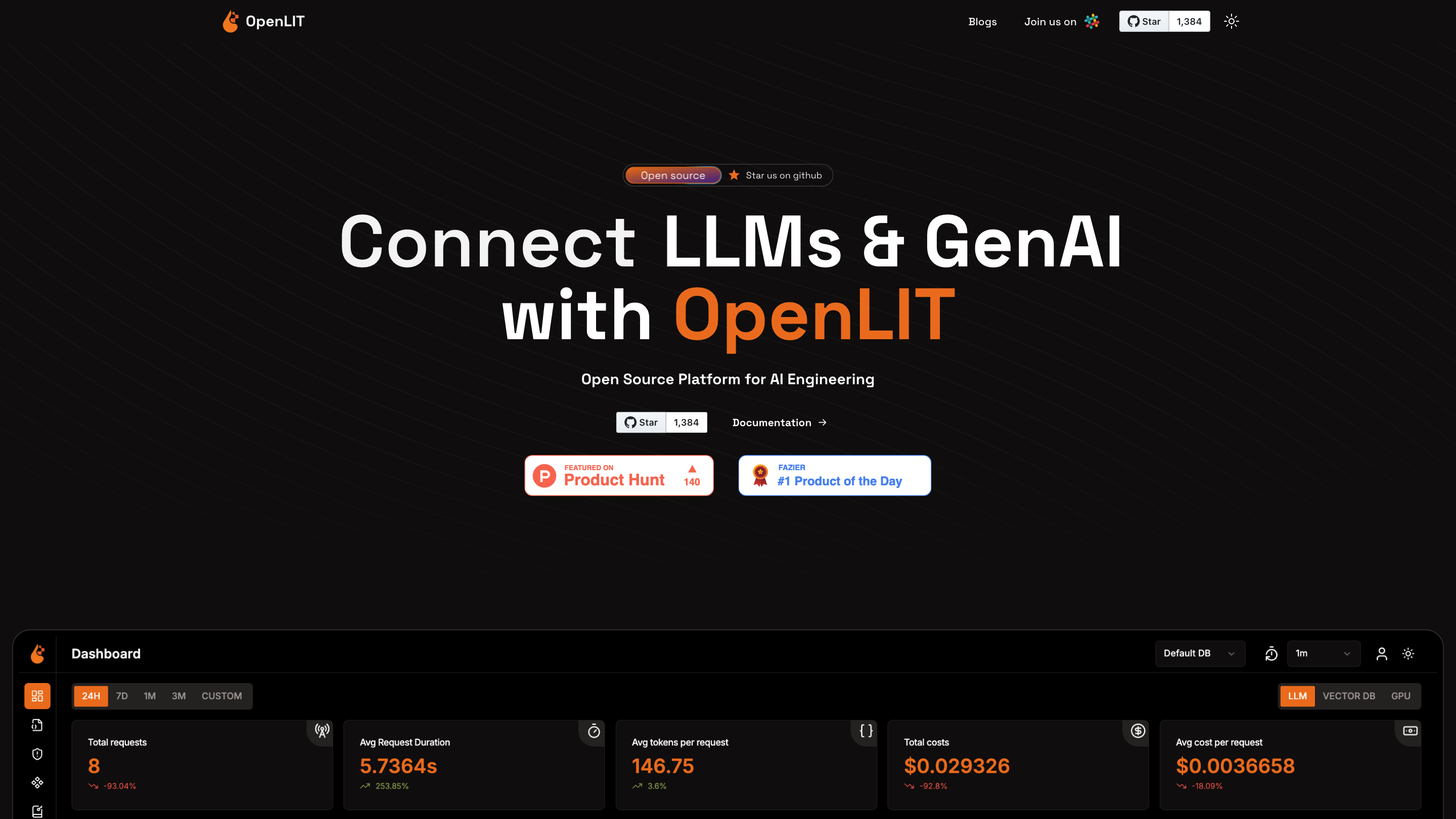

OpenLIT: OpenTelemetry-native GenAI and LLM Application Observability

OpenLIT is an open-source observability platform for GenAI and LLM applications. It helps you understand, monitor, and optimize AI-powered workloads through end-to-end tracing, exception monitoring, cost analysis, prompt management, and secret management, all built on OpenTelemetry. You can run it locally with docker-compose or self-host it, and it shows what your code does and how it performs across different providers and models.

What OpenLIT Provides

- End-to-end tracing of requests across different providers to improve performance visibility

- Detailed span tracking for response time and efficiency

- OpenTelemetry-native instrumentation for AI apps

- Cost tracking and detailed reporting to aid budgeting and decision-making

- Automatic exception monitoring with detailed stack traces to diagnose issues

- Exceptions linked to traces, so errors are captured within their request flow

- A playground to compare LLMs side-by-side on performance, cost, and other metrics

- Centralized prompt repository with versioning and dynamic variables

- Secrets management (Vault Hub) with secure storage, access, and environment integration

- Easy-to-use SDKs for Python and TypeScript

- Real-time data streaming for quick visualization and decision-making

- Observability platform integrations (Datadog, Grafana Cloud, etc.)

- Open source: easy onboarding with docker-compose and self-hosting capabilities
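The span tracking and exception monitoring listed above can be pictured with a small, stdlib-only sketch of what a span records: a name, a duration, and, if the wrapped call raises, the exception type and stack trace. The `Span` class, the `span()` helper, and `fake_llm_call` are hypothetical illustrations, not OpenLIT's or OpenTelemetry's API; in a real setup the OpenTelemetry SDK creates and exports spans for you.

```python
import time
import traceback
from collections.abc import Iterator
from contextlib import contextmanager
from dataclasses import dataclass
from typing import Optional


@dataclass
class Span:
    """Hypothetical, simplified span record (not the OpenTelemetry data model)."""
    name: str
    duration_s: float = 0.0
    status: str = "OK"
    exception_type: Optional[str] = None
    stack_trace: Optional[str] = None


# Finished spans collect here; a real exporter would send them to a backend.
FINISHED: list[Span] = []


@contextmanager
def span(name: str) -> Iterator[Span]:
    """Time the wrapped block and record any exception on the span."""
    record = Span(name=name)
    start = time.perf_counter()
    try:
        yield record
    except Exception as exc:
        # Keep the error attached to the span so it stays in the request flow.
        record.status = "ERROR"
        record.exception_type = type(exc).__name__
        record.stack_trace = traceback.format_exc()
        raise
    finally:
        record.duration_s = time.perf_counter() - start
        FINISHED.append(record)


def fake_llm_call(prompt: str) -> str:
    """Hypothetical stand-in for a provider call; fails on empty input."""
    if not prompt:
        raise ValueError("empty prompt")
    return prompt.upper()


# Usage: one successful call and one failing call.
with span("llm.chat"):
    fake_llm_call("hello")

try:
    with span("llm.chat"):
        fake_llm_call("")
except ValueError:
    pass  # the error is re-raised to the caller after being recorded

for s in FINISHED:
    print(s.name, s.status, s.exception_type, f"{s.duration_s:.6f}s")
```

Re-raising after recording keeps the application's own error handling unchanged while the span still carries the failure.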

How to Use OpenLIT

- Run locally with Docker:

  - `docker-compose up -d`

- Initialize the OpenLIT client in your project:

  - Add `openlit.init()` to start collecting data from your LLM application

  - Instrument your code so it emits traces, metrics, and logs through OpenTelemetry-compatible APIs

- Explore:

  - Side-by-side LLM comparisons in the OpenLIT PlayGround

  - Cost analysis dashboards and detailed reports

  - Centralized prompt and secret management

- Connect your preferred observability tools (Datadog, Grafana Cloud) for export and visualization
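A minimal initialization sketch, assuming the `openlit` Python package is installed (`pip install openlit`) and that the stack started with `docker-compose up -d` accepts OTLP over HTTP at `http://127.0.0.1:4318`; adjust the endpoint for your deployment. The helper name `init_observability` is hypothetical, and the guarded import simply lets the app keep running when the package is absent.

```python
import os


def init_observability(endpoint: str = "http://127.0.0.1:4318") -> bool:
    """Start OpenLIT instrumentation if the SDK is available.

    Returns True when openlit.init() was called, False if openlit is not installed.
    """
    try:
        import openlit  # assumes `pip install openlit`
    except ImportError:
        return False
    # Prefer an OTEL_EXPORTER_OTLP_ENDPOINT environment variable if one is set,
    # e.g. to point at another OTLP-compatible backend.
    openlit.init(otlp_endpoint=os.environ.get("OTEL_EXPORTER_OTLP_ENDPOINT", endpoint))
    return True


if __name__ == "__main__":
    enabled = init_observability()
    print("OpenLIT enabled" if enabled else "openlit not installed; running without tracing")
```

Call this once at startup, before the first LLM request, so the calls that follow are captured.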

Core Features

- End-to-end tracing of requests across LLM providers via OpenTelemetry

- Detailed span tracking for performance and efficiency analysis

- Automatic exception monitoring with detailed stack traces

- Side-by-side LLM comparison in PlayGround (performance, cost, metrics)

- Centralized prompt repository with versioning and dynamic variable substitution

- Secrets management via Vault Hub with secure storage and environment integration

- Environment-aware secret access and secure key management

- Real-time data streaming for immediate visibility into AI workloads

- OpenTelemetry-native integration for seamless instrumentation

- Cost analysis and comprehensive reporting for budget optimization

- Easy onboarding: self-hosted solution with docker-compose

- Observability platform integrations for export to Datadog, Grafana Cloud, etc.
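Cost analysis and side-by-side comparison both come down to simple arithmetic: a request's cost is (input tokens × input price + output tokens × output price) / 1000 when prices are quoted per 1,000 tokens. The sketch below shows that calculation and a per-model ranking. The model names, prices, and measurements are made-up placeholders, not real provider pricing or OpenLIT output, and OpenLIT's own pricing data and dashboards may compute this differently.

```python
from dataclasses import dataclass

# Hypothetical prices in USD per 1,000 tokens: (input, output). Not real pricing.
PRICES_PER_1K: dict[str, tuple[float, float]] = {
    "model-a": (0.50, 1.50),
    "model-b": (0.10, 0.40),
}


@dataclass
class Measurement:
    """One recorded request: which model, how many tokens, how long it took."""
    model: str
    input_tokens: int
    output_tokens: int
    latency_s: float


def request_cost(m: Measurement) -> float:
    """Cost of a single request in USD."""
    in_price, out_price = PRICES_PER_1K[m.model]
    return (m.input_tokens * in_price + m.output_tokens * out_price) / 1000


def compare(measurements: list[Measurement]) -> list[dict]:
    """Aggregate cost and latency per model, cheapest model first."""
    totals: dict[str, dict] = {}
    for m in measurements:
        t = totals.setdefault(m.model, {"requests": 0, "cost": 0.0, "latency": 0.0})
        t["requests"] += 1
        t["cost"] += request_cost(m)
        t["latency"] += m.latency_s
    rows = [
        {
            "model": model,
            "requests": t["requests"],
            "cost_usd": t["cost"],
            "avg_latency_s": t["latency"] / t["requests"],
        }
        for model, t in totals.items()
    ]
    return sorted(rows, key=lambda r: r["cost_usd"])


# Made-up sample data for illustration.
SAMPLE = [
    Measurement("model-a", 1000, 500, 1.2),
    Measurement("model-a", 2000, 1000, 1.8),
    Measurement("model-b", 1000, 500, 0.9),
]

for row in compare(SAMPLE):
    print(f"{row['model']}: {row['requests']} requests, "
          f"${row['cost_usd']:.4f}, avg {row['avg_latency_s']:.2f}s")
```

For model-a's first request, (1000 × 0.50 + 500 × 1.50) / 1000 = 1.25 USD.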

How It Works

- Instrument your LLM applications with OpenTelemetry and OpenLIT SDKs (Python/TypeScript)

- Collect traces, metrics, and logs for full observability across models, prompts, and calls

- Use the PlayGround to compare models on real-time performance and cost

- Store prompts and secrets securely, and substitute prompt variables at runtime

- Export data to your preferred observability stack for dashboards and alerts
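Prompt versioning with runtime variable substitution can be pictured as a registry keyed by prompt name and version. The in-memory `PromptRegistry` below is a hypothetical stand-in for OpenLIT's centralized prompt repository, not its API. It uses Python's `string.Template` with `$variable` placeholders, which may differ from the placeholder syntax OpenLIT itself uses.

```python
from string import Template
from typing import Optional


class PromptRegistry:
    """Hypothetical in-memory prompt store: name -> {version: template text}."""

    def __init__(self) -> None:
        self._prompts: dict[str, dict[int, str]] = {}

    def publish(self, name: str, text: str) -> int:
        """Store a new version of a prompt and return its version number."""
        versions = self._prompts.setdefault(name, {})
        version = len(versions) + 1
        versions[version] = text
        return version

    def render(self, name: str, variables: dict[str, str],
               version: Optional[int] = None) -> str:
        """Fill in variables for a given version, or the latest if none is given."""
        versions = self._prompts[name]
        chosen = version if version is not None else max(versions)
        # substitute() raises KeyError if a placeholder has no value.
        return Template(versions[chosen]).substitute(variables)


registry = PromptRegistry()
registry.publish("summarize", "Summarize this text: $text")
registry.publish("summarize", "Summarize this text in $words words: $text")

print(registry.render("summarize", {"text": "OpenLIT traces LLM calls.", "words": "5"}))
print(registry.render("summarize", {"text": "OpenLIT traces LLM calls."}, version=1))
```

Pinning a version keeps production stable while newer versions are tested; omitting it always picks up the latest.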

Safety and Privacy

- Traces may include prompt and response content, so avoid logging sensitive data in traces or prompts

- Use Vault Hub and secure environment handling to protect credentials and API keys

- Follow best practices for data minimization and access controls when instrumenting your applications
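One way to apply data minimization is to scrub obvious secrets and personal data from text before it is attached to a trace. The patterns below are illustrative assumptions (an `sk-`-style API key prefix and e-mail addresses) and will not catch every kind of sensitive data. This is not an OpenLIT feature, just a pre-processing step you could run in your own code.

```python
import re

# Illustrative patterns only; extend them for the data your app handles.
REDACTIONS: list[tuple[re.Pattern[str], str]] = [
    (re.compile(r"\bsk-[A-Za-z0-9_-]{16,}\b"), "[REDACTED_API_KEY]"),
    (re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"), "[REDACTED_EMAIL]"),
]


def redact(text: str) -> str:
    """Replace every match of each pattern before the text is recorded."""
    for pattern, replacement in REDACTIONS:
        text = pattern.sub(replacement, text)
    return text


print(redact("key=sk-abcdefghijklmnop1234 from alice@example.com."))
```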

Getting Started Quick Reference

- Start locally: `docker-compose up -d`

- Initialize: call `openlit.init()` in your app

- Instrument: add tracing and metrics via OpenLIT/OpenTelemetry

- Analyze: use PlayGround and cost reports to optimize

- Secure: manage secrets with Vault Hub and environment variables
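Keeping credentials in environment variables rather than in source code is the simplest form of the environment integration mentioned above. This sketch reads provider keys from the environment, fails loudly when one is missing, and masks values before they are printed. The `load_secrets` and `masked` helpers and the variable names are hypothetical, and Vault Hub itself is not used here.

```python
import os
from collections.abc import Mapping


class MissingSecretError(RuntimeError):
    """Raised when a required secret is not set."""


def load_secrets(names: list[str], env: Mapping[str, str] = os.environ) -> dict[str, str]:
    """Return the requested secrets, raising if any are missing or empty."""
    missing = [name for name in names if not env.get(name)]
    if missing:
        raise MissingSecretError(f"missing secrets: {', '.join(missing)}")
    return {name: env[name] for name in names}


def masked(value: str) -> str:
    """Hide all but the last 4 characters; hide short values entirely."""
    if len(value) <= 4:
        return "*" * len(value)
    return "*" * (len(value) - 4) + value[-4:]


if __name__ == "__main__":
    try:
        secrets = load_secrets(["OPENAI_API_KEY"])  # hypothetical variable name
        print({name: masked(v) for name, v in secrets.items()})
    except MissingSecretError as err:
        print(err)
```

Passing `env` explicitly makes the helper easy to test without touching the real process environment.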

Target Audience

- AI engineers and MLOps teams building GenAI/LLM-powered apps

- Teams needing end-to-end observability, cost control, and reliability for AI workloads

- Open-source enthusiasts seeking a transparent, self-hosted observability solution