RLAMA

Introduction

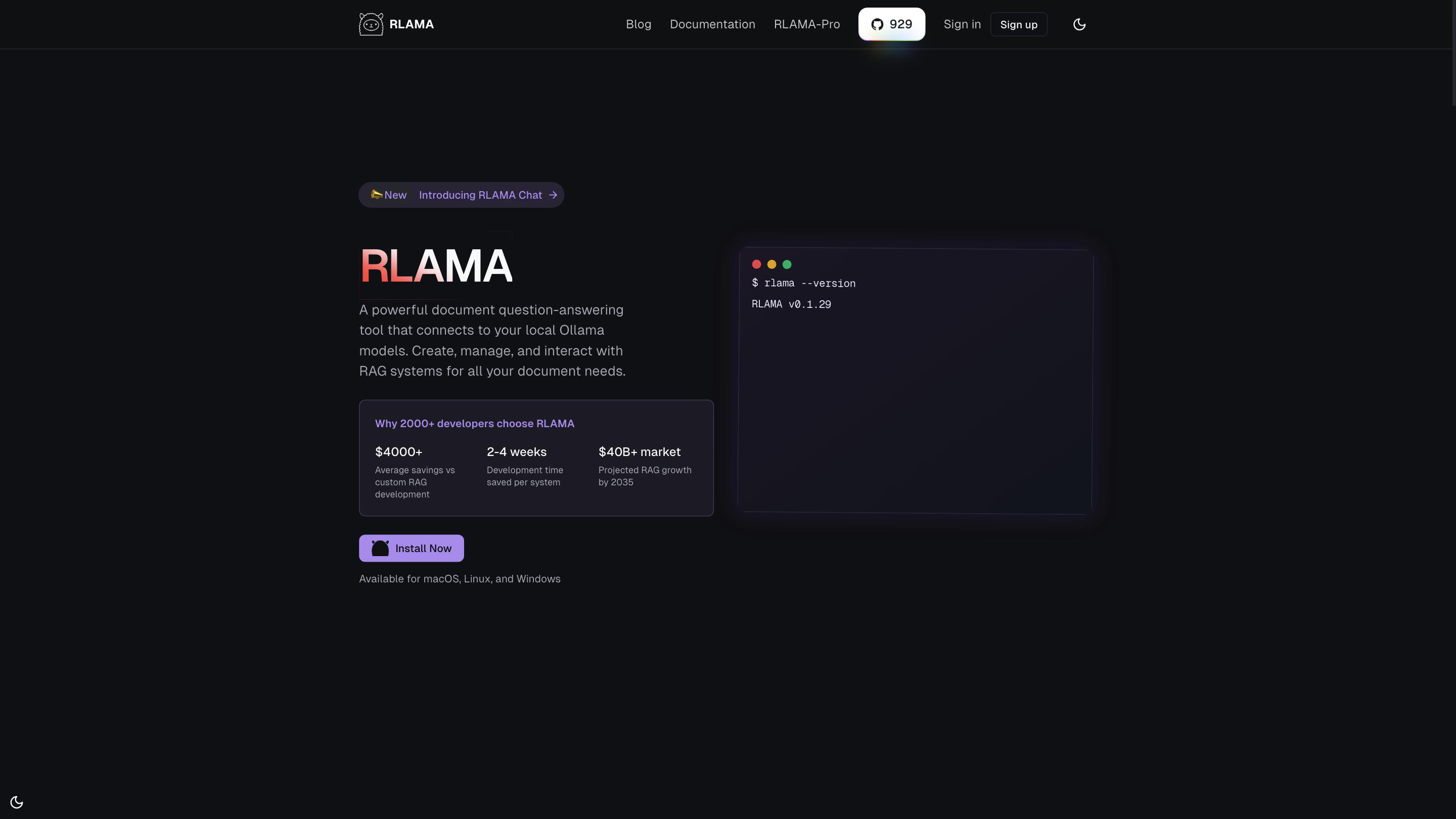

Local assistant for document question answering using RAG.

RLAMA Product Information

RLAMA is an open-source document question-answering tool that connects to your local Ollama models. It lets you create, manage, and interact with Retrieval-Augmented Generation (RAG) systems tailored to your documents, with an emphasis on local processing, broad format support, and flexible integration into existing workflows.

What is RLAMA?

RLAMA is a complete RAG solution designed for local, private document processing. It connects to local models through Ollama (for example llama3), with OpenAI and Hugging Face models available as options, and it supports offline-first operation and semantic document chunking to provide accurate question answering over your own data. It offers visual and CLI-based workflows for creating and managing RAG systems, agents, and crews that perform specialized tasks.

Core Capabilities

- Local storage and processing with no data sent externally

- Advanced semantic chunking for optimal context retrieval

- Multiple document formats (.txt, .md, .pdf, etc.)

- Web crawling to create RAGs from websites

- Directory watching for automatic RAG updates

- Hugging Face integration with 45,000+ GGUF models

- Flexible integration options (HTTP API server, cross-platform support, compatibility with multiple model providers)

- OpenAI model support alongside Ollama

AI Agents & Crews

- Agent-based workflows with roles such as researcher, writer, coder

- RAG search, code execution, and web search tools within agents

How RLAMA Works

- Create and configure RAG systems from your documents with simple commands

- Support for PDFs, Markdown, Text files, and more with intelligent parsing

- Offline-first processing ensures privacy and security

- Smart chunking to retrieve the right context from large documents

- Interactive query sessions to ask questions in natural language

- Automatic updates to RAGs when source documents change
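The chunking step above can be illustrated with a minimal sketch. This is not RLAMA's implementation: it uses simple paragraph-based chunking with a size budget as a stand-in for semantic chunking, and the function name `chunk_text` and its parameters `max_chars` and `overlap` are hypothetical.

```python
from typing import List


def chunk_text(text: str, max_chars: int = 500, overlap: int = 1) -> List[str]:
    """Group paragraphs into chunks that aim to stay under max_chars.

    The last `overlap` paragraphs of a finished chunk are repeated at the
    start of the next one so that context is not cut at a hard boundary.
    A chunk can exceed max_chars if a single paragraph is longer than the
    budget or if the carried-over paragraphs are large.
    """
    # Split on blank lines and drop empty paragraphs.
    paragraphs = [p.strip() for p in text.split("\n\n") if p.strip()]
    chunks: List[str] = []
    current: List[str] = []
    for para in paragraphs:
        candidate = "\n\n".join(current + [para])
        if current and len(candidate) > max_chars:
            # Close the current chunk and carry trailing paragraphs over.
            chunks.append("\n\n".join(current))
            current = current[-overlap:] if overlap > 0 else []
        current.append(para)
    if current:
        chunks.append("\n\n".join(current))
    return chunks
```

Each chunk would then be embedded and stored. At query time, the chunks most similar to the question are retrieved and passed to the model as context.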

How to Use RLAMA

- Install RLAMA (free and open source).

- Create a RAG from a folder of documents: rlama rag [model] [rag-name] [folder-path]

- Start an interactive session: rlama run [rag-name]

- Manage RAGs with commands like list, delete, watch, api, update

- Use the Visual Builder (RLAMA Unlimited) for a no-code experience to build RAGs quickly

Example commands:

- Create a RAG: rlama rag llama3 documentation ./docs

- Run a RAG: rlama run documentation

- List RAGs: rlama list

- Watch for changes: rlama watch documentation ./docs

- Start API: rlama api --port 8080
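For scripted use, the commands above can be assembled from Python. The sketch below only relies on the command shapes shown in this section (`rlama rag [model] [rag-name] [folder-path]`, `rlama list`). The helper names `rag_command` and `run` are hypothetical, and by default nothing is executed.

```python
import shutil
import subprocess
from typing import List, Optional


def rag_command(model: str, rag_name: str, folder: str) -> List[str]:
    """Argument list for: rlama rag [model] [rag-name] [folder-path]."""
    return ["rlama", "rag", model, rag_name, folder]


def list_command() -> List[str]:
    """Argument list for: rlama list."""
    return ["rlama", "list"]


def run(cmd: List[str], dry_run: bool = True) -> Optional[int]:
    """Print the command; execute it only if dry_run is False and the
    executable is found on PATH. Returns the exit code, or None if skipped."""
    print(" ".join(cmd))
    if dry_run or shutil.which(cmd[0]) is None:
        return None
    return subprocess.run(cmd, check=True).returncode


if __name__ == "__main__":
    run(rag_command("llama3", "documentation", "./docs"))
    run(list_command())
```

Passing the arguments as a list rather than a single shell string avoids quoting problems when folder paths contain spaces.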

Supported File Formats

- Text: .txt, .md, .html, .json, .csv, .yaml, .yml, .xml, .org

- Code: .go, .py, .js, .java, .c, .cpp, .h, .rb, .php, .rs, .swift, .kt, .ts, etc.

- Documents: .pdf, .docx, .doc, .rtf, .odt, .pptx, .ppt, .xlsx, .xls, .epub
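Before building a RAG from a mixed folder, it can help to check which files fall into the categories listed above. The sketch below copies the extension lists from this section. The code list in the source ends with "etc.", so the set here is not exhaustive. The name `classify` is hypothetical and is not part of RLAMA.

```python
from pathlib import Path
from typing import Optional

# Extension lists taken from the section above; the "code" list is partial.
SUPPORTED = {
    "text": {".txt", ".md", ".html", ".json", ".csv", ".yaml", ".yml", ".xml", ".org"},
    "code": {".go", ".py", ".js", ".java", ".c", ".cpp", ".h", ".rb", ".php",
             ".rs", ".swift", ".kt", ".ts"},
    "documents": {".pdf", ".docx", ".doc", ".rtf", ".odt", ".pptx", ".ppt",
                  ".xlsx", ".xls", ".epub"},
}


def classify(path: str) -> Optional[str]:
    """Return the category for a file path, or None if its extension
    is not in the lists above. Matching is case-insensitive."""
    ext = Path(path).suffix.lower()
    for category, extensions in SUPPORTED.items():
        if ext in extensions:
            return category
    return None
```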

Features Overview

- Local, private processing with 100% offline support

- Simple setup and configuration of RAG systems

- Support for PDFs, Markdown, Text, and more with intelligent parsing

- Interactive query sessions for natural language questions

- Automatic document watching to keep RAGs up to date

- Visual RAG Builder for quick creation with no coding

- Drag-and-drop document uploads and straightforward configuration

- Supports multiple embedding models and sources (Local Folder, Website)

- Cross-platform: macOS, Linux, Windows

- API server for integration into external apps

- Flexible agent ecosystem with researchers, writers, coders, etc.
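To illustrate the API server integration listed above, here is a minimal client sketch using only the Python standard library. The endpoint path `/rag` and the JSON fields `rag_name` and `prompt` are assumptions and should be checked against the RLAMA documentation. The port matches the `rlama api --port 8080` example earlier on this page.

```python
import json
import urllib.request


def build_query_request(rag_name: str, prompt: str, host: str = "localhost",
                        port: int = 8080, path: str = "/rag") -> urllib.request.Request:
    """Build (but do not send) a JSON POST request for a RAG query.

    The path and the payload field names are assumptions, not a confirmed API.
    """
    payload = json.dumps({"rag_name": rag_name, "prompt": prompt}).encode("utf-8")
    return urllib.request.Request(
        f"http://{host}:{port}{path}",
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(rag_name: str, prompt: str, **kwargs) -> dict:
    """Send the request to a running server and decode the JSON response."""
    request = build_query_request(rag_name, prompt, **kwargs)
    with urllib.request.urlopen(request, timeout=60) as response:
        return json.loads(response.read().decode("utf-8"))
```

Keeping request construction separate from sending makes the payload easy to inspect or adjust once the real endpoint details are known.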

Why Choose RLAMA?

- 100% local processing for privacy and data security

- Open-source with a vibrant ecosystem and integrations

- Rapid setup to create powerful document-based Q&A systems

- Suitable for personal projects, research, and enterprise-like workflows

Safety & Privacy Considerations

- All processing can be kept local: when you use only local models (for example through Ollama), your documents and queries do not leave your environment

- Ensure appropriate handling of sensitive information when integrating with external sources

Quick Start Summary

- RLAMA is a free, open-source CLI tool with a visual builder option (RLAMA Unlimited)

- Create RAGs from your documents, run interactive sessions, and expose an API if needed

- Supports a wide range of document formats and models

Additional Resources

- Documentation and examples in the repository

- Community and support channels for RLAMA

Core Features

- Simple setup: Create and configure RAG systems with minimal commands

- Multiple document formats: PDFs, Markdown, TXT, etc., with intelligent parsing

- Offline-first: 100% local processing without external data transmission

- Intelligent chunking: Optimized context retrieval

- Interactive sessions: Natural language querying

- Document watching: Automatic updates when documents change

- Visual RAG Builder: Create RAGs in minutes with no coding

- Easy drag-and-drop document uploads

- Model flexibility: Ollama, OpenAI, Hugging Face integration

- Cross-platform support: macOS, Linux, Windows