Stable Video Diffusion

Introduction

Transform images into videos with Stable Video Diffusion.

Stable Video Diffusion Product Information

Free Stable Video Diffusion (SVD) – Stable Image to Video Generation is an open-source AI tool by Stability AI that turns a single still image into a short video. Released as a research preview, it comes in two model variants (SVD and SVD-XT) and supports quick experimentation for educational and creative purposes. The tool emphasizes accessibility (open source), local experimentation (via GitHub and Hugging Face), and rapid prototyping of video content from images.

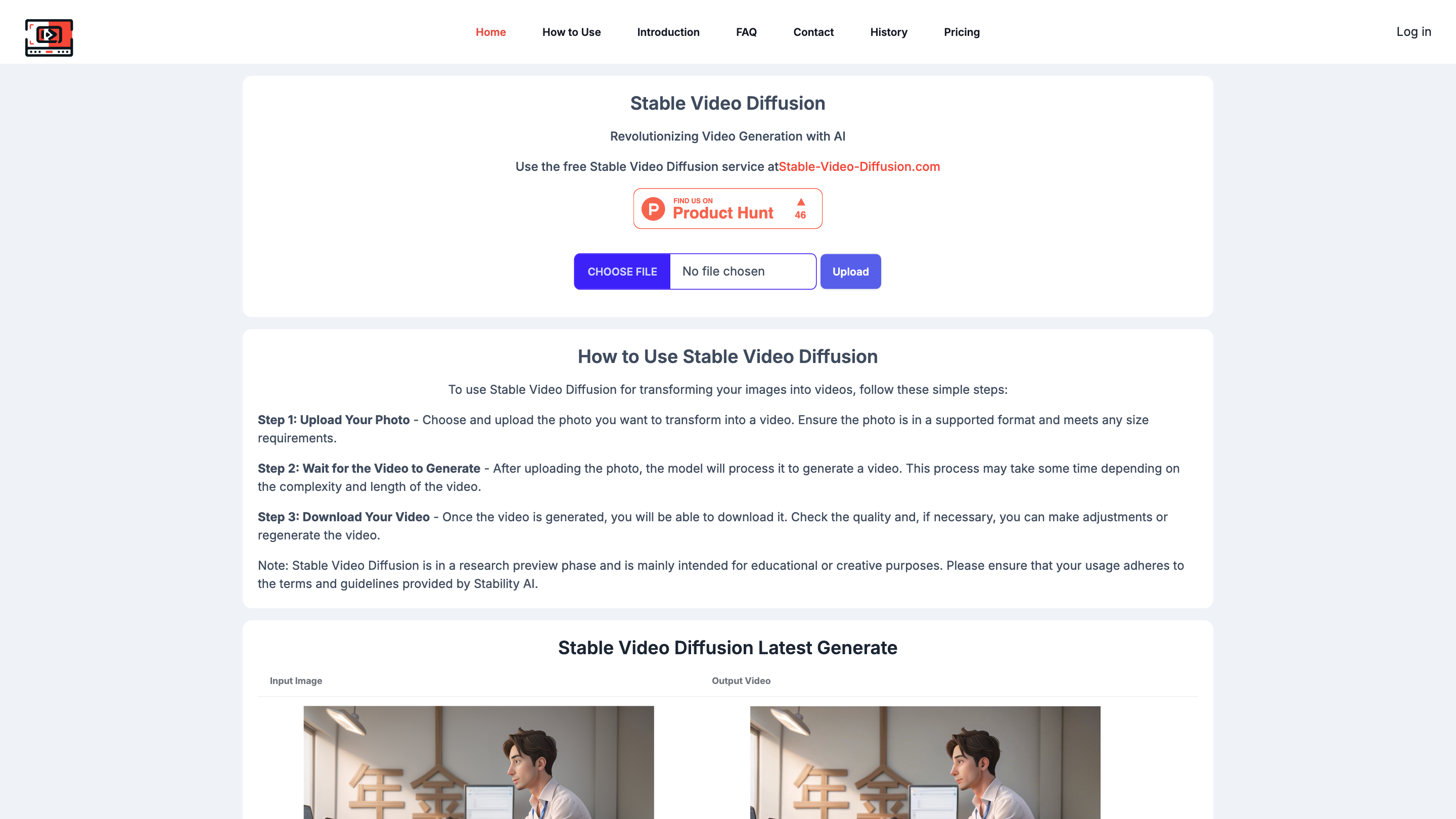

How to Use Free Stable Video Diffusion

- Upload Your Photo – Choose the image you want to animate and upload it in a supported format. The model was trained at 576×1024 (height × width), so 16:9 landscape images need the least cropping or resizing.

- Wait for the Video to Generate – The model processes the image to create a video. Generation time and output quality depend on the chosen settings and the available hardware.

- Download Your Video – Retrieve the generated video, review the result, and regenerate if adjustments are needed.

Note: Stable Video Diffusion is provided in a research preview phase and is intended for educational or creative exploration. Follow Stability AI's terms and guidelines when using the model.
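The upload, generate, and download steps above can also be run locally. The sketch below assumes the Hugging Face `diffusers` library with `torch`, `accelerate`, and Pillow installed, a CUDA GPU, and (if the model repository is gated) that you have accepted its terms on Hugging Face. The helper names `svd_crop_box` and `image_to_video`, and the seed, fps, and `decode_chunk_size` values, are illustrative choices rather than an official API. Because SVD was trained at 576×1024, the sketch center-crops the photo to a 16:9 aspect ratio before resizing it.

```python
# Minimal local sketch of the upload -> generate -> download workflow.
# Assumes diffusers, torch, accelerate, and Pillow are installed and a CUDA GPU is available.
# svd_crop_box and image_to_video are hypothetical helper names for illustration.

SVD_WIDTH, SVD_HEIGHT = 1024, 576  # SVD's training resolution (width x height)


def svd_crop_box(width: int, height: int) -> tuple:
    """Return a centered (left, top, right, bottom) box with SVD's 16:9 aspect ratio."""
    target_ratio = SVD_WIDTH / SVD_HEIGHT
    if width / height > target_ratio:
        # Image is too wide: trim equal amounts from the left and right edges.
        new_width = round(height * target_ratio)
        left = (width - new_width) // 2
        return (left, 0, left + new_width, height)
    # Image is too tall (or already 16:9): trim the top and bottom edges.
    new_height = round(width / target_ratio)
    top = (height - new_height) // 2
    return (0, top, width, top + new_height)


def image_to_video(image_path: str, output_path: str = "generated.mp4",
                   repo_id: str = "stabilityai/stable-video-diffusion-img2vid-xt",
                   fps: int = 7, seed: int = 42) -> str:
    """Animate one still image with SVD and write the frames to an MP4 file."""
    # Heavy imports are deferred so svd_crop_box works without the GPU stack.
    import torch
    from diffusers import StableVideoDiffusionPipeline
    from diffusers.utils import export_to_video, load_image

    # Step 1 ("upload"): load the photo and fit it to the training resolution.
    image = load_image(image_path)
    image = image.crop(svd_crop_box(*image.size)).resize((SVD_WIDTH, SVD_HEIGHT))

    # Step 2 (generate): half precision plus CPU offload keeps GPU memory down.
    pipe = StableVideoDiffusionPipeline.from_pretrained(
        repo_id, torch_dtype=torch.float16, variant="fp16"
    )
    pipe.enable_model_cpu_offload()
    generator = torch.manual_seed(seed)
    frames = pipe(image, fps=fps, decode_chunk_size=8, generator=generator).frames[0]

    # Step 3 ("download"): write the frames to disk for review.
    export_to_video(frames, output_path, fps=fps)
    return output_path
```

For example, `image_to_video("photo.jpg")` would write `generated.mp4` to the working directory. Keeping the cropping arithmetic in a separate pure function makes it easy to check without loading the model; lowering `decode_chunk_size` reduces peak memory at some cost to temporal consistency.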

Model Variants and Capabilities

- SVD: Transforms an input image into a video with 14 frames at 576×1024 resolution.

- SVD-XT: Extends the output to 25 frames at the same resolution, allowing longer motion.

- Frame rates: 3–30 frames per second depending on settings.

- Outputs are short clips suited to concept exploration and rapid prototyping, and several can be generated in a batch.
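Clip length follows directly from frame count and playback rate: seconds = frames / fps. The sketch below works out the range for each variant, using the frame counts from the Hugging Face model cards (14 for SVD, 25 for SVD-XT) and the 3–30 fps range listed above. The `VARIANTS` mapping and `clip_duration_seconds` helper are illustrative names, not part of any Stability AI API; the repository IDs are the two checkpoints' Hugging Face names.

```python
# Clip-duration arithmetic for the two SVD variants: seconds = frames / fps.
# Frame counts follow the Hugging Face model cards; helper names are illustrative.

VARIANTS = {
    "SVD": {"repo_id": "stabilityai/stable-video-diffusion-img2vid", "frames": 14},
    "SVD-XT": {"repo_id": "stabilityai/stable-video-diffusion-img2vid-xt", "frames": 25},
}

MIN_FPS, MAX_FPS = 3, 30  # frame-rate range quoted for SVD


def clip_duration_seconds(frames: int, fps: float) -> float:
    """Return the playback length in seconds of `frames` frames shown at `fps`."""
    if not MIN_FPS <= fps <= MAX_FPS:
        raise ValueError(f"fps must be between {MIN_FPS} and {MAX_FPS}, got {fps}")
    return frames / fps


if __name__ == "__main__":
    for name, spec in VARIANTS.items():
        shortest = clip_duration_seconds(spec["frames"], MAX_FPS)
        longest = clip_duration_seconds(spec["frames"], MIN_FPS)
        print(f"{name}: {spec['frames']} frames -> {shortest:.2f}s to {longest:.2f}s")
```

Run as a script, this prints roughly 0.47 to 4.67 seconds for SVD and 0.83 to 8.33 seconds for SVD-XT. At 7 fps, the default in the `diffusers` pipeline, SVD gives a 2-second clip and SVD-XT about 3.6 seconds.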

Practical Use Cases

- Image-to-video experimentation for art, animation concepts, and creative storytelling.

- Rapid prototyping of video ideas from still imagery for educational or creative projects.

Limitations and Considerations

- This is a research preview; results may vary and may not be suitable for commercial production.

- Requires suitable GPU hardware, whether run locally or through a supported interface.

- As an open-source project, users should review licensing and ethical considerations for generated content.

Access and Community

- Code and weights are available on GitHub and Hugging Face, enabling open-source collaboration and local experimentation.

- Community-driven development with opportunities to contribute, report issues, and share results.

Future Prospects

- Potential enhancements include longer video durations, improved motion control, and broader accessibility through user-friendly interfaces such as a text-to-video workflow.

- Ongoing work aims to expand commercial applicability and ease of use while preserving the open-source ethos.

Safety and Responsible Use

- Use for educational, exploratory, or artistic purposes.

- Be mindful of ethical considerations around generated content, including consent and potential misrepresentation.

Core Features

- Open source, with code and model weights available on GitHub and Hugging Face

- Two variants: SVD (14 frames, 576×1024) and SVD-XT (25 frames, 576×1024)

- Image-to-video generation with adjustable frame rates (3–30fps)

- Local/offline experimentation possible

- Suitable for educational and creative experimentation