Tiktokenizer


Introduction

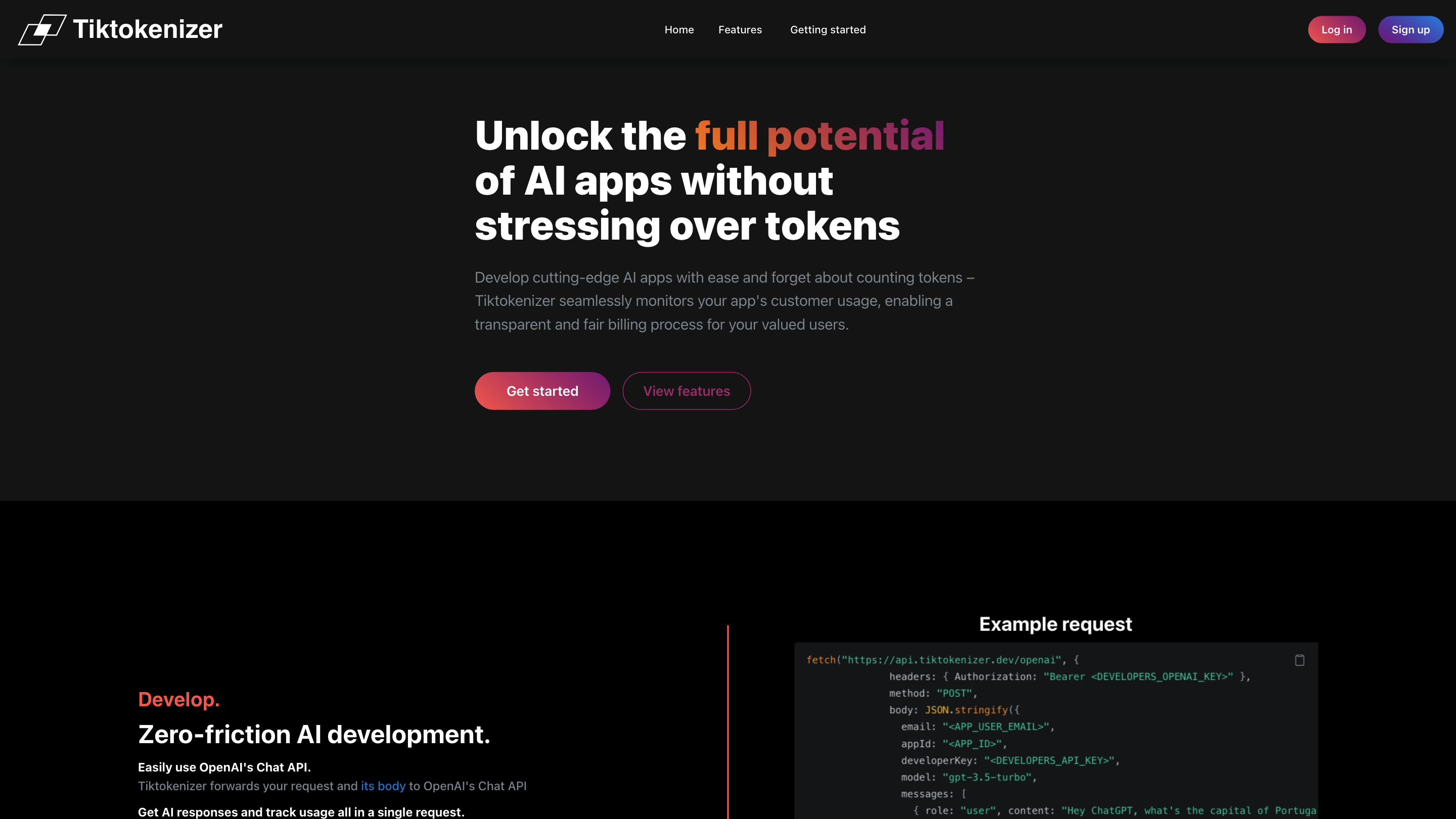

Tiktokenizer is a monitoring platform that helps developers track their users' AI token usage.

Tiktokenizer Product Information

Tiktokenizer is a developer-focused AI tooling platform that lets you integrate OpenAI's Chat API with zero-friction token usage tracking and real-time usage visualization. It is designed to simplify building AI-powered apps by handling token accounting, moderation checks, and billing-ready usage data in a single request flow. The service runs a Moderations API check on each request, forwards the request body to OpenAI's Chat API, and provides ready-made code snippets and a built-in visualizer for monitoring usage and billing per user.

How it works

- Your app sends a request to Tiktokenizer with the desired model, messages, and metadata (user email, appId, developerKey).

- Tiktokenizer forwards the payload to OpenAI's Chat API after running Moderations API checks on the content.

- The response from OpenAI is returned to your app, and usage metrics (tokens, cost estimates) are captured in real time.

- The platform offers a built-in usage API to show token consumption to users and to support billing cycles.
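The four steps above can be sketched as a single handler. This is a minimal, hypothetical illustration, not Tiktokenizer's implementation: `handleChatRequest`, the injected `moderate` and `chat` functions, and the in-memory `usageStore` map are all assumptions. The `results[].flagged`, `choices[0].message.content`, and `usage.total_tokens` fields follow the response shapes of OpenAI's Moderations and Chat Completions APIs, and the returned object mirrors the shape of Tiktokenizer's documented example response.

```javascript
// Hypothetical sketch of the request flow; not Tiktokenizer's actual code.
// `moderate` and `chat` are injected so the flow can be shown without network calls.
async function handleChatRequest(payload, { moderate, chat, usageStore }) {
  const { email, appId, model, messages } = payload;

  // 1. Pre-request check: send every message's text to the moderation step.
  const moderation = await moderate(messages.map((m) => m.content));
  if (moderation.results.some((r) => r.flagged)) {
    return { error: "Content rejected by moderation check" };
  }

  // 2. Forward the model and messages to the chat completion API.
  const completion = await chat({ model, messages });
  const tokens = completion.usage.total_tokens;

  // 3. Record usage per (app, user) so it can be displayed and billed later.
  const key = `${appId}:${email}`;
  const isNewUser = !usageStore.has(key);
  usageStore.set(key, (usageStore.get(key) ?? 0) + tokens);

  // 4. Return the reply plus usage, in the same shape as the example response.
  return {
    message: completion.choices[0].message.content,
    usage: { totalTokens: tokens },
    newUserCreated: isNewUser,
  };
}
```

Injecting `moderate` and `chat` keeps the flow testable offline; in a real service they would wrap HTTP calls to OpenAI's `/v1/moderations` and `/v1/chat/completions` endpoints. Note that a rejected request is never forwarded and never adds to the user's token total.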

Getting started

- Use pre-built code snippets directly in your project to integrate quickly.

- See a live example request/response flow (provided in the tool’s docs) to understand the payload structure.

- Visualize usage and billing data with the in-app visualizer.

How to use the API (example)

Example request

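```javascript
// Replace each <PLACEHOLDER> with your own values.
fetch("https://api.tiktokenizer.dev/openai", {
  method: "POST",
  headers: { Authorization: "Bearer <DEVELOPERS_OPENAI_KEY>" }, // your OpenAI API key
  body: JSON.stringify({
    email: "<APP_USER_EMAIL>", // the end user whose token usage is tracked
    appId: "<APP_ID>",
    developerKey: "<DEVELOPERS_API_KEY>",
    model: "gpt-3.5-turbo",
    messages: [{ role: "user", content: "Hey ChatGPT, what's the capital of Portugal?" }],
  }),
})
  .then((res) => res.json())
  // Fields as shown in the example response
  .then((data) => console.log(data.message, data.usage.totalTokens));
```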
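If you send this request from several places, a small helper can assemble the same `fetch()` arguments and catch missing metadata before anything goes over the network. This is a hypothetical sketch: `buildTiktokenizerRequest` and its parameter names are assumptions, while the URL, header, and body fields are taken from the example request.

```javascript
// Hypothetical helper: builds the same fetch() arguments as the example request.
const TIKTOKENIZER_URL = "https://api.tiktokenizer.dev/openai";

function buildTiktokenizerRequest({ openaiKey, email, appId, developerKey, model, messages }) {
  // Fail early on missing metadata instead of sending an incomplete request.
  for (const [name, value] of Object.entries({ openaiKey, email, appId, developerKey, model })) {
    if (!value) throw new Error(`Missing required field: ${name}`);
  }
  if (!Array.isArray(messages) || messages.length === 0) {
    throw new Error("messages must be a non-empty array");
  }
  return [
    TIKTOKENIZER_URL,
    {
      method: "POST",
      headers: { Authorization: `Bearer ${openaiKey}` },
      body: JSON.stringify({ email, appId, developerKey, model, messages }),
    },
  ];
}
```

Calling `fetch(...buildTiktokenizerRequest(params))` then sends the request exactly as in the example.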

Example response

```json
{
  "message": "The capital of Portugal is Lisbon.",
  "usage": { "totalTokens": 25 },
  "newUserCreated": false
}
```
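On the client side, the fields in the example response can be read and checked before they are shown to users or stored for billing. This minimal sketch assumes only the three fields in that example; `parseTiktokenizerResponse` and the renamed `reply` field are hypothetical.

```javascript
// Minimal sketch: validate and extract the fields shown in the example response.
// Any additional fields in the payload are ignored.
function parseTiktokenizerResponse(json) {
  if (typeof json?.message !== "string") {
    throw new Error("Response has no 'message' string");
  }
  const totalTokens = json.usage?.totalTokens;
  if (!Number.isInteger(totalTokens) || totalTokens < 0) {
    throw new Error("Response has no valid 'usage.totalTokens'");
  }
  return {
    reply: json.message,
    totalTokens,
    // Treat anything other than an explicit `true` as an existing user.
    newUserCreated: json.newUserCreated === true,
  };
}
```

Rejecting a response without a valid token count keeps malformed data out of usage totals, which matters once those totals drive billing.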

Core capabilities

- Real-time token usage tracking per request and per user

- Built-in Moderations API checks before sending requests to OpenAI

- Easy-to-use, copy-paste code snippets for rapid integration

- Visualizer for usage and billing data across ChatGPT and GPT-4 models

- Simple subscription and billing flow with token-based pricing insights
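Per-user tracking and token-based pricing come down to summing each user's tokens and multiplying by a rate. The sketch below is a hypothetical illustration, not Tiktokenizer's billing logic: `summarizeUsage`, the record shape, and `pricePer1kTokens` are assumptions, and the rate is a placeholder you supply, not a real OpenAI or Tiktokenizer price.

```javascript
// Hypothetical sketch: roll per-request token counts up into per-user totals.
// `pricePer1kTokens` is a caller-supplied placeholder rate.
function summarizeUsage(records, pricePer1kTokens) {
  const perUser = new Map();
  for (const { email, totalTokens } of records) {
    const entry = perUser.get(email) ?? { requests: 0, tokens: 0 };
    entry.requests += 1;
    entry.tokens += totalTokens;
    perUser.set(email, entry);
  }
  // Convert each user's token total into an estimated cost.
  return [...perUser].map(([email, { requests, tokens }]) => ({
    email,
    requests,
    tokens,
    estimatedCost: (tokens / 1000) * pricePer1kTokens,
  }));
}
```

Real pricing often differs between prompt and completion tokens and between models, so a production version would keep those counts separate rather than using one flat rate.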

Safety and best practices

- Obtain your users' consent before processing their requests and exposing their usage for billing.

- Use Moderations checks to prevent unsafe or disallowed content from being sent to the OpenAI API.

- Treat usage data as PII where applicable and secure it in transit and at rest.
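Because usage records are keyed by user email, one way to limit PII exposure is to store a keyed hash of the email instead of the raw address. This is a minimal sketch of that one option, not a feature Tiktokenizer is documented to provide: `pseudonymizeEmail`, `toUsageRecord`, and the `secret` parameter are hypothetical. It uses Node's built-in `crypto.createHmac`; an HMAC is used rather than a plain hash because plain hashes of email addresses can be reversed by guessing likely addresses.

```javascript
// Minimal sketch: replace raw emails in usage records with a keyed hash (HMAC-SHA256).
// `secret` is a hypothetical server-side key you would manage yourself.
const crypto = require("crypto");

function pseudonymizeEmail(email, secret) {
  // Normalize so " User@Example.com " and "user@example.com" map to the same ID.
  const normalized = email.trim().toLowerCase();
  return crypto.createHmac("sha256", secret).update(normalized).digest("hex");
}

function toUsageRecord(email, totalTokens, secret) {
  // The stored record carries a stable pseudonymous ID, never the address itself.
  return {
    userId: pseudonymizeEmail(email, secret),
    totalTokens,
    recordedAt: new Date().toISOString(),
  };
}
```

The same secret must be used for every record so a user's ID stays stable across requests; rotating it starts a new set of IDs.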

Core Features

- Zero-friction AI app development: quick start with pre-built code snippets

- Real-time per-user token usage visualization and billing insights

- Pre-request Moderations API integration for safety checks

- Simple API integration with OpenAI’s Chat API

- Built-in visualizer for tracking usage, costs, and requests

- Secure handling of credentials and easy subscription refresh flow

- Detailed payload examples and ready-to-use code templates
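The example request embeds `<DEVELOPERS_OPENAI_KEY>` and `<DEVELOPERS_API_KEY>` as literal placeholders. A common way to keep such credentials out of source code is to load them from environment variables at startup. In this minimal sketch, `loadCredentials` and the variable names `OPENAI_API_KEY`, `TIKTOKENIZER_DEVELOPER_KEY`, and `TIKTOKENIZER_APP_ID` are hypothetical; use whatever names your deployment defines.

```javascript
// Minimal sketch: read credentials from environment variables instead of hard-coding them.
// The variable names are hypothetical.
function loadCredentials(env = process.env) {
  const required = {
    OPENAI_API_KEY: env.OPENAI_API_KEY,
    TIKTOKENIZER_DEVELOPER_KEY: env.TIKTOKENIZER_DEVELOPER_KEY,
    TIKTOKENIZER_APP_ID: env.TIKTOKENIZER_APP_ID,
  };
  // Report only the names of missing variables, never any secret values.
  const missing = Object.entries(required)
    .filter(([, value]) => !value)
    .map(([name]) => name);
  if (missing.length > 0) {
    throw new Error(`Missing environment variables: ${missing.join(", ")}`);
  }
  return {
    openaiKey: required.OPENAI_API_KEY,
    developerKey: required.TIKTOKENIZER_DEVELOPER_KEY,
    appId: required.TIKTOKENIZER_APP_ID,
  };
}
```

Failing at startup with the list of missing names surfaces configuration mistakes immediately, rather than as authorization errors on the first request.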