Trainkore

Introduction

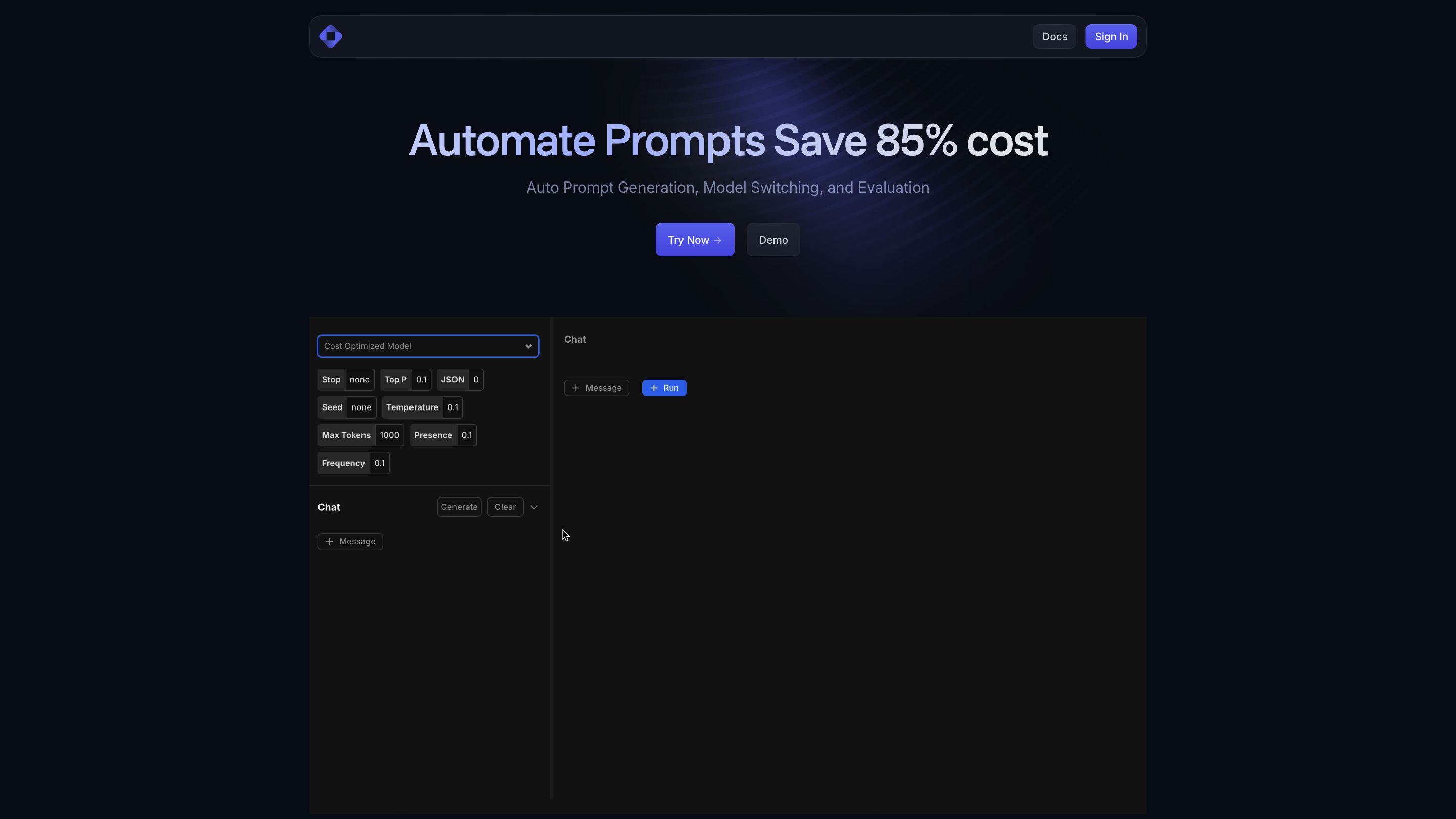

A platform for prompt management and RAG with cost optimization.

Trainkore Product Information

Trainkore — Unified AI Prompting, Model Management and Observability

Trainkore is a unified platform for building, evaluating, and deploying prompts across multiple AI models. It combines auto prompt generation, model switching, cost optimization, versioning, and observability into a single workflow-friendly environment. It works with OpenAI and other providers out of the box and integrates natively with popular tooling such as LangChain and LlamaIndex.

Note: The platform includes placeholders for demo and video content and showcases features marked as coming soon. It is designed to help teams iterate on prompts, compare model behavior, and optimize costs across models and use cases.

How It Works

- Connect models and providers. Trainkore supports OpenAI, Gemini, Cohere, Anthropic, Azure, and more, plus your own models. It can be used natively with LangChain, LlamaIndex, and other ecosystems.

- Prompt Versioning. Manage, compare, and roll back prompts across different model versions to ensure reproducibility.

- Auto Prompt Generation. Generate effective prompts automatically for any supported model, accelerating experimentation.

- Model Switching. Automatically select the best model for a given prompt based on runtime signals, prompts, and metadata.

- Observability. Get insights from key metrics, detailed logs, and structured input/output data to debug and optimize prompts and models.

- Playground & Iteration. Use the best-in-class playground to iterate on prompts across your organization, with support for batch evaluations and performance analysis.

- Usage & Cost Awareness. Monitor usage and optimize cost by selecting the most cost-effective models automatically.
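The source does not describe how Trainkore's model switching decides between models. The sketch below is therefore a generic, hypothetical illustration of cost-aware selection. It assumes each candidate model carries a per-token price, a quality score from your own evaluations, and a context limit. The router picks the cheapest model that clears a quality bar and fits the request. All model names, prices, and scores are made-up placeholders, and none of these functions are Trainkore APIs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelOption:
    """A candidate model. Every field value used below is an illustrative placeholder."""
    name: str
    usd_per_1k_tokens: float  # hypothetical blended price, NOT real provider pricing
    quality: float            # hypothetical 0-1 score, e.g. from your own evaluations
    max_context: int          # context window, in tokens


def estimate_tokens(text: str) -> int:
    # Rough heuristic of ~4 characters per token; use a real tokenizer in practice.
    return max(1, len(text) // 4)


def estimated_cost(model: ModelOption, prompt: str, output_tokens: int = 256) -> float:
    total_tokens = estimate_tokens(prompt) + output_tokens
    return total_tokens / 1000 * model.usd_per_1k_tokens


def pick_model(prompt: str, options: list[ModelOption], min_quality: float,
               output_tokens: int = 256) -> ModelOption:
    """Return the cheapest model that clears the quality bar and fits the request."""
    needed = estimate_tokens(prompt) + output_tokens
    eligible = [m for m in options
                if m.quality >= min_quality and m.max_context >= needed]
    if not eligible:
        raise ValueError("no model meets the quality and context constraints")
    # The token estimate is identical for every candidate, so the lowest cost wins.
    return min(eligible, key=lambda m: estimated_cost(m, prompt, output_tokens))


# Hypothetical catalog; names, prices, and scores are made up for the example.
catalog = [
    ModelOption("large-model", 0.030, 0.92, 128_000),
    ModelOption("mid-model", 0.004, 0.85, 32_000),
    ModelOption("small-model", 0.0005, 0.70, 8_000),
]

choice = pick_model("Summarize this support ticket in two sentences.", catalog, min_quality=0.8)
print(choice.name)  # mid-model
```

Raising `min_quality` to 0.9 routes the same prompt to `large-model`. A router built this way could also weigh latency, rate limits, or observed error rates. The "runtime signals" mentioned above are not specified in the source.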

Features Overview

- Auto Prompt Generation for any model

- Model Switching to the best model based on prompts and usage

- Observability with input/output, prompts, metadata, and performance metrics

- Prompt Versioning across OpenAI, Gemini, Cohere, Anthropic, Azure, and more

- Integration with LangChain, LlamaIndex, and external tools

- Interactive Playground for enterprise-grade prompt management

- Unified platform for standard LLMs and your own models

- Cost optimization: cost-aware model selection and usage

- Iterative Logs and Performance Analysis (input, output, rating, prompts, metadata, etc.)

- Natively supports a wide range of providers and models
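The observability features above log input, output, rating, prompt, metadata, and performance data for each call. The following hypothetical sketch shows one way such a structured log record and a per-model summary could look. The field names and the `summarize` helper are assumptions chosen to mirror that list. They are not Trainkore's schema or API.

```python
import json
import statistics
import time
from dataclasses import asdict, dataclass, field
from typing import Optional


@dataclass
class CallLog:
    """One logged model call. Field names are hypothetical, chosen to mirror the
    input, output, rating, prompt, and metadata items listed above."""
    model: str
    prompt_id: str
    prompt_version: int
    input_text: str
    output_text: str
    latency_ms: float
    input_tokens: int
    output_tokens: int
    rating: Optional[int] = None  # e.g. a 1-5 human or automated score
    metadata: dict = field(default_factory=dict)
    timestamp: float = field(default_factory=time.time)

    def to_json(self) -> str:
        # One JSON object per line (JSON Lines) is easy to ship to most log pipelines.
        return json.dumps(asdict(self))


def summarize(logs: list[CallLog]) -> dict[str, dict[str, float]]:
    """Per-model call count, median latency, and mean rating over rated calls only."""
    by_model: dict[str, list[CallLog]] = {}
    for log in logs:
        by_model.setdefault(log.model, []).append(log)
    summary = {}
    for model, entries in by_model.items():
        ratings = [e.rating for e in entries if e.rating is not None]
        summary[model] = {
            "calls": len(entries),
            "median_latency_ms": statistics.median(e.latency_ms for e in entries),
            "mean_rating": statistics.mean(ratings) if ratings else float("nan"),
        }
    return summary


# Made-up sample records for illustration only.
logs = [
    CallLog("model-a", "ticket-summary", 3, "ticket text", "summary", 820.0, 410, 60, rating=4),
    CallLog("model-a", "ticket-summary", 3, "ticket text", "summary", 640.0, 395, 55, rating=5),
    CallLog("model-b", "ticket-summary", 3, "ticket text", "summary", 300.0, 400, 58),
]
print(logs[0].to_json())
print(summarize(logs)["model-a"])  # {'calls': 2, 'median_latency_ms': 730.0, 'mean_rating': 4.5}
```

Keeping `prompt_id` and `prompt_version` on every record lets metrics be compared across prompt versions as well as across models.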

Use Cases

- Prompt engineering and experimentation across multiple models

- Comparing model responses for consistency, bias, and quality

- Scalable prompt management for large organizations

- Cost-aware routing to the most economical model per task

- Auditable prompt history with versioned deployments

- Integrations with existing AI stacks (LangChain, LlamaIndex, etc.)
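Auditable prompt history with versioned deployments usually relies on two ideas. Versions are append-only, and deploying or rolling back only moves a pointer. The minimal in-memory sketch below illustrates that idea under stated assumptions. `PromptRegistry` and its methods are hypothetical names, not Trainkore's API. The rollback policy of stepping back one version number is a simplification chosen for brevity.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class PromptVersion:
    version: int
    template: str
    author: str
    created_at: datetime


class PromptRegistry:
    """Hypothetical in-memory prompt store. Versions are append-only and deploying
    only moves a pointer, so nothing is overwritten and every change is audited."""

    def __init__(self) -> None:
        self._versions: dict[str, list[PromptVersion]] = {}
        self._deployed: dict[str, int] = {}
        self.audit_log: list[str] = []

    def publish(self, name: str, template: str, author: str) -> int:
        history = self._versions.setdefault(name, [])
        version = len(history) + 1
        history.append(PromptVersion(version, template, author, datetime.now(timezone.utc)))
        self.audit_log.append(f"{author} published {name} v{version}")
        return version

    def deploy(self, name: str, version: int, actor: str) -> None:
        if not 1 <= version <= len(self._versions.get(name, [])):
            raise ValueError(f"{name} has no version {version}")
        previous = self._deployed.get(name)
        self._deployed[name] = version
        was = f"v{previous}" if previous is not None else "nothing"
        self.audit_log.append(f"{actor} deployed {name} v{version} (replacing {was})")

    def rollback(self, name: str, actor: str) -> int:
        # Simplest policy: step back one version number. A real system might
        # instead restore whichever version was deployed before the current one.
        current = self._deployed[name]
        if current == 1:
            raise ValueError(f"{name} is already at its first version")
        self.deploy(name, current - 1, actor)
        return current - 1

    def render(self, name: str, **variables: str) -> str:
        # Fill the currently deployed template's str.format placeholders.
        template = self._versions[name][self._deployed[name] - 1].template
        return template.format(**variables)


registry = PromptRegistry()
registry.publish("ticket-summary", "Summarize: {ticket}", "alice")
registry.publish("ticket-summary", "Summarize in two sentences: {ticket}", "bob")
registry.deploy("ticket-summary", 2, "bob")
registry.rollback("ticket-summary", "alice")
print(registry.render("ticket-summary", ticket="Printer is offline"))  # Summarize: Printer is offline
for entry in registry.audit_log:
    print(entry)
```

After the rollback, version 2 still exists and can be redeployed. The audit log records who published and deployed each version, which is the property the "auditable prompt history" use case depends on.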

Safety and Compliance

- Centralized visibility helps enforce governance, auditing, and compliance for prompts and model usage.

- Use recommended governance practices when deploying prompts to production.

Core Features

- Auto Prompt Generation for any model

- Model Switching to the best model automatically based on prompts and usage

- Observability Suite with metrics, logs, and detailed debugging

- Prompt Versioning across multiple model providers

- Built-in Playground for rapid experimentation

- Natively integrated with LangChain, LlamaIndex, and more

- Supports standard LLMs and user-provided models

- Cost optimization: lower prompt-generation and model-switching costs