16x Prompt

Introduction

Streamlined platform for coding prompts.

16x Prompt Product Information

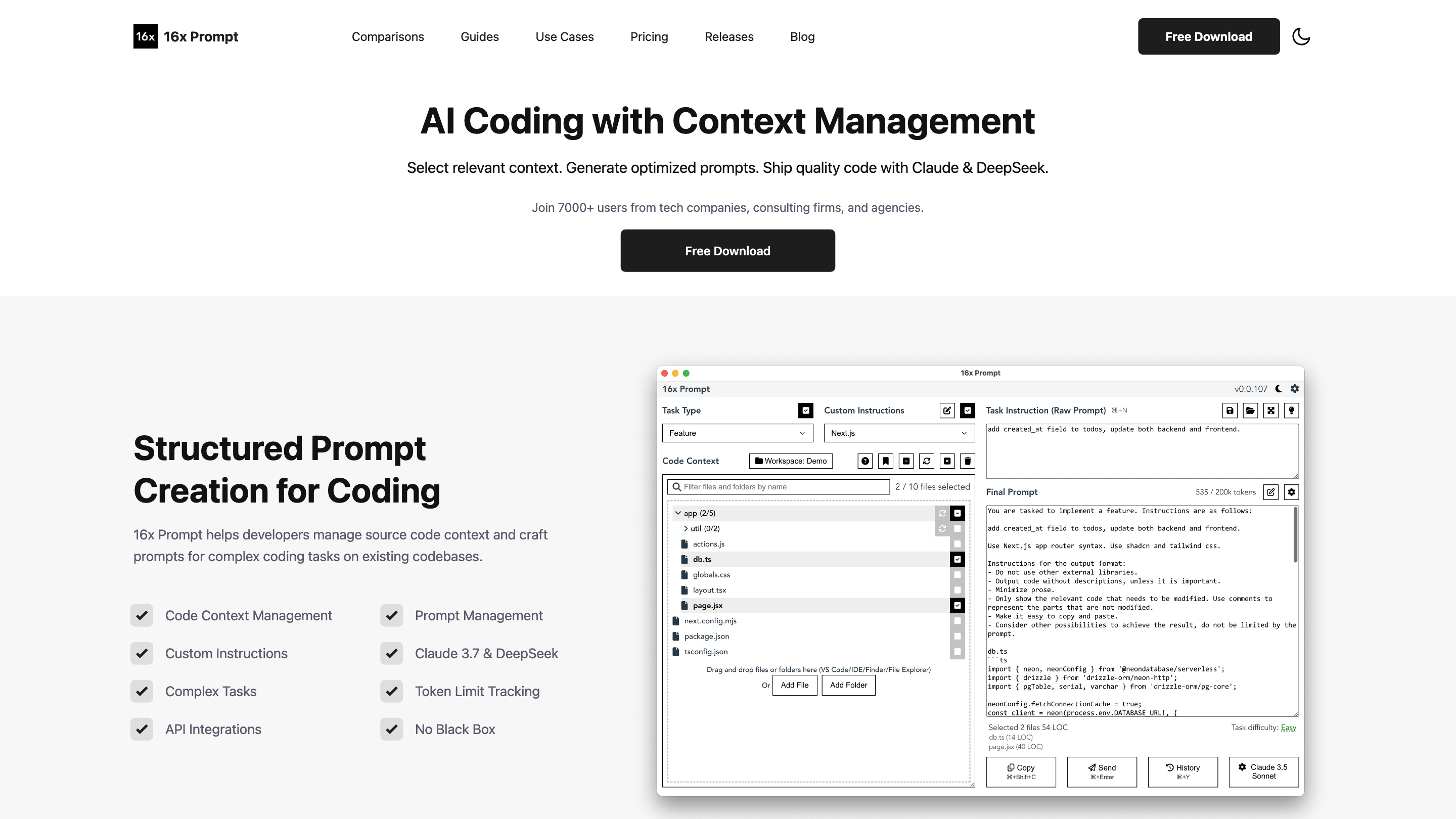

16x Prompt (AI Coding with Advanced Context Management) is an AI-assisted coding tool that helps developers manage source code context and write optimized prompts for complex coding tasks in existing codebases. It integrates with the APIs of multiple providers, shows LLM responses side by side for comparison, and generates prompts from code, task instructions, and formatting preferences. The application runs locally and emphasizes prompt customization and a structured workflow, with the aim of improving coding efficiency for teams and individual developers.

Key Capabilities

- Context Management for multiple repositories and projects in one place

- Prompt Creation from task instructions, source code files, and formatting instructions

- Prompt Optimization and formatting tailored to specific tech stacks (e.g., Next.js, Python, SQL)

- Task templates and reusable prompts for common coding activities (refactoring, writing docs, page creation)

- API integrations with major providers (OpenAI, Anthropic Claude, Google Gemini, DeepSeek, OpenRouter, Ollama, Azure OpenAI) and with other providers that expose an OpenAI-compatible API

- Compare model outputs side-by-side (e.g., GPT-4o vs Claude 3.7 Sonnet) to pick the best fit for a task

- Local data processing with privacy-focused design (runs on your machine; supports local prompts and code generation)

- Workspace organization to manage multiple repositories and related projects in a single view

- Source code-aware prompt generation that auto-includes formatting instructions and optimizations

- Toggleable models and compatibility with third-party and self-hosted options (e.g., Ollama, Azure, OpenRouter)

How It Works

- Import or reference source code files from your repositories.

- Create or select task instructions and specify desired formatting and target tech stack.

- Generate a final prompt that combines the task, code context, and formatting rules.

- Send the prompt to your chosen LLM (via built-in integration or your own API key) and receive code-ready responses.

- Review, refine, and reuse prompts across projects using saved workspaces and templates.
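The prompt-assembly step above can be sketched in a few lines of Python. This is a minimal illustration, not 16x Prompt's actual prompt format: the `SourceFile` class, the `build_prompt` function, and the section labels ("Task", "File", "Formatting instructions") are all hypothetical names chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class SourceFile:
    """A source file selected as context (hypothetical helper type)."""
    path: str
    content: str


def build_prompt(task: str, files: list[SourceFile], formatting: str) -> str:
    """Combine the task, code context, and formatting rules into one prompt.

    The section layout here is an assumption made for illustration only.
    """
    sections = [f"Task:\n{task.strip()}"]
    for f in files:
        # Each file is labeled with its path so the model can refer to it.
        sections.append(f"File: {f.path}\n{f.content.rstrip()}")
    if formatting.strip():
        sections.append(f"Formatting instructions:\n{formatting.strip()}")
    return "\n\n".join(sections)


prompt = build_prompt(
    task="Add input validation to the signup handler.",
    files=[SourceFile("app/signup.py", "def signup(email):\n    return email\n")],
    formatting="Return only the modified functions.",
)
print(prompt)
```

Keeping the task, the code context, and the formatting rules as separate inputs is what makes the result reusable: the same task template can be paired with different files, or the same files with a different formatting preference.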

Core Features

- Local, privacy-conscious operation: runs on your machine with no mandatory cloud data storage

- Multi-repo and multi-project workspaces for organized context management

- Prompt creation from task instructions, source code, and formatting guidelines

- Prompt optimization with customization options for different programming languages and frameworks

- Reusable templates for common coding workflows (refactoring, docs, page creation, etc.)

- Integrated API key management for OpenAI, Claude, and other compatible providers

- Side-by-side model comparison to evaluate responses from multiple LLMs

- Language and framework support spanning Python, JavaScript/TypeScript, Java, C++, SQL, React, Vue, Next.js, Flask, Django, and more

- Local data privacy: no requirement to upload data to external servers

- Cross-provider compatibility through OpenAI-format prompts and adapters

- Flexible licensing and enterprise options (for teams and organizations)
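As a rough illustration of the side-by-side comparison feature listed above, the sketch below sends one prompt to several model callables and collects the outputs by name. It does not reflect how 16x Prompt implements comparison: the `compare` function and the two stub models are hypothetical stand-ins, and in practice each callable would wrap a real provider API call.

```python
from typing import Callable


def compare(prompt: str, models: dict[str, Callable[[str], str]]) -> dict[str, str]:
    """Run the same prompt through every model and collect outputs by name."""
    results: dict[str, str] = {}
    for name, call in models.items():
        try:
            results[name] = call(prompt)
        except Exception as exc:
            # One failing provider should not hide the other responses.
            results[name] = f"[error: {exc}]"
    return results


# Stub models for illustration only; real ones would call a provider API.
def stub_model_a(prompt: str) -> str:
    return f"A: {len(prompt)} chars received"


def stub_model_b(prompt: str) -> str:
    return f"B: {prompt.upper()}"


results = compare("refactor foo()", {"model-a": stub_model_a, "model-b": stub_model_b})
for name, output in results.items():
    print(f"{name:10} | {output}")
```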

API Integrations and Providers

- OpenAI

- Anthropic Claude

- Google Gemini

- DeepSeek

- OpenRouter

- Ollama

- Azure OpenAI

- Additional providers that offer OpenAI-compatible APIs
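The reason one tool can talk to many providers is that an OpenAI-compatible API accepts the same request shape at a different base URL. The sketch below builds such a request with Python's standard library without sending it. The `chat_request` helper, the model name, and the API key are placeholders, and the base URL shown is an assumption to check against the provider's own documentation.

```python
import json
import urllib.request


def chat_request(base_url: str, api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-format chat completion request."""
    body = json.dumps({
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }).encode("utf-8")
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=body,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


# Assumed base URL for a local Ollama server's OpenAI-compatible endpoint;
# the model name and key are placeholders.
req = chat_request("http://localhost:11434/v1", "placeholder-key", "example-model", "Explain this function.")
print(req.get_method(), req.full_url)
```

Switching providers then amounts to changing `base_url`, `api_key`, and `model`; passing the request to `urllib.request.urlopen` would send it.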

Target Users and Use Cases

- Developers who need structured prompts to turn high-level instructions into code

- Teams that manage multiple codebases and need centralized context management

- Organizations seeking private, offline-capable coding workflows

- Professionals comparing model outputs to select the best-performing AI for coding tasks

Pricing and Licensing

- Free to download and use with some usage limitations; licenses available for higher usage and enterprise needs

- Local install with optional enterprise licenses and white-label arrangements

Safety and Compliance Considerations

- Promotes local processing to protect IP and sensitive code

- Encourages best practices for prompt reuse and model selection to improve reliability and reduce risk