Berri


Introduction

Rapidly create apps with data integration and a shareable web app, using a low-code/no-code interface backed by best-in-class support.

Berri Product Information

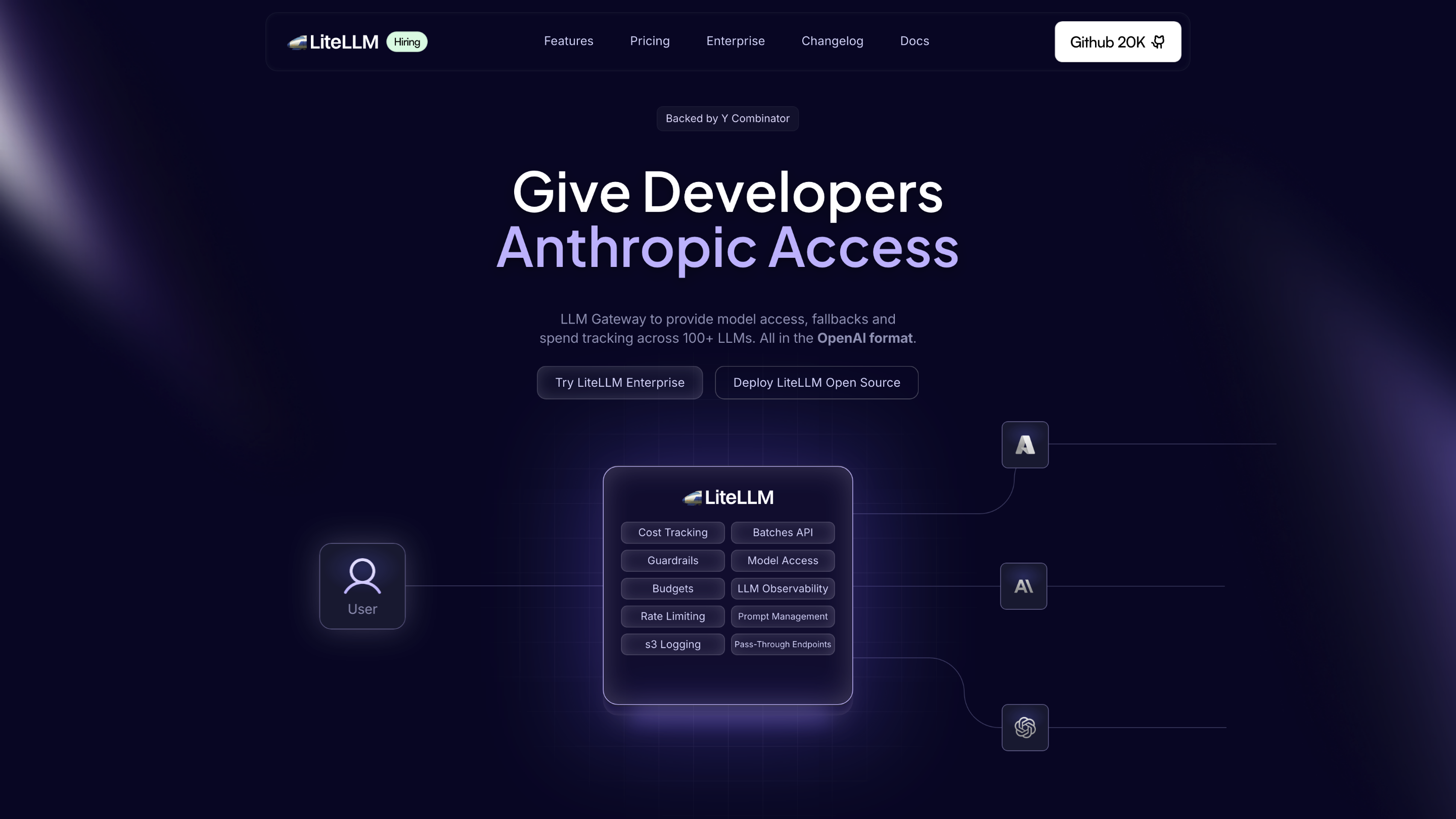

LiteLLM is a platform that simplifies model access, spend tracking, and fallbacks across 100+ LLM providers in an OpenAI-compatible format. It offers both Open Source and Enterprise deployments, with tooling to manage developer access, budgets, rate limits, and observability, while enabling seamless integration with major cloud and AI providers (OpenAI, Azure, Bedrock, GCP, etc.). The solution is designed for platform teams to securely provision LLM access to many developers and projects, with robust cost tracking, governance, and fallback mechanisms.

How LiteLLM Works

- Provides a unified gateway to 100+ LLM providers in the OpenAI-compatible format.

- Centralizes spend tracking, budgets, and rate limits across providers.

- Enables per-key/user/team/org cost attribution and log-based observability.

- Accepts OpenAI-format prompts and can convert them into the prompt formats expected by other hosted models (for example, Hugging Face models).

- Delivers LLM access via deployment modes suitable for OSS or Enterprise environments (cloud or self-hosted).

- Includes governance features such as prompt management, guardrails, and audit-ready logging.
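The unified-gateway idea above can be illustrated with a small offline sketch. It assumes that requests arrive in the OpenAI chat format (a model string plus a list of `{"role", "content"}` messages) and that the provider is selected from a `provider/model` prefix. LiteLLM's own model strings commonly use prefixes such as `azure/...` or `bedrock/...`, but `route_completion`, `ADAPTERS`, and the stub adapters here are hypothetical. They are not LiteLLM's API, and real adapters would call each provider's native endpoint.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# An OpenAI-style chat message, e.g. {"role": "user", "content": "hi"}.
Message = Dict[str, str]


@dataclass
class ChatResponse:
    provider: str
    model: str
    content: str


Adapter = Callable[[str, List[Message]], ChatResponse]


def _fake_adapter(provider: str) -> Adapter:
    """Hypothetical stub: a real adapter would translate the OpenAI-format
    request into the provider's native API call. This one just echoes the
    last message so the routing logic can run offline."""
    def call(model: str, messages: List[Message]) -> ChatResponse:
        return ChatResponse(provider, model, f"[{provider}] {messages[-1]['content']}")
    return call


# Hypothetical registry of provider adapters keyed by prefix.
ADAPTERS: Dict[str, Adapter] = {
    name: _fake_adapter(name) for name in ("openai", "azure", "bedrock")
}


def route_completion(model: str, messages: List[Message]) -> ChatResponse:
    """Dispatch one OpenAI-format request based on a 'provider/model' string.

    A bare model name (no slash) is assumed to be an OpenAI model.
    """
    provider, sep, name = model.partition("/")
    if not sep:
        provider, name = "openai", model
    adapter = ADAPTERS.get(provider)
    if adapter is None:
        raise ValueError(f"unknown provider: {provider}")
    return adapter(name, messages)
```

Because every caller sends the same request shape, supporting a new provider in this sketch means registering one more adapter. Application code does not change.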

Features

- OpenAI-compatible LLM access across 100+ providers (OpenAI, Azure, Bedrock, GCP, etc.).

- Spend Tracking with tag-based attribution to key/user/team/org and automatic logging to S3/GCS/etc.

- Budgets and Rate Limits to enforce usage boundaries and cost control.

- LLM Fallbacks to maintain availability by switching to another provider or model when a request fails.

- Tightly integrated logging and observability (Langfuse, OTEL, Prometheus, etc.).

- Prompt Management and prompt formatting support for various models.

- Enterprise features: JWT Auth, SSO, audit logs, custom SLAs, and on-prem/self-hosted options.

- OSS and Cloud deployment options for flexible hosting.

- Easy-to-use gateway/proxy layer to simplify developer access across many models.

- Cost attribution down to keys/users/teams/orgs with automatic spend reporting.
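The fallback feature can be pictured as an ordered list of models tried in turn. The following is a minimal sketch, not LiteLLM's implementation. It assumes that provider failures (timeouts, rate limits, outages) surface as a hypothetical `ProviderError`, and that the actual provider call is injected as a function, so the example runs without network access.

```python
from typing import Callable, Dict, List, Sequence, Tuple

Message = Dict[str, str]
# Hypothetical signature of a provider call: (model, messages) -> reply text.
CallFn = Callable[[str, List[Message]], str]


class ProviderError(Exception):
    """Hypothetical error raised on timeout, rate limit, or provider outage."""


def complete_with_fallbacks(
    models: Sequence[str],
    messages: List[Message],
    call: CallFn,
) -> Tuple[str, str]:
    """Try each model in order and return (model_used, reply) from the first success.

    Only ProviderError triggers a fallback; any other exception propagates,
    so bugs in the request itself are not masked by retrying elsewhere.
    """
    errors: List[str] = []
    for model in models:
        try:
            return model, call(model, messages)
        except ProviderError as exc:
            errors.append(f"{model}: {exc}")
    raise RuntimeError("all models failed: " + "; ".join(errors))
```

For example, with a stub `call` that raises `ProviderError("429 rate limited")` for any `azure/...` model, passing `["azure/gpt-4o", "openai/gpt-4o"]` returns the reply from `openai/gpt-4o`. Collecting every failure message makes it visible in logs why the last resort was reached.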

How to Get Started

- Choose Open Source or Enterprise deployment.

- Connect your LLM providers (OpenAI, Azure, Bedrock, etc.).

- Define access policies for developers and teams.

- Enable spend tracking and budgets, then monitor usage via the dashboards.

- Use the gateway to route prompts to the appropriate model/provider with uniform telemetry.
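The "define access policies" step could look roughly like the following. It is a hypothetical sketch, not LiteLLM's key-management API. Each issued API key belongs to a user and a team, each team has an allow-list of models, and the gateway checks the key before routing a request. The class and method names (`AccessPolicy`, `issue_key`, `authorize`) are illustrative only.

```python
from dataclasses import dataclass, field
from typing import Dict, Set


@dataclass
class Team:
    name: str
    allowed_models: Set[str] = field(default_factory=set)


@dataclass
class ApiKey:
    key: str
    user: str
    team: str


class AccessPolicy:
    """Hypothetical key -> team -> allowed-models check run before routing."""

    def __init__(self) -> None:
        self.teams: Dict[str, Team] = {}
        self.keys: Dict[str, ApiKey] = {}

    def add_team(self, name: str, models: Set[str]) -> None:
        self.teams[name] = Team(name, set(models))

    def issue_key(self, key: str, user: str, team: str) -> None:
        if team not in self.teams:
            raise KeyError(f"unknown team: {team}")
        self.keys[key] = ApiKey(key, user, team)

    def authorize(self, key: str, model: str) -> ApiKey:
        """Return the key record if it may call `model`, else raise PermissionError."""
        record = self.keys.get(key)
        if record is None:
            raise PermissionError("invalid key")
        if model not in self.teams[record.team].allowed_models:
            raise PermissionError(f"team {record.team!r} may not use {model!r}")
        return record
```

Returning the key record from `authorize` means the user and team are known for every accepted request. Those identities are what per-key, per-user, and per-team spend tracking need downstream.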

Use Cases

- Large organizations needing Day 0 access to the latest LLMs for multiple teams.

- Fintech, insurance, and consumer apps requiring standardized LLM access, cost controls, and governance.

- Teams needing quick onboarding of new models without reworking input/output handling.

Safety and Compliance

- Enterprise-grade authentication, auditing, and compliance features.

- Clear separation of developer access from production data with robust logging.

- Cost governance to prevent unexpected spend and ensure budgets are respected.
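The following sketch shows how budget and rate-limit enforcement might combine at the gateway. It assumes a per-key budget in USD and a requests-per-minute cap over a 60-second sliding window. `UsageGuard`, `BudgetExceeded`, and `RateLimited` are hypothetical names rather than LiteLLM's API, and the clock is injectable so the behavior can be tested deterministically.

```python
import time
from collections import defaultdict, deque
from typing import Callable, Deque, Dict


class BudgetExceeded(Exception):
    pass


class RateLimited(Exception):
    pass


class UsageGuard:
    """Hypothetical per-key budget (USD) plus requests-per-minute limit.

    check() runs before a request is forwarded; record_spend() runs after
    the provider responds and the request's cost is known.
    """

    def __init__(self, max_budget: float, rpm: int,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_budget = max_budget
        self.rpm = rpm
        self.clock = clock
        self.spend: Dict[str, float] = defaultdict(float)
        self.recent: Dict[str, Deque[float]] = defaultdict(deque)

    def check(self, key: str) -> None:
        if self.spend[key] >= self.max_budget:
            raise BudgetExceeded(
                f"{key} spent {self.spend[key]:.2f} of {self.max_budget:.2f} USD")
        now = self.clock()
        window = self.recent[key]
        # Sliding window: forget request timestamps 60 s or older.
        while window and now - window[0] >= 60:
            window.popleft()
        if len(window) >= self.rpm:
            raise RateLimited(f"{key} exceeded {self.rpm} requests/minute")
        window.append(now)

    def record_spend(self, key: str, cost: float) -> None:
        self.spend[key] += cost
```

One design consequence is worth noting. Cost is known only after a response, so this style of budget is a soft cap: the request that crosses the limit is allowed, and only later requests are rejected.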

Core Benefits

- Centralized, compliant LLM access across many providers.

- Fine-grained cost attribution and spend visibility.

- Robust guardrails and prompt management to maintain quality and safety.

- Flexible deployment options (OSS or Enterprise, cloud or self-hosted).

Pricing & Plans

- Open Source: Free with OSS features.

- Enterprise: Custom pricing with SLAs, security, and support.

Testimonials (illustrative)

- Netflix uses LiteLLM to give developers Day 0 LLM access, reducing time-to-release and avoiding cross-provider data transformation.

- Lemonade highlights streamlined management of multiple LLMs and reduced operational complexity.

- RocketMoney notes standardized logging and authentication across models, enabling rapid adaptation to changing demands.

Related Resources

- LiteLLM Product

- LiteLLM Python SDK

- LiteLLM Gateway (Proxy)

- Documentation

- Getting Started guides

How It Works (Summary)

- Attribute cost to key/user/team/org and automatically track spend across providers.

- Provide OpenAI-compatible access with a gateway that handles routing and observability.

- Offer guardrails, budgets, and fallback mechanisms to ensure reliable, cost-controlled LLM usage.
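Cost attribution to key, user, team, and org can be understood as pricing each logged request and summing along every dimension. The sketch below makes several assumptions: a log record per request carrying token counts and optional tags, plus a hypothetical price table per million tokens. The model names and prices are placeholders, not real provider rates, and `UsageLog`, `request_cost`, and `spend_report` are illustrative names.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

# Hypothetical USD prices per 1M tokens as (input, output); placeholders only.
PRICES_PER_MTOK: Dict[str, Tuple[float, float]] = {
    "model-a": (2.50, 10.00),
    "model-b": (0.25, 1.25),
}


@dataclass
class UsageLog:
    key: str
    user: str
    team: str
    org: str
    model: str
    prompt_tokens: int
    completion_tokens: int
    tags: List[str] = field(default_factory=list)


def request_cost(log: UsageLog) -> float:
    """Cost of one request = input tokens * input price + output tokens * output price."""
    in_price, out_price = PRICES_PER_MTOK[log.model]
    return (log.prompt_tokens * in_price + log.completion_tokens * out_price) / 1_000_000


def spend_report(logs: List[UsageLog]) -> Dict[str, Dict[str, float]]:
    """Aggregate per-request costs by key, user, team, org, and tag."""
    report: Dict[str, Dict[str, float]] = {
        dim: defaultdict(float) for dim in ("key", "user", "team", "org", "tag")
    }
    for log in logs:
        cost = request_cost(log)
        report["key"][log.key] += cost
        report["user"][log.user] += cost
        report["team"][log.team] += cost
        report["org"][log.org] += cost
        for tag in log.tags:
            report["tag"][tag] += cost
    return report
```

With these placeholder prices, a `model-a` request with 1,000 prompt tokens and 500 completion tokens costs (1000 × 2.50 + 500 × 10.00) / 1,000,000 = 0.0075 USD. Each request's cost is added once per dimension, so team and org totals always equal the sum of their members' requests. A request carrying several tags is counted under each of them.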