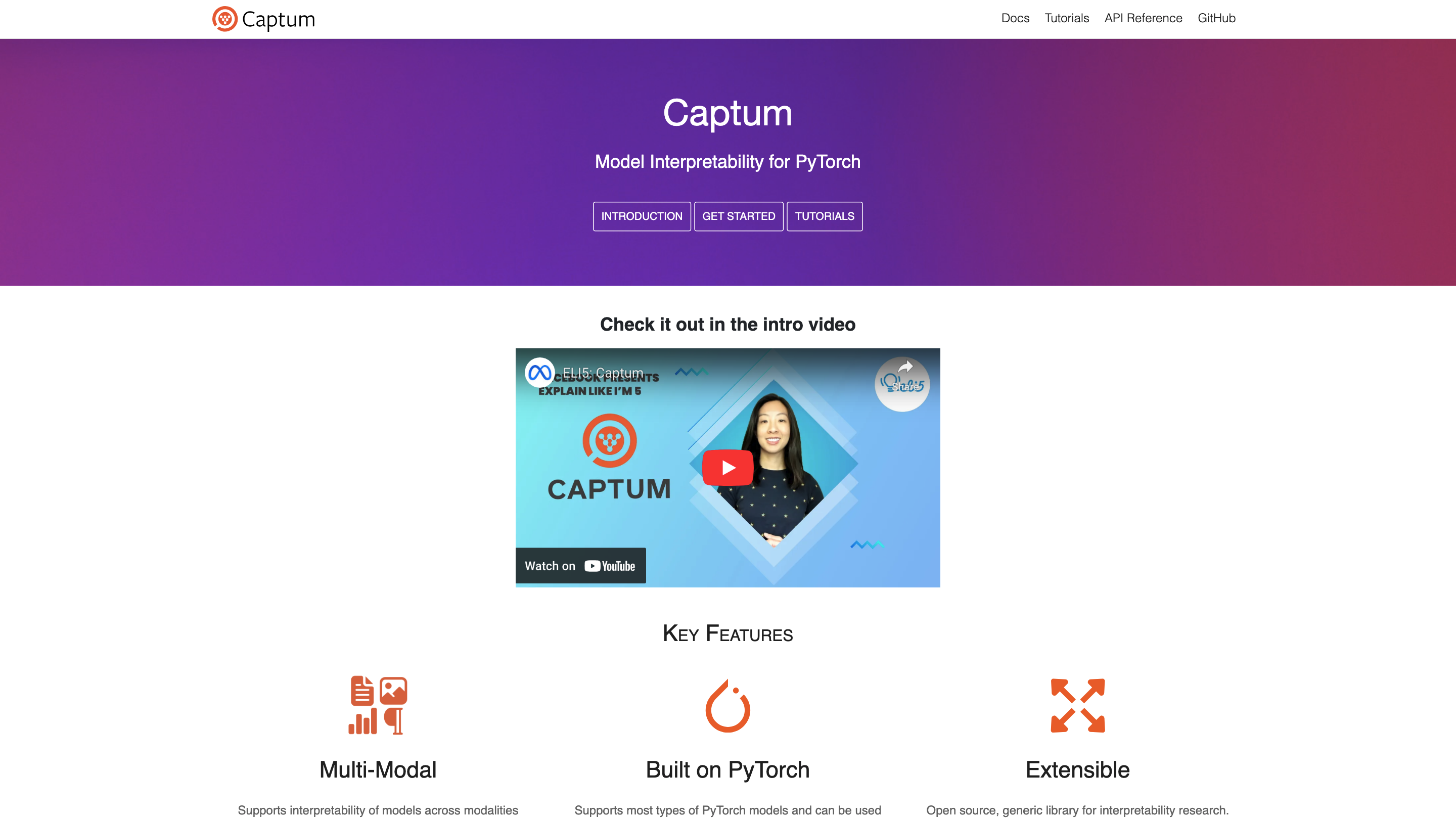

Captum · Model Interpretability for PyTorch

Interpret models in PyTorch

Captum · Model Interpretability for PyTorch Product Information

Captum is an open-source, generic library for model interpretability research in PyTorch. It helps researchers and engineers understand and benchmark neural network predictions across modalities such as vision and text. Captum supports most PyTorch models with minimal modification and provides a flexible API for implementing and evaluating attribution algorithms. The project emphasizes extensibility, reproducibility, and easy integration into existing PyTorch workflows.

Overview

- Multi-Modal interpretability: supports interpretability across different data modalities (e.g., vision, text).

- PyTorch-based: designed to work seamlessly with PyTorch models and workflows.

- Extensible and open source: generic library that enables researchers to implement and benchmark new attribution algorithms.

- Reproducible examples: includes tutorials and runnable code snippets to help users get started quickly.

How to Get Started

- Install Captum (recommended via conda):

conda install captum -c pytorch

- or via pip:

pip install captum

- Create a PyTorch model and switch it to evaluation mode with model.eval(). A small feed-forward network works for a first experiment, as does any custom model.

- Define input and baseline tensors to compare model outputs against a baseline (e.g., zeros).

- Choose and apply an attribution algorithm (e.g., Integrated Gradients) to compute attributions.

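- Inspect the results to see which features contributed most to the prediction, and check the convergence delta: the gap between the sum of the attributions and the difference in model output between the input and the baseline. A delta close to zero indicates a good approximation.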
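To make the idea of attributions and convergence concrete, here is a minimal pure-Python sketch of what Integrated Gradients computes. It is not Captum's implementation: the toy function `f`, its hand-written gradient `grad_f`, and the midpoint-rule integration are all assumptions chosen for illustration (Captum uses a different integration rule by default). The delta is computed here as the sum of attributions minus `f(input) - f(baseline)`.

```python
def f(x):
    # Hypothetical toy "model": a smooth scalar function of three features.
    return x[0] ** 2 * x[1] + 3.0 * x[2]


def grad_f(x):
    # Analytic gradient of f with respect to each feature.
    return [2.0 * x[0] * x[1], x[0] ** 2, 3.0]


def integrated_gradients(x, baseline, n_steps):
    """Approximate Integrated Gradients with a midpoint Riemann sum."""
    diffs = [xi - bi for xi, bi in zip(x, baseline)]
    avg_grad = [0.0] * len(x)
    for k in range(n_steps):
        # Point on the straight-line path from baseline to input.
        alpha = (k + 0.5) / n_steps
        point = [bi + alpha * d for bi, d in zip(baseline, diffs)]
        grad = grad_f(point)
        avg_grad = [a + g / n_steps for a, g in zip(avg_grad, grad)]
    # Attribution = (input - baseline) * average gradient along the path.
    attributions = [d * a for d, a in zip(diffs, avg_grad)]
    # Convergence delta: how far the attributions are from summing
    # to the change in output between baseline and input.
    delta = sum(attributions) - (f(x) - f(baseline))
    return attributions, delta


x = [1.0, 2.0, 0.5]
baseline = [0.0, 0.0, 0.0]
for n_steps in (2, 10, 50):
    attributions, delta = integrated_gradients(x, baseline, n_steps)
    print(n_steps, attributions, delta)
```

In this sketch the magnitude of the delta shrinks as `n_steps` grows, which is the behavior to look for when checking whether an attribution has converged.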

Example (Integrated Gradients)

- Create a toy model and set it to eval mode.

- Fix randomness for deterministic results.

- Define input and baseline tensors.

- Instantiate the attribution algorithm (e.g., IntegratedGradients).

- Compute attributions and convergence delta.

- Print or visualize the results.

Tutorials and Docs

- Introduction

- Getting Started

- Tutorials

- API Reference

- Community and license: © 2025 Facebook Inc.

Core Features

- PyTorch-based interpretability: integrates with existing PyTorch models and training code

- Supports multiple attribution algorithms (e.g., Integrated Gradients, Saliency, DeepLift) that attribute predictions to input features

- Works with various modalities (vision, text, etc.)

- Deterministic workflows with seed control for reproducible results

- Lightweight API designed for easy experimentation and benchmarking of new methods

- Comprehensive tutorials and API reference for quick onboarding

- Open-source and extensible: easily implement and benchmark new attribution methods