ClearML

Introduction

Develop, integrate, ship, and improve machine learning models at any scale

ClearML Product Information

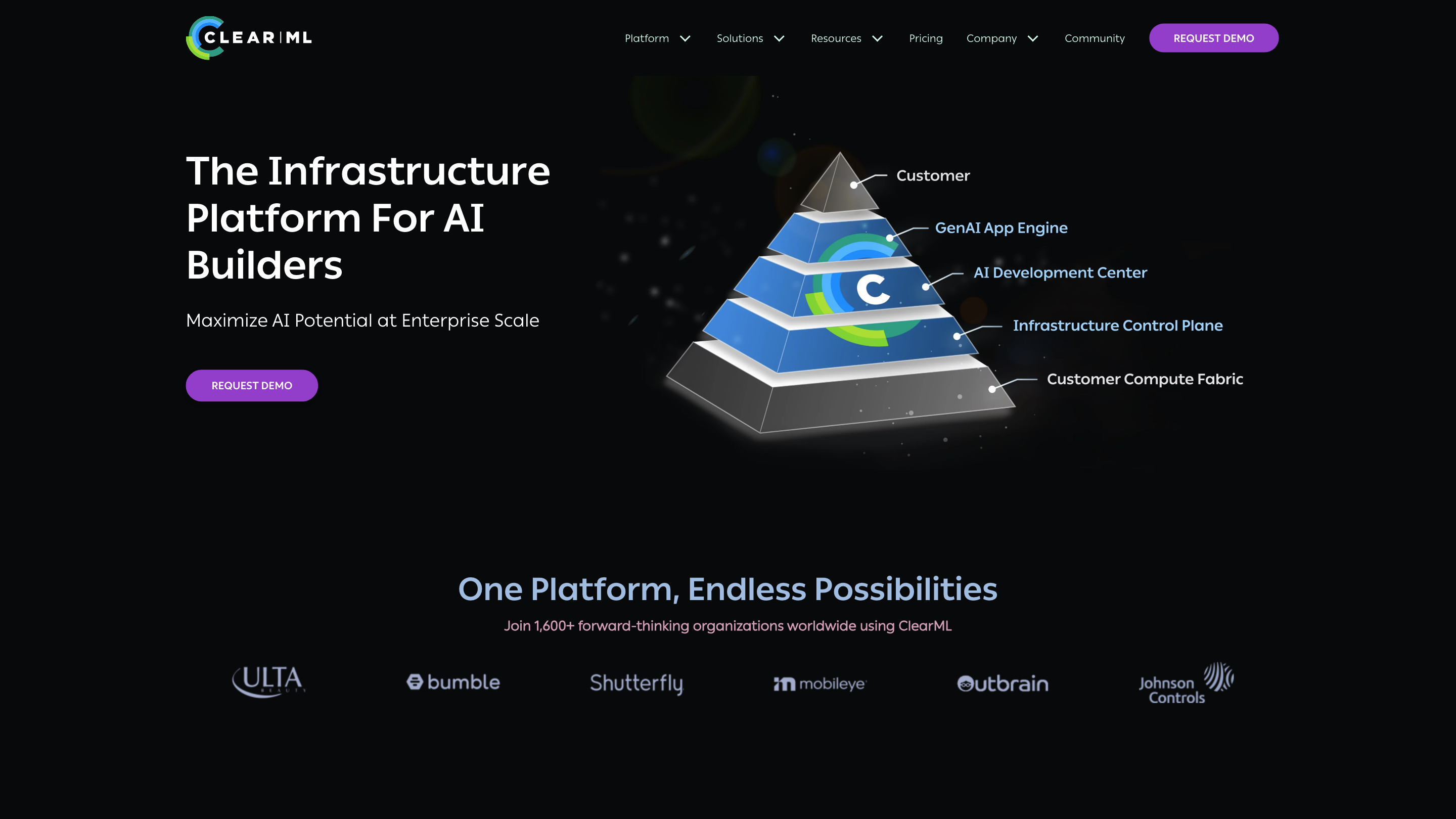

ClearML AI Infrastructure Platform is an end-to-end solution that unifies the development, deployment, and management of AI workloads at enterprise scale. It is organized as a three-layer stack: the Infrastructure Control Plane, which manages GPU resources across on‑premises, cloud, and hybrid environments; the AI Development Center, which provides a cloud-like IDE and MLOps tooling; and the GenAI App Engine, which handles GenAI deployment with secure, scalable LLM hosting. Across all three layers, the platform emphasizes multi-tenancy, access control, cost optimization, and streamlined workflows from model development to production deployment.

How ClearML Works

- Infrastructure Control Plane connects and manages GPU clusters across environments, offering multi-tenant access, quota management, and dynamic fractional GPUs. It enables one‑click GPU as a Service (GPUaaS) and policy-driven scheduling to maximize compute utilization while controlling costs.

- AI Development Center provides an integrated development environment (IDE) with data integration, monitoring, automation, dashboards, pipelines, model repository, and CI/CD integration for rapid experimentation and deployment.

- GenAI App Engine lets you deploy GenAI workloads, expose secure LLM APIs, and iterate on GenAI projects with built‑in access control and monitoring. It supports using custom models or fine-tuning off‑the‑shelf LLMs, with tooling that simplifies data ingestion, vector DB creation, and feedback collection.

The platform is silicon-, cloud-, and vendor-agnostic, and it aims to help IT teams deliver multi-tenant, scalable AI workflows with predictable ROI.
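To make the Infrastructure Control Plane's quota management, fractional GPUs, and policy-driven scheduling more concrete, here is a minimal Python sketch of priority-ordered admission under per-tenant quotas. It is an illustration only and does not use or represent ClearML's API: the `Job` class, the `schedule` function, the tenant names, and all quota and capacity figures are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Job:
    # All fields are hypothetical and chosen for illustration.
    name: str
    tenant: str
    gpus: float    # requested GPUs; fractions such as 0.5 are allowed
    priority: int  # larger value is considered first


def schedule(jobs, quotas, capacity):
    """Admit jobs in priority order while respecting tenant quotas.

    Returns a pair (admitted_names, queued_names).
    """
    used = {tenant: 0.0 for tenant in quotas}
    free = capacity
    admitted, queued = [], []
    # sorted() is stable, so jobs with equal priority keep submission order.
    for job in sorted(jobs, key=lambda j: -j.priority):
        tenant_used = used.get(job.tenant, 0.0)
        # A tenant with no configured quota gets a quota of zero.
        within_quota = tenant_used + job.gpus <= quotas.get(job.tenant, 0.0)
        if within_quota and job.gpus <= free:
            used[job.tenant] = tenant_used + job.gpus
            free -= job.gpus
            admitted.append(job.name)
        else:
            queued.append(job.name)
    return admitted, queued


jobs = [
    Job("train", "research", 1.0, priority=1),
    Job("notebook", "research", 0.5, priority=2),
    Job("serve", "prod", 0.5, priority=3),
    Job("sweep", "research", 0.5, priority=0),
]
quotas = {"research": 1.5, "prod": 1.0}
admitted, queued = schedule(jobs, quotas, capacity=2.0)
print(admitted, queued)
```

In this toy run, the `sweep` job stays queued because admitting it would push the `research` tenant past its 1.5-GPU quota. A production scheduler would also deal with preemption, cluster placement, and usage accounting, none of which this sketch attempts.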

Core Capabilities

- Unified control plane for on‑prem, cloud, and hybrid GPU resources

- Multi-tenant security with role-based access control and isolated networks/storage

- Dynamic, quota-based resource governance and fractional GPU provisioning

- One‑click GPUaaS and optimized scheduling across clusters

- Comprehensive AI development environment with data integration, monitoring, pipelines, and CI/CD

- Model repository, dashboards, automation, and self-serve compute

- GenAI App Engine for rapid deployment of secure LLM APIs

- Secure networking, authentication, and monitoring for GenAI workloads

- Flexible deployment options for enterprise-scale AI workloads

Feature Highlights

- Multi-tenant GPU resource management across on‑prem, cloud, and hybrid environments

- One‑click GPUaaS with dynamic fractional GPU provisioning

- Quota management and usage-based billing for cost control

- Priority-based, scalable job scheduling across diverse clusters

- Secure isolation of networks and storage per tenant

- Integrated AI Development Center with IDE, pipelines, and CI/CD

- Comprehensive model repository, monitoring, and dashboards

- GenAI App Engine for secure, scalable LLM deployment with built-in access control

- Silicon-, cloud-, and vendor-agnostic platform that fits existing infrastructure

- End-to-end workflow support from development to production

How It Helps

- Accelerates AI project delivery by consolidating infrastructure, development, and deployment tools

- Improves resource utilization and reduces total cost of ownership through centralized control and scheduling

- Enhances security and compliance with multi-tenant isolation and robust access controls

- Enables rapid GenAI experimentation and production with managed endpoints and monitoring

Suitable For

- Enterprises needing scalable, secure AI infrastructure across multi-cloud and on‑prem environments

- Teams requiring centralized governance, reproducible experiments, and scalable GenAI deployment

- IT and DevOps groups aiming to streamline AI workflows and optimize GPU usage