Clips AI

Introduction

Automatically create social media clips from long videos. Save time and increase social metrics.

Clips AI Product Information

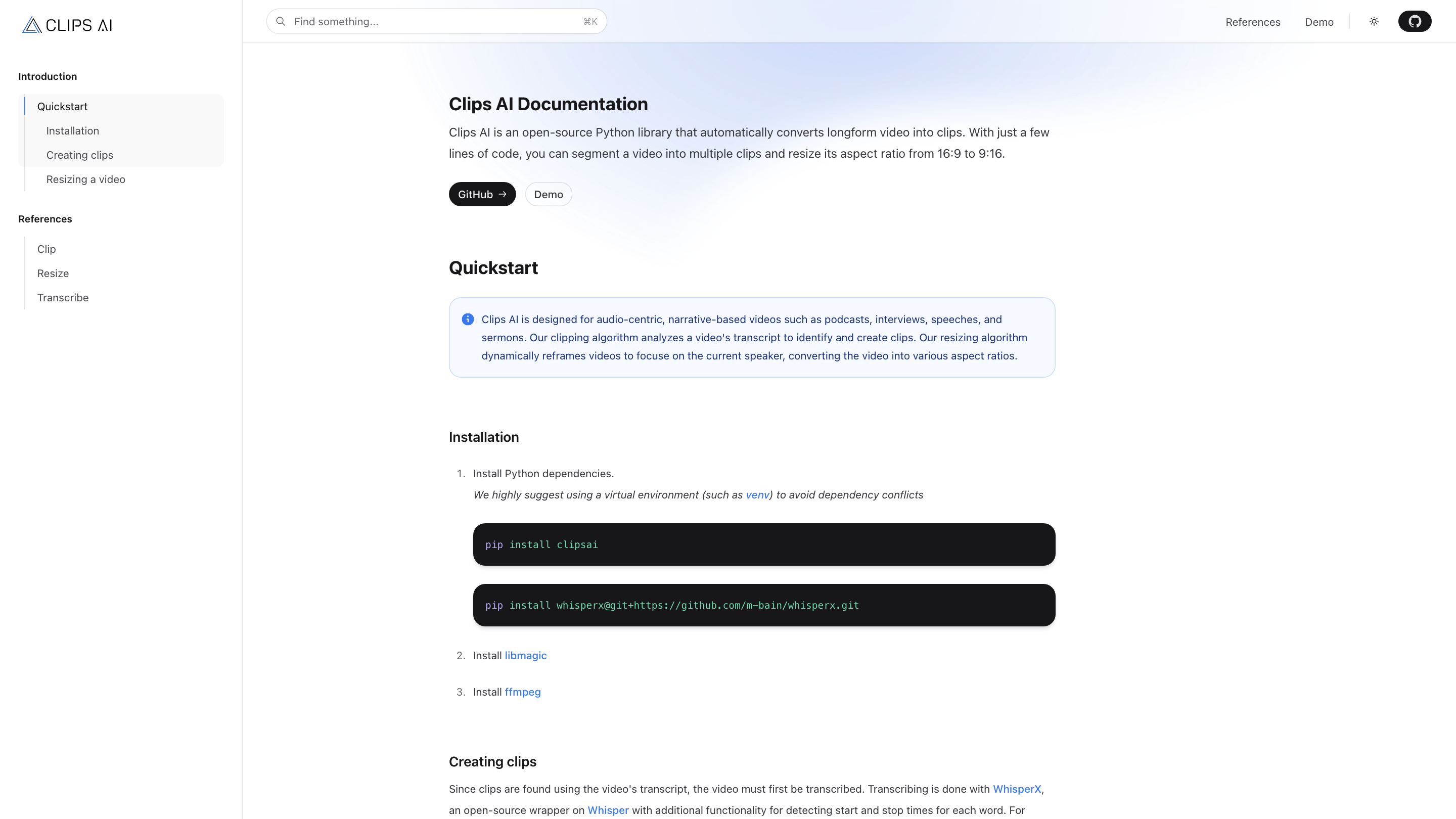

Clips AI is an open-source Python library for developers that automatically converts long-form video into clips. With a few lines of code, you can segment a video into multiple clips and resize it from 16:9 to 9:16. The library is designed for audio-centric, narrative-based videos such as podcasts, interviews, speeches, and sermons. Its clipping algorithm analyzes the video's transcript to identify clips, while its resizing algorithm dynamically reframes the video to keep the current speaker in view at the target aspect ratio.

Quickstart

- Install Python dependencies. We highly suggest using a virtual environment (such as venv) to avoid dependency conflicts.

- Install Clips AI:

pip install clipsai

pip install whisperx@git+https://github.com/m-bain/whisperx.git

- Install libmagic and FFmpeg.
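Before running anything, it can help to confirm that the pieces listed above are in place. The following is a minimal preflight sketch and is not part of Clips AI: `check_environment` is a hypothetical helper, and the assumption that libmagic is reached through the `magic` Python module should be checked against your own setup.

```python
import importlib.util
import shutil


def check_environment() -> dict:
    """Report which prerequisites appear to be available.

    Hypothetical helper for illustration; not part of Clips AI.
    """
    return {
        # FFmpeg is a command-line tool, so look for it on PATH.
        "ffmpeg": shutil.which("ffmpeg") is not None,
        # Python packages: check that they can be located without importing them.
        "clipsai": importlib.util.find_spec("clipsai") is not None,
        "whisperx": importlib.util.find_spec("whisperx") is not None,
        # Assumption: libmagic is used through the `magic` module.
        "magic": importlib.util.find_spec("magic") is not None,
    }


if __name__ == "__main__":
    for name, ok in check_environment().items():
        print(f"{name}: {'found' if ok else 'MISSING'}")
```

A missing entry only indicates that the package or binary could not be located in the current environment; it does not verify versions.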

How to Use

Creating clips

Because clips are found using the video's transcript, the video must be transcribed first. Transcription is done with WhisperX, an open-source wrapper around Whisper that adds start and stop times for each word.

- Example flow:

- Transcriber → transcribe(audio_file_path = "/abs/path/to/video.mp4")

- ClipFinder → find_clips(transcription = transcription)

- Access clip start_time and end_time from the resulting clips list
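Once clips are available, a common next step is to keep only those of a useful length and cut them out with FFmpeg. The sketch below assumes that `start_time` and `end_time` are expressed in seconds; `ClipSpan`, `select_clips`, `ffmpeg_trim_command`, and the duration thresholds are hypothetical and stand in for real clip objects so the example runs without the library installed.

```python
from dataclasses import dataclass


@dataclass
class ClipSpan:
    """Stand-in for a clip; only start_time and end_time (seconds) are used."""
    start_time: float
    end_time: float


def select_clips(clips, min_seconds=15.0, max_seconds=90.0):
    """Keep clips whose duration falls inside [min_seconds, max_seconds]."""
    return [
        c for c in clips
        if min_seconds <= c.end_time - c.start_time <= max_seconds
    ]


def ffmpeg_trim_command(src, clip, dst):
    """Build an FFmpeg command that re-encodes one clip into its own file."""
    return [
        "ffmpeg", "-y",
        "-i", src,
        "-ss", f"{clip.start_time:.3f}",  # start position in the source
        "-to", f"{clip.end_time:.3f}",    # stop position in the source
        "-c:v", "libx264", "-c:a", "aac",
        dst,
    ]


# Illustrative values only; real clips would come from find_clips().
clips = [ClipSpan(0.0, 8.0), ClipSpan(12.5, 47.0), ClipSpan(60.0, 400.0)]
for i, clip in enumerate(select_clips(clips)):
    cmd = ffmpeg_trim_command("/abs/path/to/video.mp4", clip, f"clip_{i}.mp4")
    print(" ".join(cmd))  # pass cmd to subprocess.run(cmd, check=True) to execute
```

Re-encoding is slower than stream copying but avoids cuts snapping to the nearest keyframe; which trade-off is right depends on the pipeline.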

Resizing a video

Resizing the original video to the desired aspect ratio relies on Pyannote for speaker diarization, which requires a Hugging Face access token. You won’t be charged for using Pyannote; instructions are available on the Pyannote Hugging Face page.

- Example flow:

- resize(video_file_path = "/abs/path/to/video.mp4", pyannote_auth_token = "pyannote_token", aspect_ratio = (9, 16))

- The function returns crops with segments that indicate how the video has been resized
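To make the aspect-ratio arithmetic concrete, here is a sketch of how the largest crop window for a target ratio can be computed from the source dimensions. It illustrates the geometry only and is not Clips AI's resizing code; `crop_size` is a hypothetical helper, and rounding down to even dimensions is an assumption made because common H.264 encoder settings expect even width and height.

```python
def crop_size(src_w: int, src_h: int, aspect_ratio: tuple) -> tuple:
    """Return (width, height) of the largest crop matching aspect_ratio."""
    ar_w, ar_h = aspect_ratio
    if src_w * ar_h >= src_h * ar_w:
        # Source is wider than the target: keep full height, narrow the width.
        h = src_h
        w = src_h * ar_w // ar_h
    else:
        # Source is taller than the target: keep full width, shorten the height.
        w = src_w
        h = src_w * ar_h // ar_w
    # Round down to even numbers (assumption, see lead-in).
    return w - w % 2, h - h % 2


print(crop_size(1920, 1080, (9, 16)))  # (606, 1080)
print(crop_size(1920, 1080, (1, 1)))   # (1080, 1080)
```

For a 1920x1080 source, a 9:16 crop keeps roughly a third of the frame width, which is why the crop position has to follow the speaker.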

Installation Details

- Install Python dependencies in a virtual environment:

pip install clipsai

pip install whisperx@git+https://github.com/m-bain/whisperx.git

- Install libmagic

- Install FFmpeg

What It Analyzes

- Transcript-based clipping using WhisperX to detect word timestamps

- Speaker diarization (for resizing) using Pyannote (requires a Hugging Face access token)
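Since the diarization step needs an access token, it is usually safer to read it from the environment than to hard-code it. This is a small sketch under assumptions: `get_pyannote_token` and the variable name `PYANNOTE_AUTH_TOKEN` are hypothetical choices, not something Clips AI defines.

```python
import os


def get_pyannote_token(env_var: str = "PYANNOTE_AUTH_TOKEN") -> str:
    """Return the Hugging Face token stored in env_var, or raise if unset.

    Hypothetical helper; the environment variable name is an assumption.
    """
    token = os.environ.get(env_var, "").strip()
    if not token:
        raise RuntimeError(f"Set {env_var} to your Hugging Face access token.")
    return token
```

The returned string can then be passed as the `pyannote_auth_token` argument of `resize`.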

Outputs

- A set of clips with start and end times derived from transcript analysis

- Crop segments that keep the current speaker in frame at 9:16 or another target aspect ratio
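Clip boundaries are often handed to editors or downstream tools, so a readable export can be useful. The sketch below assumes start and end times are in seconds; `format_timestamp` and `clips_manifest` are hypothetical helpers, and the JSON layout is only one possible choice.

```python
import json


def format_timestamp(seconds: float) -> str:
    """Format a time in seconds as HH:MM:SS.mmm."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}.{ms:03d}"


def clips_manifest(spans) -> str:
    """Serialize (start, end) pairs, given in seconds, as a JSON list."""
    rows = [
        {
            "index": i,
            "start": format_timestamp(start),
            "end": format_timestamp(end),
            "duration_s": round(end - start, 3),
        }
        for i, (start, end) in enumerate(spans)
    ]
    return json.dumps(rows, indent=2)


# Illustrative values; real pairs would be (clip.start_time, clip.end_time).
print(clips_manifest([(12.5, 47.0), (3723.5, 3790.0)]))
```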

How It Works

- Import:

from clipsai import ClipFinder, Transcriber, resize

- Transcribe:

transcriber = Transcriber()

transcription = transcriber.transcribe(audio_file_path="/abs/path/to/video.mp4")

- Clip detection:

clipfinder = ClipFinder()

clips = clipfinder.find_clips(transcription=transcription)

- Resize:

crops = resize(video_file_path="/abs/path/to/video.mp4", pyannote_auth_token="pyannote_token", aspect_ratio=(9, 16))

Safety and Legal Considerations

- Ensure you have rights to process and repurpose the video content. Respect privacy and licensing when distributing clips.

Core Features

- Open-source Python library for automatic video clipping from transcripts

- Clips based on transcript timing (word-level start/stop detection via WhisperX)

- Aspect-ratio resizing (e.g., 16:9 to 9:16) with speaker-focused cropping using Pyannote

- Simple, code-first interface for developers to integrate into pipelines

- Support for long-form, speech-driven videos such as podcasts, interviews, speeches, and sermons

- Dependency installation via pip; virtual environment recommended

- FFmpeg and libmagic requirements for media processing