Coval

Platform for building reliable voice and chat agents quickly.

Coval Product Information

Coval — Self-Driving Style Simulation for AI Voice & Chat Agents

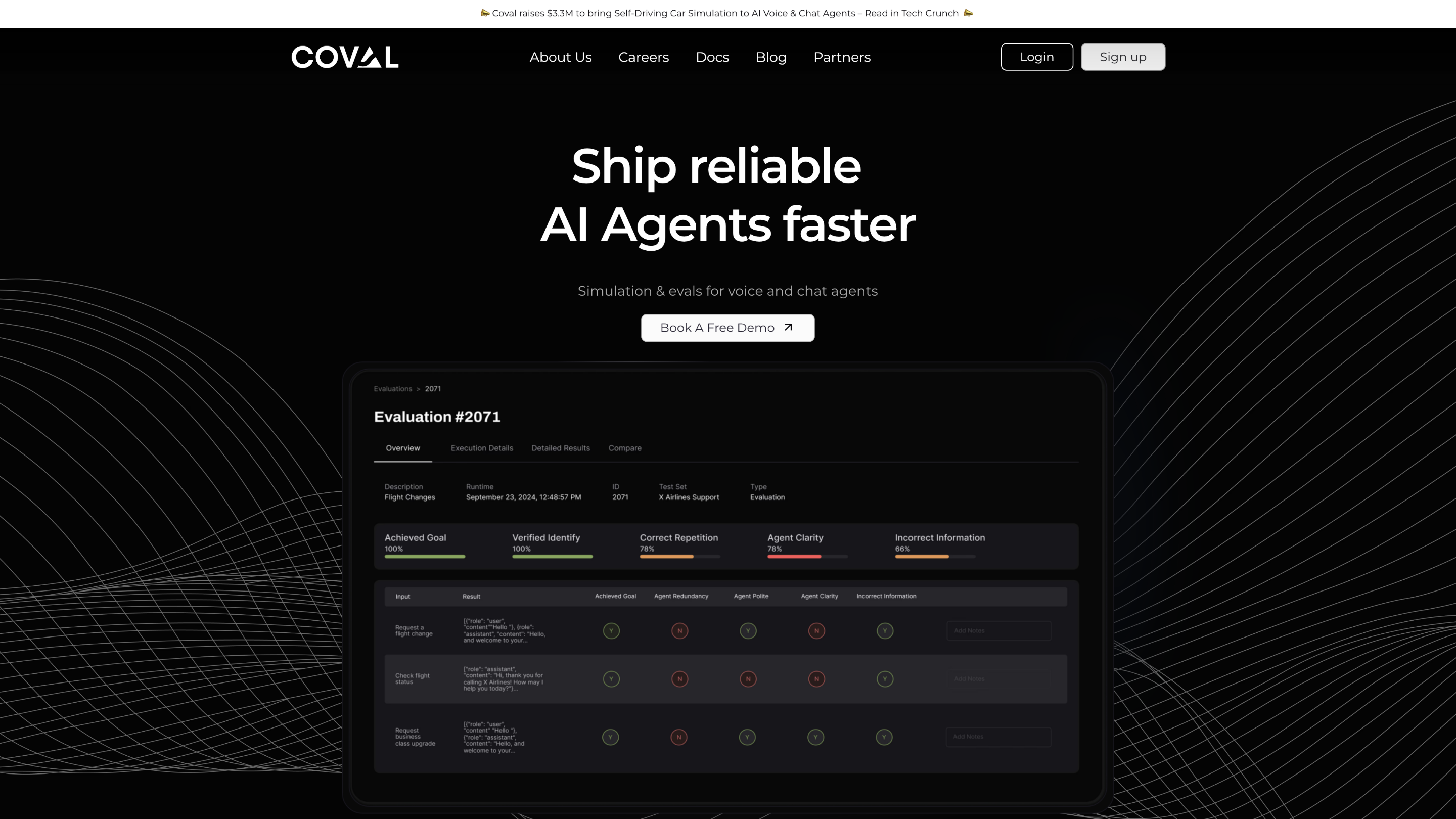

Coval is a simulation and evaluation platform designed to accelerate the development and testing of AI voice and chat agents by simulating thousands of scenarios and conversations. Drawing on testing practices from autonomous vehicles, Coval lets you generate high-volume, realistic test cases from your prompts and environments, then run them against your agents to observe performance, reliability, and behavior under varied conditions.

Key capabilities include voice-enabled simulations, transcript and audio replay-based evaluations, regression tracking, and production-level observability. The platform emphasizes developer-friendly workflows, robust metrics, and the ability to define custom evaluation criteria that align with business outcomes.

How Coval Works

- Simulate Conversations – Use scenario prompts, transcripts, workflows, or audio inputs to generate diverse test conversations. Environments, voices, and prompts are customizable to stress-test agents from all angles.

- Launch Evaluations – Evaluate agent performance with built-in metrics (latency, accuracy, tool-call effectiveness, instruction compliance) or your own custom metrics.

- Track Regressions – Compare results across runs with transcripts and audio replays, re-simulate prompt changes, and set performance alerts. Human-in-the-loop labeling is supported when needed.

- Production Observability – Monitor production calls, log all interactions, and evaluate live performance to ensure ongoing reliability.

- Alerts & Optimization – Define instant alerts for thresholds or off-path behavior and analyze performance to optimize workflows and agent behavior.
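The evaluate, compare, and alert cycle described above can be illustrated with a small, generic sketch. This is not Coval's API: the `Turn` and `Conversation` records, the two example metrics (average agent latency and tool-call recall), and the tolerances in `THRESHOLDS` are all hypothetical, chosen only to show how per-run metric summaries might be compared against a baseline run to raise regression alerts.

```python
from dataclasses import dataclass, field
from statistics import mean

# Hypothetical transcript records; not Coval's data model.
@dataclass
class Turn:
    role: str                                  # "user" or "agent"
    text: str
    latency_ms: float = 0.0                    # agent response latency (0 for user turns)
    tool_calls: list[str] = field(default_factory=list)

@dataclass
class Conversation:
    turns: list[Turn]
    expected_tools: set[str]                   # tools the scenario expects the agent to call

def avg_latency_ms(conv: Conversation) -> float:
    """Mean response latency over the agent's turns."""
    agent_turns = [t for t in conv.turns if t.role == "agent"]
    return mean(t.latency_ms for t in agent_turns) if agent_turns else 0.0

def tool_call_recall(conv: Conversation) -> float:
    """Fraction of expected tools the agent actually called."""
    if not conv.expected_tools:
        return 1.0
    called = {name for t in conv.turns for name in t.tool_calls}
    return len(called & conv.expected_tools) / len(conv.expected_tools)

def summarize(run: list[Conversation]) -> dict[str, float]:
    """Average each metric over all conversations in one test run."""
    return {
        "latency_ms": mean(avg_latency_ms(c) for c in run),
        "tool_recall": mean(tool_call_recall(c) for c in run),
    }

# Hypothetical alert rules: which direction is "better" and how much worsening is tolerated.
THRESHOLDS = {
    "latency_ms": ("lower", 100.0),   # alert if average latency rises by more than 100 ms
    "tool_recall": ("higher", 0.05),  # alert if tool-call recall drops by more than 0.05
}

def regression_alerts(baseline: dict[str, float], candidate: dict[str, float]) -> list[str]:
    """Compare a candidate run to a baseline run and report metrics that got worse."""
    alerts = []
    for metric, (better, tolerance) in THRESHOLDS.items():
        delta = candidate[metric] - baseline[metric]
        worse_by = delta if better == "lower" else -delta
        if worse_by > tolerance:
            alerts.append(
                f"{metric} regressed: {baseline[metric]:.2f} -> {candidate[metric]:.2f}"
            )
    return alerts

if __name__ == "__main__":
    def convo(latency: float, tools: list[str]) -> Conversation:
        return Conversation(
            turns=[Turn("user", "Book a table for two"),
                   Turn("agent", "Done!", latency_ms=latency, tool_calls=tools)],
            expected_tools={"book_table"},
        )

    baseline = summarize([convo(400.0, ["book_table"]), convo(500.0, ["book_table"])])
    candidate = summarize([convo(650.0, ["book_table"]), convo(700.0, [])])
    print(regression_alerts(baseline, candidate))
    # ['latency_ms regressed: 450.00 -> 675.00', 'tool_recall regressed: 1.00 -> 0.50']
```

Keeping the direction of "better" next to each tolerance lets one comparison function handle metrics where lower is better (latency) and where higher is better (recall, accuracy).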

Why Coval

- Built on proven foundations: years of autonomous-vehicle testing and scalable test infrastructure (backed by experience from Waymo).

- Metrics that matter: Coval works with you to define evaluation metrics that drive business outcomes.

- Developer-first design: seamless integrations and intuitive workflows to ship reliable agents faster.

- Comprehensive lifecycle: from development-time simulations to production observability and optimization.

Use Cases

- Testing and validating AI voice agents across thousands of simulated conversations.

- End-to-end evaluation of agent prompts, tool usage, and instruction compliance.

- Regression testing to catch performance drops after updates.

- Production monitoring to keep live agents reliable and to alert quickly when issues appear.

Core Features

- Scenario-based conversation simulation with customizable voices and environments

- Text, transcript, and audio inputs for flexible test generation

- Built-in and custom evaluation metrics (latency, accuracy, tool-call effectiveness, instruction compliance)

- Regression tracking with transcripts and audio replays

- Re-simulation of prompts and automated test re-runs for drift detection

- Production observability: log production calls and evaluate live performance

- Instant alerts and performance thresholds to catch off-path behavior

- Human-in-the-loop labeling support for nuanced evaluations

- Developer-friendly integrations and workflows tailored for AI voice/chat pipelines
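Custom evaluation metrics and human-in-the-loop labeling can work together: automated rules score every conversation, and low scores are routed to a person for review. The sketch below is a generic illustration under stated assumptions, not Coval's metric API; the rule builders `requires_disclosure` and `forbids_phrases`, the 0-to-1 scoring convention, and the `review_below` cutoff are all hypothetical.

```python
import re
from typing import Callable

# Hypothetical convention: a metric maps the agent's utterances to a score in [0, 1].
Metric = Callable[[list[str]], float]

def requires_disclosure(pattern: str) -> Metric:
    """Hypothetical compliance rule: the agent's first utterance must match `pattern`."""
    regex = re.compile(pattern, re.IGNORECASE)

    def metric(agent_utterances: list[str]) -> float:
        return 1.0 if agent_utterances and regex.search(agent_utterances[0]) else 0.0

    return metric

def forbids_phrases(*phrases: str) -> Metric:
    """Hypothetical rule: score drops with the share of utterances containing a banned phrase."""
    lowered = [p.lower() for p in phrases]

    def metric(agent_utterances: list[str]) -> float:
        if not agent_utterances:
            return 1.0
        bad = sum(any(p in u.lower() for p in lowered) for u in agent_utterances)
        return 1.0 - bad / len(agent_utterances)

    return metric

def evaluate(
    agent_utterances: list[str],
    metrics: dict[str, Metric],
    review_below: float = 0.8,
) -> tuple[dict[str, float], list[str]]:
    """Score one transcript; return the scores and the metric names flagged for human review."""
    scores = {name: m(agent_utterances) for name, m in metrics.items()}
    needs_review = [name for name, s in scores.items() if s < review_below]
    return scores, needs_review

if __name__ == "__main__":
    metrics = {
        "disclosure": requires_disclosure(r"may be recorded"),
        "no_promises": forbids_phrases("guarantee"),
    }
    transcript = ["Hi, this call may be recorded. How can I help?",
                  "I guarantee you'll get a refund."]
    print(evaluate(transcript, metrics))
```

Expressing each rule as a plain function keeps custom criteria easy to add, and the review threshold decides how much of the scoring is left to automation versus human labelers.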

How It Works (Summary)

- Create scenario prompts or upload transcripts/workflows to generate simulated conversations.

- Run evaluations against your AI agent with configurable voices and environments.

- Review results via metrics, replays, and alerts; iterate to improve agent reliability.

- Monitor production activity to maintain performance and quickly address issues.
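The create, run, and review loop in this summary can be sketched as a tiny test harness. This is a hypothetical illustration, not how Coval is implemented: a real simulator would typically generate user turns from a scenario prompt (for example with a language model and synthetic voices), whereas here the user turns are scripted so the run is deterministic. `Scenario`, `toy_agent`, `booking_confirmed`, and `run_suite` are names invented for this example.

```python
from dataclasses import dataclass
from typing import Callable

Agent = Callable[[str], str]                    # agent under test: user text -> reply
Transcript = list[tuple[str, str]]              # (role, text) pairs

@dataclass
class Scenario:
    name: str
    user_turns: list[str]                       # scripted stand-in for a simulated user

def simulate(scenario: Scenario, agent: Agent) -> Transcript:
    """Play the scenario's user turns against the agent and record the conversation."""
    transcript: Transcript = []
    for utterance in scenario.user_turns:
        transcript.append(("user", utterance))
        transcript.append(("agent", agent(utterance)))
    return transcript

def run_suite(
    scenarios: list[Scenario],
    agent: Agent,
    check: Callable[[Transcript], bool],
) -> dict[str, bool]:
    """Simulate every scenario and report pass/fail per scenario."""
    return {s.name: check(simulate(s, agent)) for s in scenarios}

def toy_agent(utterance: str) -> str:
    """Hypothetical keyword-based agent used only for this example."""
    text = utterance.lower()
    if "cancel" in text:
        return "Your booking is cancelled."
    if "book" in text:
        return "Booking confirmed."
    return "Sorry, could you rephrase?"

def booking_confirmed(transcript: Transcript) -> bool:
    """Pass if the agent's final reply confirms the booking."""
    agent_turns = [text for role, text in transcript if role == "agent"]
    return bool(agent_turns) and "confirmed" in agent_turns[-1].lower()

SCENARIOS = [
    Scenario("happy_path", ["Hi", "Book a table for two"]),
    Scenario("paraphrase", ["I'd like to reserve a table"]),
]

if __name__ == "__main__":
    print(run_suite(SCENARIOS, toy_agent, booking_confirmed))
```

The paraphrase scenario fails because the toy agent only recognizes the word "book", which is the kind of gap that running many varied scenarios is meant to expose before an agent reaches production.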

Disclaimer: This description summarizes Coval's capabilities based on publicly available information and is intended for overview purposes.