Future AGI

Introduction

Automated QA solutions for AI models with customizable metrics.

Future AGI Product Information

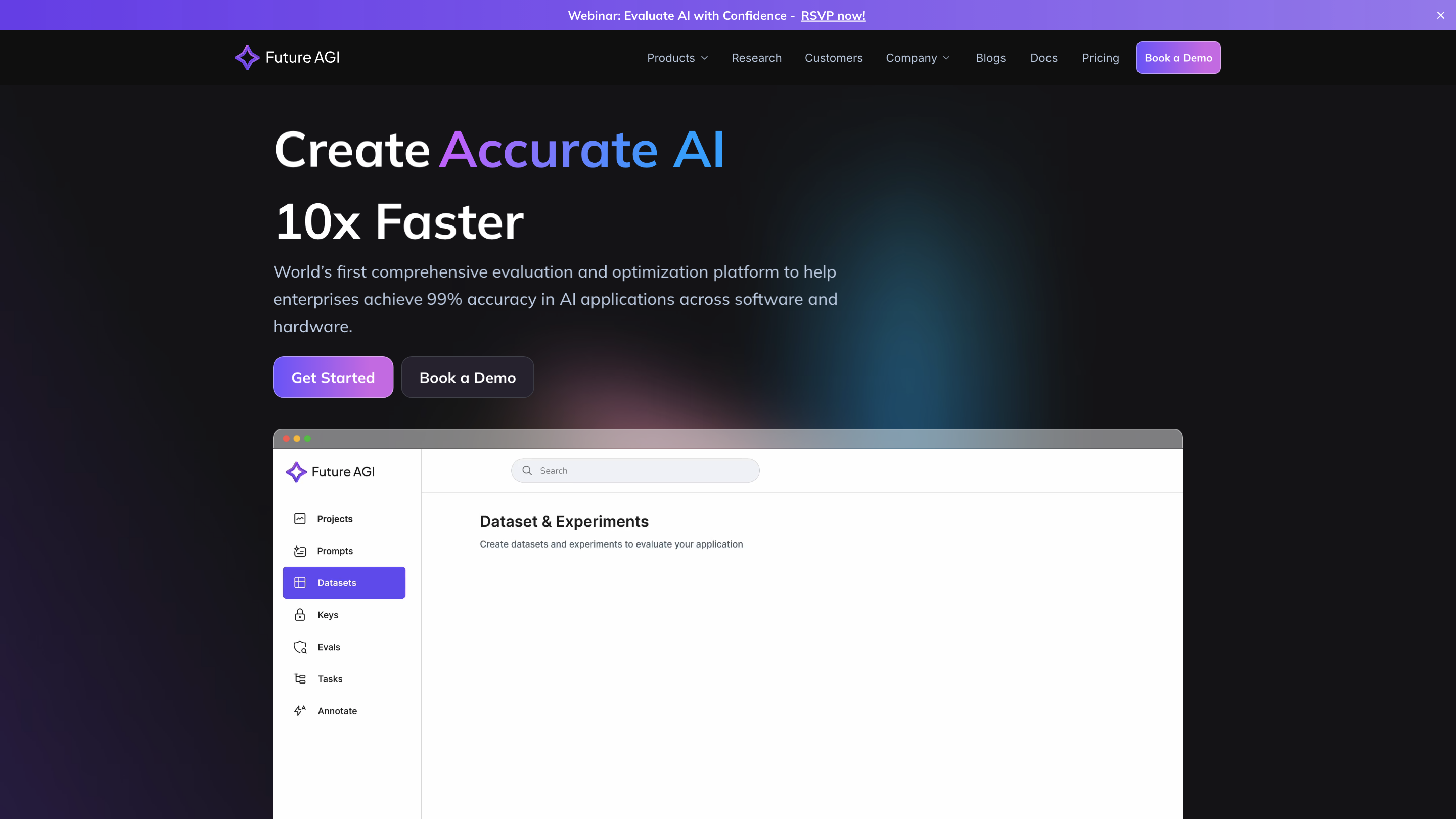

AI Model Evaluation & Optimization Platform by Future AGI

Future AGI describes its product as the world’s first comprehensive evaluation and optimization platform, designed to help enterprises reach up to 99% accuracy in AI applications across software and hardware. The platform emphasizes reliability, safety, and performance for production-grade AI systems, takes a developer-first approach, and is built to integrate into existing workflows.

Overview

- An end-to-end platform for building, evaluating, and improving AI systems across multiple modalities (text, image, audio, video).

- Emphasis on accuracy, reliability, and safety with built-in evaluation metrics and real-time production monitoring.

- Integrations and developer-friendly tooling to minimize disruption to current engineering workflows.

How It Works

- Datasets: Generate and manage diverse synthetic and real-world datasets, including edge cases, to train and test AI models.

- Experiment: Define and run multiple agentic workflow configurations without writing code, and compare their performance using built-in or custom evaluation metrics.

- Evaluate: Measure agent performance, pinpoint root causes, and close the loop with actionable feedback using proprietary evaluation metrics.

- Improve: Incorporate feedback and automatically refine prompts or models to boost overall performance.

- Monitor & Protect: Track applications in production with real-time insights, diagnose issues, and enforce safety thresholds to block unsafe content with low latency.
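The Experiment and Evaluate steps boil down to scoring several configurations on the same dataset with one metric, then inspecting the examples that fail. The sketch below illustrates that loop in plain Python; it is not Future AGI's SDK or API. The configurations, the `exact_match` metric, and the helper names `evaluate` and `failures` are all hypothetical stand-ins.

```python
from statistics import mean
from typing import Callable, Dict, List, Tuple

# Hypothetical types: a "configuration" is any callable from input text to output text,
# and a metric scores one (output, expected) pair in [0, 1].
Config = Callable[[str], str]
Metric = Callable[[str, str], float]
Dataset = List[Tuple[str, str]]


def exact_match(output: str, expected: str) -> float:
    """Custom metric: 1.0 if the normalized output equals the expected answer, else 0.0."""
    return 1.0 if output.strip().lower() == expected.strip().lower() else 0.0


def evaluate(configs: Dict[str, Config], dataset: Dataset, metric: Metric) -> Dict[str, float]:
    """Return the mean metric score of each configuration over the whole dataset."""
    return {
        name: mean(metric(run(prompt), expected) for prompt, expected in dataset)
        for name, run in configs.items()
    }


def failures(config: Config, dataset: Dataset, metric: Metric, threshold: float = 1.0):
    """List (input, expected, actual) for examples scoring below the threshold,
    as a starting point for root-cause analysis."""
    results = []
    for prompt, expected in dataset:
        actual = config(prompt)
        if metric(actual, expected) < threshold:
            results.append((prompt, expected, actual))
    return results


# Toy data and two hypothetical configurations to compare.
dataset: Dataset = [("2+2", "4"), ("capital of France", "Paris")]
configs: Dict[str, Config] = {
    "baseline": lambda prompt: "4",
    "lookup": lambda prompt: {"2+2": "4", "capital of France": "paris"}.get(prompt, ""),
}

if __name__ == "__main__":
    scores = evaluate(configs, dataset, exact_match)
    print(scores)                                  # mean score per configuration
    print(max(scores, key=scores.get))             # best-scoring configuration
    print(failures(configs["baseline"], dataset, exact_match))
```

Keeping the metric as a plain function makes it easy to swap a built-in check for a custom one without touching the comparison loop.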

Core Use Cases

- Multimodal AI evaluation across text, image, audio, and video.

- Iterative prompt optimization and model refinement guided by objective evaluation metrics.

- Robust production monitoring to sustain accuracy and safety in live deployments.

- Integration into existing development workflows with minimal disruption.

Why It Matters

- Helps enterprises achieve higher accuracy in AI applications, reducing risk and increasing trust in automated decisions.

- Provides visibility into production performance, enabling proactive improvement and faster time-to-value.

- Supports safety and governance through built-in safety metrics and real-time content blocking.
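Real-time content blocking generally means scoring each response and withholding it when the score crosses a safety threshold, while keeping the check fast. The sketch below shows only that pattern and assumes nothing about how Future AGI implements it. The keyword-based `unsafe_score`, the `BLOCKLIST` phrases, the `guard` helper, and the default threshold of 0.5 are hypothetical placeholders for a real safety classifier and policy.

```python
import time
from dataclasses import dataclass

# Hypothetical placeholder: a real system would call a trained safety classifier here.
BLOCKLIST = {"password dump", "credit card numbers"}


def unsafe_score(text: str) -> float:
    """Toy score in [0, 1]: the fraction of blocklisted phrases found in the text."""
    lowered = text.lower()
    hits = sum(phrase in lowered for phrase in BLOCKLIST)
    return hits / len(BLOCKLIST)


@dataclass
class GuardResult:
    allowed: bool
    score: float
    latency_ms: float


def guard(text: str, threshold: float = 0.5) -> GuardResult:
    """Block text whose score meets or exceeds the threshold and record the check's latency."""
    start = time.perf_counter()
    score = unsafe_score(text)
    latency_ms = (time.perf_counter() - start) * 1000
    return GuardResult(allowed=score < threshold, score=score, latency_ms=latency_ms)


if __name__ == "__main__":
    for reply in ["Here is the weather forecast.", "Sending the password dump now."]:
        result = guard(reply)
        print(reply, "->", "allowed" if result.allowed else "blocked", f"({result.latency_ms:.3f} ms)")
```

Recording latency alongside the decision is one way to verify that a guard stays within a production latency budget.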

Getting Started

- Explore diverse datasets (synthetic and real-world) tailored to your domain.

- Run no-code experiments to compare agentic configurations against built-in or custom metrics.

- Evaluate results to identify root causes and iterate improvements.

- Deploy with confidence using monitoring and protective features to maintain safety and reliability.

Feature Highlights

- End-to-end evaluation and optimization platform for AI apps across software and hardware

- Generate and manage diverse synthetic datasets, including edge cases

- No-code experimentation to compare multiple agentic workflows using built-in or custom metrics

- Comprehensive evaluation to pinpoint root causes and provide actionable feedback

- Automated improvements to prompts and models based on evaluation results

- Real-time monitoring of production AI systems with diagnostics and safety metrics

- Multimodal evaluation support (text, image, audio, video)

- Seamless integration with existing developer workflows and tools

- Safety and governance features to block unsafe content with minimal latency

- Case studies, blogs, and industry testimonials illustrating impact and ROI