GiGOS


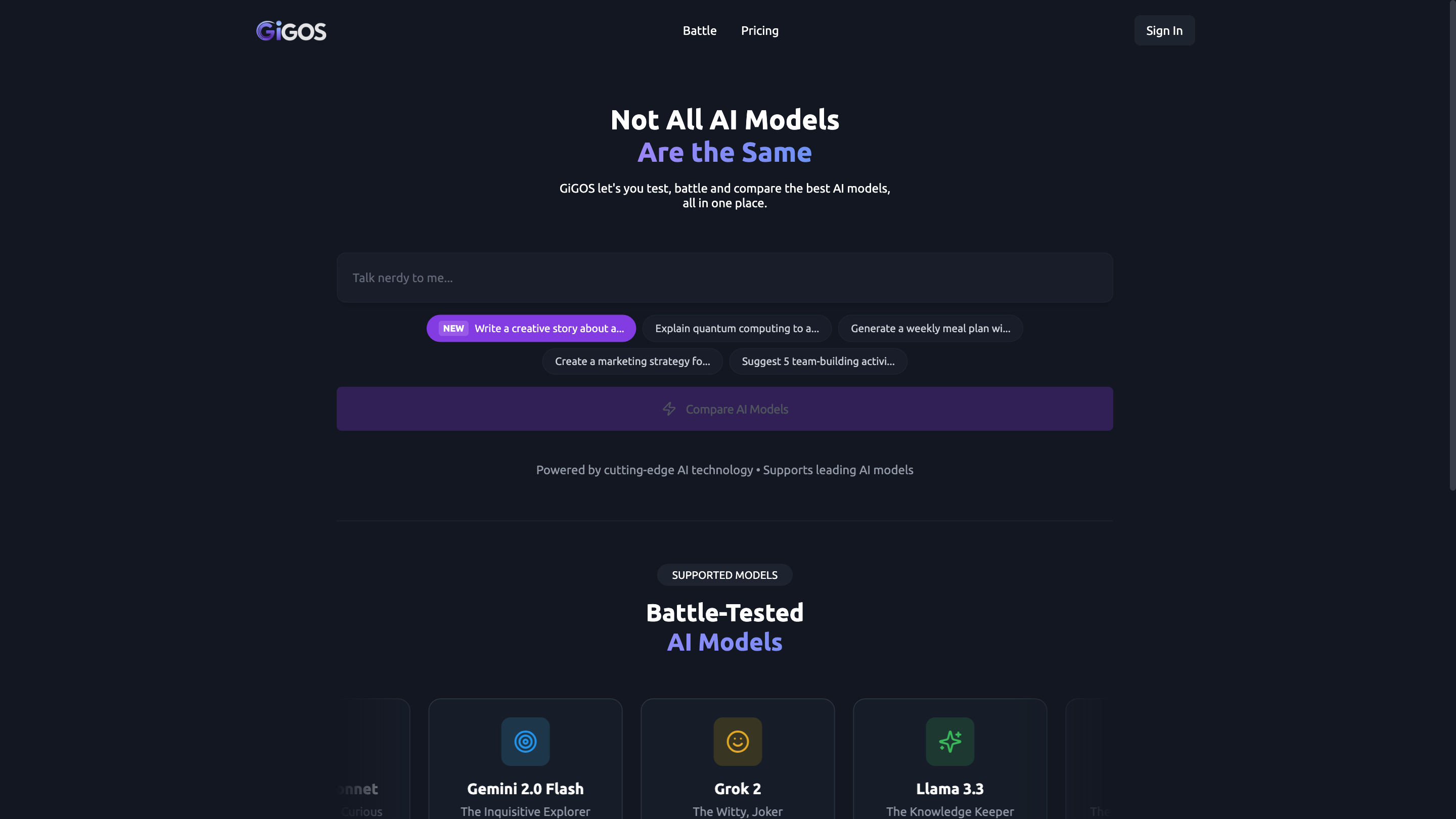

Introduction

GiGOS is a unified platform for accessing a variety of AI models through a single interface.

GiGOS Product Information

GiGOS Battle Pricing & AI Model Playground is a multi-model AI evaluation and experimentation hub where you can test, battle, and compare leading AI models in one place. It pairs a marketplace-style catalog of AI models with a simple Pay-As-You-Go credit system, which supports flexible usage, side-by-side comparisons, and rapid experimentation across a range of AI capabilities.

How It Works

- Credit-based access: Start by depositing a base amount (e.g., $10) into your account. Credits never expire.

- Pay-Per-Use: Credits are deducted only when you run a model or request an operation.

- Top Up: Add more credits at any time, at your preferred tier, to continue testing and benchmarking.

- Model Catalog: Browse and select from battle-tested AI models to run prompts, tests, or tasks.

- Compare & Battle: Execute identical prompts across multiple models to compare outputs, latency, and performance in a controlled setting.

Supported AI Models

- Claude 3.7 Sonnet (The Thoughtfully Curious)

- Gemini 2.0 (The Inquisitive Explorer)

- Grok 2 (The Witty Joker)

- Llama 3.3 (The Knowledge Keeper)

- GPT-4o (The Adaptive Collaborator)

- DeepSeek R1 (The Quiet Titan)

Note: The platform offers battle-tested models behind a consistent interface, so you can evaluate and compare their behavior, outputs, and capabilities side-by-side.

Pricing & Billing

- Pay-As-You-Go Credits: Start with a deposit (e.g., $10+) and spend credits per use.

- No Expiration: Credits never expire, so you can accumulate and test at your own pace.

- Top Up Anytime: Add credits when needed, at your preferred tier.

- Simple and Transparent: Per-use costs are stated clearly and reflect the model chosen and the complexity of the task.

Getting Started

- Create a GiGOS account or sign in to an existing one.

- Load your initial credits (subject to the minimum deposit offered).

- Browse the supported AI models and pick one to run your task.

- Run your prompts, compare outputs, and decide on next steps.

Use Cases

- Side-by-side model benchmarking

- Quick prototyping across multiple AI backends

- Cost-aware experimentation for product features

- Research comparisons and reproducible testing

Safety & Compliance

- Ensure you have rights to any data you submit.

- Use responsibly and in accordance with each model’s terms.

- Be mindful of data privacy and model usage policies when comparing outputs.

Core Features

- Centralized platform to test, battle, and compare multiple AI models in one place

- Wide range of leading models available for evaluation

- Simple Pay-As-You-Go credit system with non-expiring credits

- Immediate usage without long-term commitments

- Side-by-side benchmarking to compare outputs, latency, and behavior across models

- Flexible top-up options to scale experiments

- Clear visibility into per-use costs and model capabilities