Hume AI

Introduction

Measure, understand, and improve human emotion with AI

Hume AI Product Information

Hume AI Platform Overview

Hume AI offers a suite of AI-driven voice and emotion analytics tools for developers and businesses. The platform centers on expressive, empathic voice generation and analysis, so you can build emotionally intelligent audio experiences for text-to-speech (TTS), voice agents, and real-time conversations.

Core Tools and Capabilities

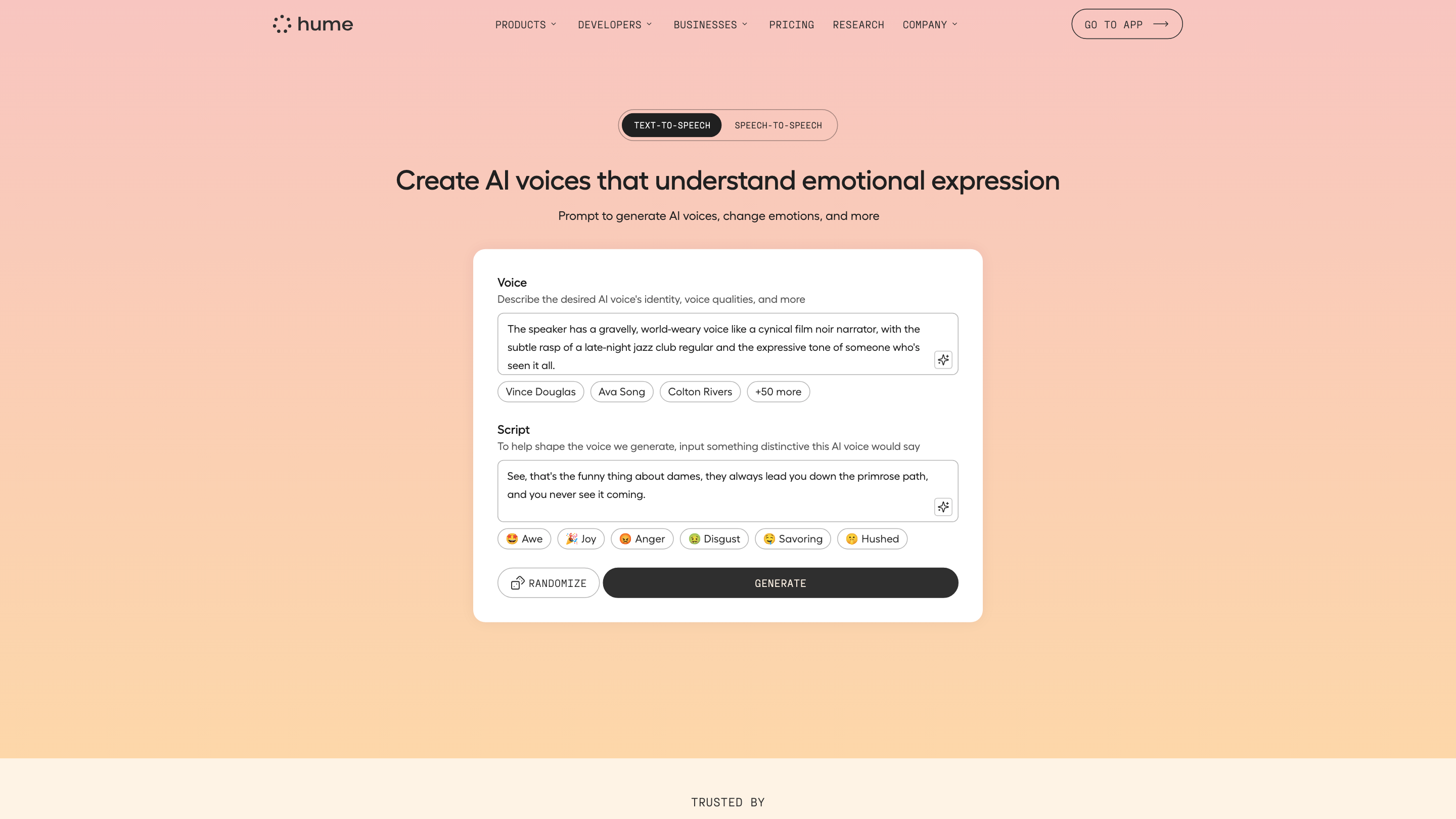

- Text-to-Speech (TTS): Create AI voices with nuanced emotional expression using Octave, a voice-based LLM that uses the contextual meaning of the text to predict cadence, emotion, and delivery.

- Empathic Voice Interface (EVI): Real-time, conversational voice agent capabilities with adaptive tone and personality emulation. Includes EVI 2 for rapid, emotionally aware interactions.

- Expression Measurement API: Analyze expression across face, voice, and language to quantify emotional states.

- Conversational Voice: Full developer platform for deploying emotionally intelligent voice agents with flexible prompts and voice modulation.

- TTS Creator Studio: Tools to generate and edit long-form audio content.

- Developer Platform & API: Access to APIs, documentation, playground, and a community for integrating Hume’s voice capabilities into applications.

- Research & Ethos: Emphasis on human well-being with The Hume Initiative guidelines for empathic AI.

- Multi-Modal & Multilingual: Support for diverse voices, tones, and accents, with dynamic emotion control and multilingual capabilities (EVI 2).

- Real-Time Voice Modulation: Fine-grained control over voice properties such as pitch, femininity, nasality, and cadence.

- Prompts & Voices Library: A gallery of predefined voices and prompts for rapid prototyping and customization.

- Studio-Grade Outputs: Generate high-quality audio suitable for podcasts, voiceovers, audiobooks, and more.

- Integrations & API Access: API keys, usage monitoring, documentation, and developer resources to embed capabilities into your apps.

- Safety & Alignment: Guidelines and best practices to ensure responsible use of empathic voice technologies.

How to Use (High-Level)

- Create an API key and access the Developer Platform.

- Choose the tool (TTS, EVI, or Creator Studio) based on your use case.

- Provide prompts or scripts to generate AI voices with the desired emotion and style.

- Integrate via API into your app for real-time or batch processing.

- Use the Expression Measurement API to analyze and monitor emotional cues where needed.
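
The steps above can be sketched in Python as a small planning helper. This is only an illustration of the workflow, not Hume's SDK or API: the environment variable name `HUME_API_KEY`, the `VoiceJob` class, the `plan_job` function, and the use-case labels are all hypothetical names introduced here, and the code makes no network calls. Consult the official developer documentation for the real endpoints and request formats.

```python
import os
from dataclasses import dataclass

# Hypothetical mapping from a use case to the tools named in this overview.
TOOL_FOR_USE_CASE = {
    "narration": "TTS",
    "voice_agent": "EVI",
    "long_form_editing": "Creator Studio",
    "emotion_analysis": "Expression Measurement API",
}


@dataclass
class VoiceJob:
    """Illustrative description of a job; not a real request schema."""
    tool: str
    script: str
    style: str
    realtime: bool


def load_api_key(env_var: str = "HUME_API_KEY") -> str:
    """Read the API key from the environment (variable name is an assumption)."""
    key = os.environ.get(env_var, "")
    if not key:
        raise RuntimeError(f"Set {env_var} before calling the API")
    return key


def plan_job(use_case: str, script: str, style: str = "neutral") -> VoiceJob:
    """Pick a tool for the use case and record the script and desired style."""
    if use_case not in TOOL_FOR_USE_CASE:
        raise ValueError(f"Unknown use case: {use_case!r}")
    tool = TOOL_FOR_USE_CASE[use_case]
    # In this sketch, only the conversational interface is treated as real-time.
    return VoiceJob(tool=tool, script=script, style=style, realtime=(tool == "EVI"))
```

For example, `plan_job("narration", "Welcome back.", style="warm")` returns a `VoiceJob` whose `tool` is `"TTS"` and whose `realtime` flag is `False`. Keeping the key in an environment variable rather than in source code is a common practice for any API integration.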

Practical Use Cases

- Build emotionally adaptive voice agents for customer support.

- Generate expressive narration for podcasts or audiobooks.

- Create interactive experiences with real-time voice emotion modulation.

- Analyze audience reactions with the Expression Measurement API to optimize content.

Safety and Ethical Considerations

- Follow The Hume Initiative guidelines for empathic AI.

- Use the technology responsibly: avoid manipulating or deceiving users, and obtain user consent where required.

What’s Inside

- TTS (Octave): Emotion-aware, context-aware text-to-speech with variable delivery.

- EVI 2: Empathic, real-time voice interface capable of emulating diverse personalities and accents.

- Expression Measurement API: Quantify emotional cues across face, voice, and language.

- Conversational Voice Platform: End-to-end solution for deploying emotionally intelligent voice agents.

- TTS Creator Studio: Tools to create and edit long-form audio content.

- Developer Platform & API: Keys, usage monitoring, docs, playground, and community.

- Multilingual & Personalization: Broad language support and adjustable voice characteristics.

- Real-Time Voice Modulation: Fine-grained control over tone, pace, and delivery.

- Research-Driven: Ongoing publications and adherence to responsible AI guidelines.