Jan


Introduction

Offline ChatGPT alternative

Jan Product Information

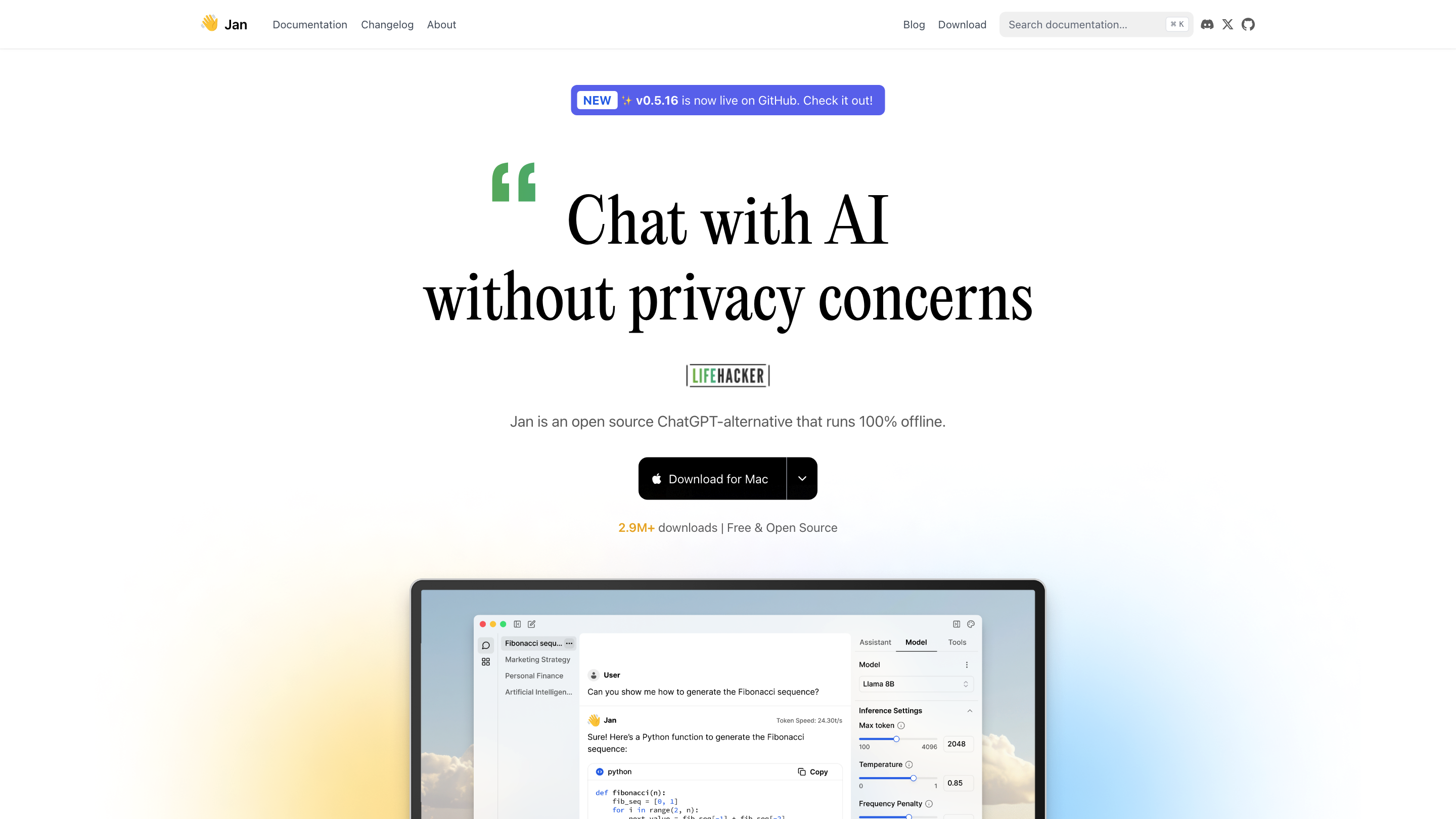

Jan — Open source offline ChatGPT-alternative

Jan is an open source AI assistant that can run 100% offline on your device. It emphasizes local-first design, user ownership of data, and full customization. You can chat with AI locally, download and run models on your own computer, and optionally connect to cloud-based AI providers when needed.

How it works

- Local AI: Run conversations with AI models directly on your machine for privacy-conscious interactions.

- Model Hub: Download and run models such as Llama 3, Gemma, or Mistral locally.

- Cloud Connectors: Route requests to more capable cloud models (e.g., OpenAI, Groq, Cohere) when desired.

- Local API Server: Set up an OpenAI-compatible API server powered by local models with a single click.

- Extensible: Extend Jan with third-party extensions, data connectors, and cloud AI connectors.
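Because the Local API Server is described as OpenAI-compatible, a client can talk to it using the OpenAI chat-completions request format. The following is a minimal sketch, not Jan's documented client: the address `http://localhost:1337/v1` and the model identifier `llama3-8b-instruct` are assumptions for illustration, so substitute the values shown in your own Jan installation.

```python
import json
import urllib.request

# Assumed values: replace with the address and model shown in your Jan settings.
BASE_URL = "http://localhost:1337/v1"  # hypothetical host and port
MODEL_ID = "llama3-8b-instruct"        # hypothetical model identifier


def build_chat_request(prompt, model=MODEL_ID, base_url=BASE_URL):
    """Build a POST request in the OpenAI chat-completions format."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(prompt):
    """Send the prompt to the local server and return the reply text."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=60) as resp:
        body = json.load(resp)
    # OpenAI-style responses carry the reply in choices[0].message.content.
    return body["choices"][0]["message"]["content"]
```

With the local server running and a model loaded, calling `chat("Hello")` should return the model's reply as a string. Only the standard library is used, so nothing beyond Python itself needs to be installed.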

Key principles

- Local-first: Conversations and files stay on your device; privacy and ownership are prioritized.

- User-owned: Your data remains under your control and portable across systems.

- Fully customizable: Tailor alignment, moderation, and censorship levels; install extensions to fit your workflow.

Core strengths

- Offline operation: Use Jan without an internet connection.

- Open source: Transparent development with public repositories and community contributions.

- Cross-ecosystem support: Deploy local models or connect to cloud AIs as needed.

- Active development: Ongoing updates, new models, and new features (e.g., memory and assistant features coming soon).

How to use Jan

- Download and install on macOS (or other supported platforms) from the official repository.

- Choose your mode: run local models, or connect to cloud AIs for enhanced capabilities.

- Start chatting with the AI, or set up a local API server for programmatic access.

- Customize with extensions and alignment/moderation settings to fit your needs.

Note: Jan is designed to be highly configurable and privacy-respecting, with all critical data processed locally unless you opt in to cloud services.
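For programmatic access, the choice between local and cloud mode can be reduced to picking a base URL and, for cloud providers, an API key. The sketch below illustrates that idea on the client side and is not how Jan itself is configured: the local address, the `select_endpoint` helper, and the assumption that the local server needs no key are all hypothetical.

```python
from dataclasses import dataclass
from typing import Mapping, Optional

LOCAL_BASE_URL = "http://localhost:1337/v1"   # assumed local server address
CLOUD_BASE_URL = "https://api.openai.com/v1"  # OpenAI's public API base URL


@dataclass(frozen=True)
class Endpoint:
    base_url: str
    api_key: Optional[str]


def select_endpoint(mode: str, env: Mapping[str, str]) -> Endpoint:
    """Pick the endpoint for 'local' or 'cloud' mode (hypothetical helper)."""
    if mode == "local":
        # Assumption: the local server accepts requests without a key.
        return Endpoint(LOCAL_BASE_URL, None)
    if mode == "cloud":
        key = env.get("OPENAI_API_KEY")
        if not key:
            raise ValueError("cloud mode requires OPENAI_API_KEY to be set")
        return Endpoint(CLOUD_BASE_URL, key)
    raise ValueError(f"unknown mode: {mode!r}")


def request_headers(endpoint: Endpoint) -> dict:
    """Build HTTP headers, adding a bearer token only when a key is present."""
    headers = {"Content-Type": "application/json"}
    if endpoint.api_key:
        headers["Authorization"] = f"Bearer {endpoint.api_key}"
    return headers
```

Passing `os.environ` as `env` keeps the key out of source code, and failing loudly when the key is missing avoids silently falling back to a mode the user did not choose.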

Feature list

- Local-first AI: Conversations and data stay on your device by default

- 100% offline operation: Run models locally without requiring internet access

- Model Hub: Download and run models such as Llama 3, Gemma, and Mistral locally

- Cloud AI integration: Route to OpenAI, Groq, Cohere, and other cloud providers when needed

- Local API server: One-click setup for an OpenAI-compatible API

- Extensions: Extend Jan with third-party connectors, tools, and data integrations

- Highly customizable: Adjust alignment, moderation, and censorship levels

- Open Source: Transparent development with public repositories and community involvement

- Memory and assistants (coming soon): Create personalized assistants that remember conversations and tasks

Safety and privacy considerations

- Local-first design prioritizes privacy; data remains on your device by default.

- You can opt to connect to cloud providers if you need capabilities beyond local models.

- As with all AI tools, manage how and where you deploy AI to align with your privacy and security requirements.
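One way to enforce a "local only" policy in your own scripts is to check that an endpoint points at the loopback interface before sending sensitive prompts to it. This is a sketch of such a guard under stated assumptions: `is_loopback_url` is a hypothetical helper, not part of Jan, and it only inspects the URL rather than verifying what is actually listening there.

```python
import ipaddress
from urllib.parse import urlparse


def is_loopback_url(url: str) -> bool:
    """Return True if the URL's host is localhost or a loopback IP address."""
    host = urlparse(url).hostname
    if host is None:
        return False
    if host == "localhost":
        return True
    try:
        # Covers 127.0.0.0/8 for IPv4 and ::1 for IPv6.
        return ipaddress.ip_address(host).is_loopback
    except ValueError:
        # Any other DNS name is treated as remote.
        return False


def require_local(url: str) -> str:
    """Raise if the endpoint would send data off the device."""
    if not is_loopback_url(url):
        raise ValueError(f"refusing non-local endpoint: {url}")
    return url
```

Calling `require_local(base_url)` before each request makes an accidental switch to a cloud endpoint fail loudly instead of silently sending data off the device.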

Technical details

- Platform: macOS download available; ongoing cross-platform support

- Licensing: Free & Open Source

- Community: Active ecosystem with multiple contributors and ongoing updates

Quick start (summary)

- Download Jan for your platform

- Run local models or connect to cloud AIs

- Optional: set up the local API server for programmatic use

- Customize with extensions and settings to suit your workflow
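After enabling the local API server, a quick way to confirm it is reachable is to ask it which models it exposes. The sketch below assumes the server implements the OpenAI-style `GET /models` route and listens on `http://localhost:1337/v1`. Both are assumptions to verify against your own setup.

```python
import json
import urllib.request

BASE_URL = "http://localhost:1337/v1"  # assumed address; check your Jan settings


def parse_model_ids(body: dict) -> list:
    """Extract model ids from an OpenAI-style model list response."""
    return [entry["id"] for entry in body.get("data", [])]


def list_local_models(base_url: str = BASE_URL) -> list:
    """Fetch the model list from the local server and return the ids."""
    with urllib.request.urlopen(f"{base_url}/models", timeout=10) as resp:
        return parse_model_ids(json.load(resp))
```

If `list_local_models()` raises a connection error, the server is most likely not running or is listening on a different port.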