LangWatch

Introduction

Improve GenAI with quality control & user analytics.


LangWatch Product Information

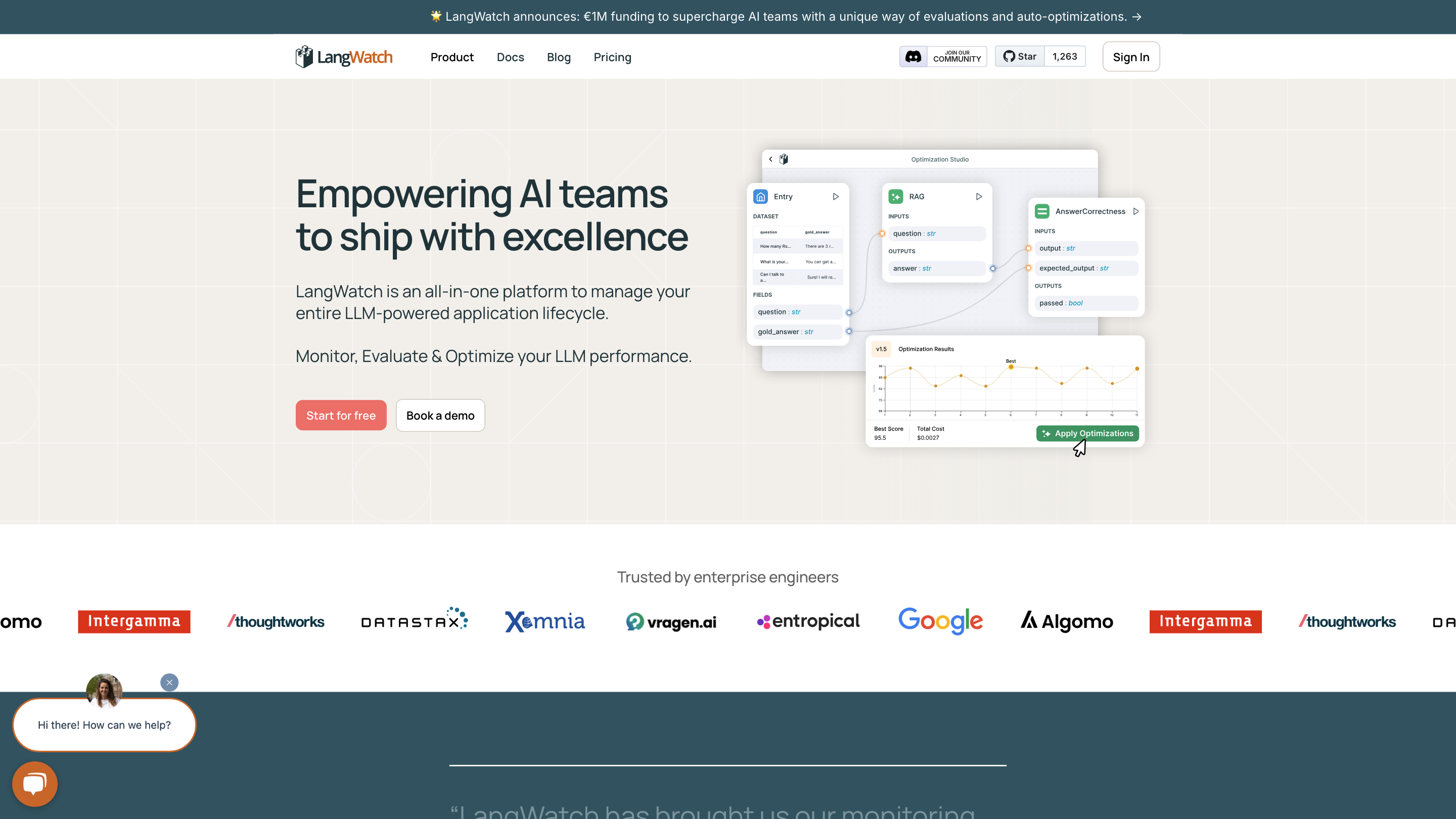

LangWatch is an all-in-one platform for managing the entire lifecycle of LLM-powered applications: monitoring, evaluating, and optimizing AI models and pipelines. It targets enterprise teams, providing observability, quality control, and automated optimization so they can move from proof of concept (PoC) to production with confidence. LangWatch emphasizes ease of integration, collaboration with domain experts, and strong security and compliance options, including self-hosted and hybrid deployments.

Overview

LangWatch helps you measure performance, build reliable prompts and models, and continuously optimize your LLM workflows. It offers full dataset management, versioned experiments, and a suite of off-the-shelf evaluations for establishing quality gates across your AI systems. The platform is model-agnostic, is designed to fit into any tech stack, and lets domain experts contribute directly to the AI development process.
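To make the idea of an evaluation-based quality gate concrete, here is a minimal sketch in plain Python. It is not LangWatch's API: `quality_gate`, `GateResult`, and the two toy evaluators are hypothetical names chosen for illustration. The assumption is that each evaluator returns pass/fail for one (question, answer) pair and that a release is blocked when the overall pass rate falls below a threshold.

```python
from dataclasses import dataclass
from typing import Callable

# A hypothetical evaluator: takes (question, answer) and returns True if the answer passes.
Evaluator = Callable[[str, str], bool]


@dataclass
class GateResult:
    pass_rate: float
    passed: bool


def quality_gate(
    samples: list[tuple[str, str]],
    evaluators: list[Evaluator],
    threshold: float = 0.9,
) -> GateResult:
    """Run every evaluator on every (question, answer) pair and gate on the overall pass rate."""
    checks = [evaluate(q, a) for q, a in samples for evaluate in evaluators]
    pass_rate = sum(checks) / len(checks) if checks else 0.0
    return GateResult(pass_rate=pass_rate, passed=pass_rate >= threshold)


# Two toy evaluators standing in for real ones (hypothetical, not LangWatch built-ins).
def non_empty(question: str, answer: str) -> bool:
    return bool(answer.strip())


def under_200_chars(question: str, answer: str) -> bool:
    return len(answer) <= 200
```

With three samples where one answer is empty, these two evaluators yield five passes out of six checks (about 0.83), so a 0.9 threshold would block the release. Treating an empty sample set as a failure is a deliberate, conservative choice in this sketch.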

How LangWatch Works

- Measure performance across your entire LLM pipeline, online and offline, not just individual prompts, treating the stack as a unit-testable system.

- Use DSPy-driven optimizers to automatically discover the best prompts and demonstrations, reducing manual trial-and-error.

- Build, evaluate, and iterate with versioned experiments to track what works best across prompts, models, and datasets.

- Integrate with your existing tech stack and deploy trusted flows as APIs or through your preferred interfaces.
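The optimization step above boils down to scoring candidate prompts against a labeled dataset and keeping the best one. LangWatch describes its optimizers as DSPy-driven; the sketch below does not use DSPy and instead swaps in a plain exhaustive search, which is far less sophisticated but shows the underlying loop. `score_prompt`, `best_prompt`, and the stub `fake_model` are hypothetical; in practice the model function would call a real LLM.

```python
from typing import Callable

# A model is any function from a prompt string to a completion string (hypothetical interface).
Model = Callable[[str], str]


def score_prompt(template: str, dataset: list[tuple[str, str]], model: Model) -> float:
    """Exact-match accuracy of the model's outputs when prompted with this template."""
    hits = sum(model(template.format(input=x)).strip() == y for x, y in dataset)
    return hits / len(dataset)


def best_prompt(
    templates: list[str], dataset: list[tuple[str, str]], model: Model
) -> tuple[str, float]:
    """Exhaustively score every candidate template and return the top scorer."""
    scored = [(t, score_prompt(t, dataset, model)) for t in templates]
    return max(scored, key=lambda pair: pair[1])


def fake_model(prompt: str) -> str:
    """Stub LLM for illustration: answers tersely only when the prompt asks for one word."""
    label = "positive" if "good" in prompt else "negative"
    return label if "one word" in prompt else f"I think the sentiment is {label}."
```

Comparing "Classify the sentiment: {input}" with "Classify the sentiment in one word (positive/negative): {input}" on a small sentiment set, the stub only matches the expected labels exactly under the second template, so that template wins. Exact match is the simplest possible metric; any evaluator could replace it.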

Core Capabilities

- Observability: Monitor performance, latency, cost, and reliability with comprehensive dashboards and alerts.

- Evaluations & Guardrails: Create and apply quality evaluations, jailbreak guards, RAG quality checks, and safety/compliance rules.

- Optimization Studio: Measure, experiment, and optimize prompts, models, and demonstrations to maximize quality and efficiency.

- Data & Experiment Management: Full dataset management, custom evaluators alongside 30+ off-the-shelf evaluations, and versioned experiments.

- Deployment Flexibility: Self-hosted, hybrid, or cloud deployment options to meet enterprise data control requirements.

- Model Interoperability: Works with any LLM and integrates with a wide range of frameworks; easy to plug into existing pipelines.

- Collaboration: Bring domain experts (legal, sales, HR, finance, etc.) into the workflow for human-in-the-loop evaluation.

- Security & Compliance: Enterprise-grade controls, data residency options, and compliance certifications (e.g., GDPR, ISO 27001).

- Integrations & API: API access, LangChain, DSPy tooling, Vercel AI SDK, LiteLLM, OpenTelemetry, LangFlow, and more.

- Observability & Debugging: End-to-end observability including metrics, traces, logs, and debugging aids for AI workloads.
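The observability features listed above rest on aggregating per-call trace data into metrics such as latency percentiles and cost. The sketch below shows that aggregation under stated assumptions: the `Span` fields, the per-1,000-token prices, and the 2,000 ms alert threshold are all hypothetical, and the percentile uses the simple nearest-rank method rather than interpolation.

```python
import math
from dataclasses import dataclass

# Assumed prices in USD per 1,000 tokens; real prices vary by model and provider.
PRICE_PER_1K_PROMPT = 0.0005
PRICE_PER_1K_COMPLETION = 0.0015


@dataclass
class Span:
    """One LLM call as a tracing system might record it (hypothetical fields)."""
    latency_ms: float
    prompt_tokens: int
    completion_tokens: int


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile of a non-empty list."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarize(spans: list[Span], p95_alert_ms: float = 2000.0) -> dict:
    """Aggregate call count, p95 latency and total cost, and flag a latency alert."""
    cost = sum(
        s.prompt_tokens / 1000 * PRICE_PER_1K_PROMPT
        + s.completion_tokens / 1000 * PRICE_PER_1K_COMPLETION
        for s in spans
    )
    p95 = percentile([s.latency_ms for s in spans], 95)
    return {
        "count": len(spans),
        "p95_latency_ms": p95,
        "total_cost_usd": round(cost, 6),
        "alert": p95 > p95_alert_ms,
    }
```

For 20 calls with latencies of 100, 200, ..., 2,000 ms and 1,000 prompt plus 1,000 completion tokens each, this reports a p95 of 1,900 ms, a total cost of 0.04 USD, and no alert. Alerting on p95 rather than the mean keeps a few slow outliers visible without letting a single spike trigger the alarm.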

Use Cases

- Optimize RAG performance: improve retrieval accuracy and reduce hallucinations by tuning prompts and demonstrations.

- Improve routing and categorization: enhance decision paths for agents and document classification.

- Build reliable evaluations: create custom or use ready-made evaluations to guarantee quality and compliance.

- Data-driven ROI: measure the business impact of LLM applications through integrated metrics and dashboards.
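Improving RAG retrieval accuracy starts with measuring it. Two standard retrieval metrics are hit rate at k (did any relevant document appear in the top k?) and mean reciprocal rank (how high did the first relevant document appear?). The sketch below computes both from document IDs; it is a generic illustration, not a LangWatch evaluator, and the function names are hypothetical.

```python
def hit_rate_at_k(retrieved: list[list[str]], relevant: list[set[str]], k: int = 3) -> float:
    """Share of queries whose top-k retrieved document IDs contain at least one relevant ID."""
    if len(retrieved) != len(relevant):
        raise ValueError("retrieved and relevant must have one entry per query")
    hits = sum(bool(set(docs[:k]) & gold) for docs, gold in zip(retrieved, relevant))
    return hits / len(retrieved)


def mean_reciprocal_rank(retrieved: list[list[str]], relevant: list[set[str]]) -> float:
    """Average of 1/rank of the first relevant document per query (0 if none is retrieved)."""
    if len(retrieved) != len(relevant):
        raise ValueError("retrieved and relevant must have one entry per query")
    total = 0.0
    for docs, gold in zip(retrieved, relevant):
        for rank, doc_id in enumerate(docs, start=1):
            if doc_id in gold:
                total += 1 / rank
                break
    return total / len(retrieved)
```

For three queries whose first relevant document lands at rank 2, nowhere, and rank 1, hit rate at 3 is 2/3 and MRR is (1/2 + 0 + 1) / 3 = 0.5. Tracking both before and after a prompt or retriever change shows whether retrieval, rather than generation, is the source of hallucinations.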

How It Helps Teams

LangWatch enables developers and domain experts to collaborate effectively, reducing the friction of moving from PoC to production. Its evaluation-driven approach and automated optimization accelerate delivery while maintaining governance and security.

Availability & Deployment Options

- Self-hosted or hybrid deployment for full data control and security.

- Cloud option available with data residency choices.

- Enterprise-grade role-based access control and multi-project management.

- Works with your existing models and tools via API integrations.
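Role-based access control across multiple projects usually means each user holds a role per project, and each role grants a fixed set of actions. The sketch below illustrates that model only; the role names, action names, and `can` helper are hypothetical and do not reflect LangWatch's actual permission scheme.

```python
from enum import Enum


class Role(Enum):
    VIEWER = "viewer"
    MEMBER = "member"
    ADMIN = "admin"


# Hypothetical permission matrix: each role grants a set of allowed actions.
PERMISSIONS: dict[Role, set[str]] = {
    Role.VIEWER: {"read_traces"},
    Role.MEMBER: {"read_traces", "run_experiments"},
    Role.ADMIN: {"read_traces", "run_experiments", "manage_members"},
}


def can(user_roles: dict[str, Role], project: str, action: str) -> bool:
    """True if the user's role in this project grants the action; no role means no access."""
    role = user_roles.get(project)
    return role is not None and action in PERMISSIONS[role]
```

A user who is an admin on one project and a viewer on another can manage members on the first but cannot run experiments on the second, and has no access at all to projects where no role is assigned. Denying by default keeps a missing assignment from silently granting access.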

Safety and Compliance

- Implement safety guardrails and compliance reporting to help satisfy regulatory requirements.

- Transparent evaluations and auditable experiment histories.
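A guardrail pairs a check on incoming text with an auditable record of each decision. The sketch below shows that pattern with a deliberately naive, regex-based jailbreak filter that writes one JSON line per decision. The patterns and the `check_input` function are hypothetical; production guards typically rely on trained classifiers or LLM-based evaluators rather than keyword matching.

```python
import json
import re
from datetime import datetime, timezone

# Hypothetical, deliberately naive patterns; real jailbreak guards are far more robust.
JAILBREAK_PATTERNS = [
    re.compile(r"ignore (all )?(previous|prior) instructions", re.IGNORECASE),
    re.compile(r"pretend (you are|to be) .* without (any )?restrictions", re.IGNORECASE),
]


def check_input(user_input: str, audit_log: list[str]) -> bool:
    """Return True if the input is allowed; append an auditable JSON record either way."""
    matched = [p.pattern for p in JAILBREAK_PATTERNS if p.search(user_input)]
    allowed = not matched
    audit_log.append(json.dumps({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "allowed": allowed,
        "matched_patterns": matched,
    }))
    return allowed
```

Logging allowed inputs as well as blocked ones is what makes the history auditable: a reviewer can later see every decision and which rule triggered it. In a real system the log would go to durable, append-only storage instead of an in-memory list.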

Key Benefits

- Rapidly identify the best prompts and models with automated optimization.

- Build reliable, production-grade LLM pipelines with end-to-end observability.

- Collaborate with domain experts while maintaining governance and security.

Feature Spotlight

- End-to-end LLM app lifecycle management (monitor, evaluate, optimize)

- Model-agnostic support with broad interoperability

- Observability dashboards, alerts, and cost tracking

- Evaluation framework and guardrails for safety/compliance

- Optimization Studio to automatically refine prompts and demonstrations

- Versioned datasets and experiments for reproducible results

- Self-hosted, hybrid, or cloud deployment options

- Role-based access control and multi-project management

- Domain-expert collaboration workflows

- Extensive integrations (API, LangChain, DSPy, LangFlow, etc.)
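Reproducible experiments depend on knowing exactly which data each run used. One common approach, sketched below, derives a dataset version from a hash of its canonical JSON form and stores that version alongside the prompt, model, and score of each run. `dataset_version` and `ExperimentLog` are hypothetical names; this is not how LangWatch necessarily versions data.

```python
import hashlib
import json


def dataset_version(records: list[dict]) -> str:
    """Deterministic version ID: SHA-256 of canonical JSON (key order ignored, record order kept)."""
    canonical = json.dumps(records, sort_keys=True, separators=(",", ":"), ensure_ascii=False)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]


class ExperimentLog:
    """Hypothetical in-memory registry linking each run to its dataset version, prompt, and model."""

    def __init__(self) -> None:
        self.runs: list[dict] = []

    def record(self, records: list[dict], prompt: str, model: str, score: float) -> dict:
        run = {
            "run_id": len(self.runs) + 1,
            "dataset_version": dataset_version(records),
            "prompt": prompt,
            "model": model,
            "score": score,
        }
        self.runs.append(run)
        return run

    def best(self) -> dict:
        """Highest-scoring run recorded so far."""
        return max(self.runs, key=lambda run: run["score"])
```

Because keys are sorted before hashing, `{"q": "a", "a": "b"}` and `{"a": "b", "q": "a"}` produce the same version, while any change to a value produces a new one. Comparing scores is only meaningful between runs that share a `dataset_version`.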