LlamaChat


Introduction

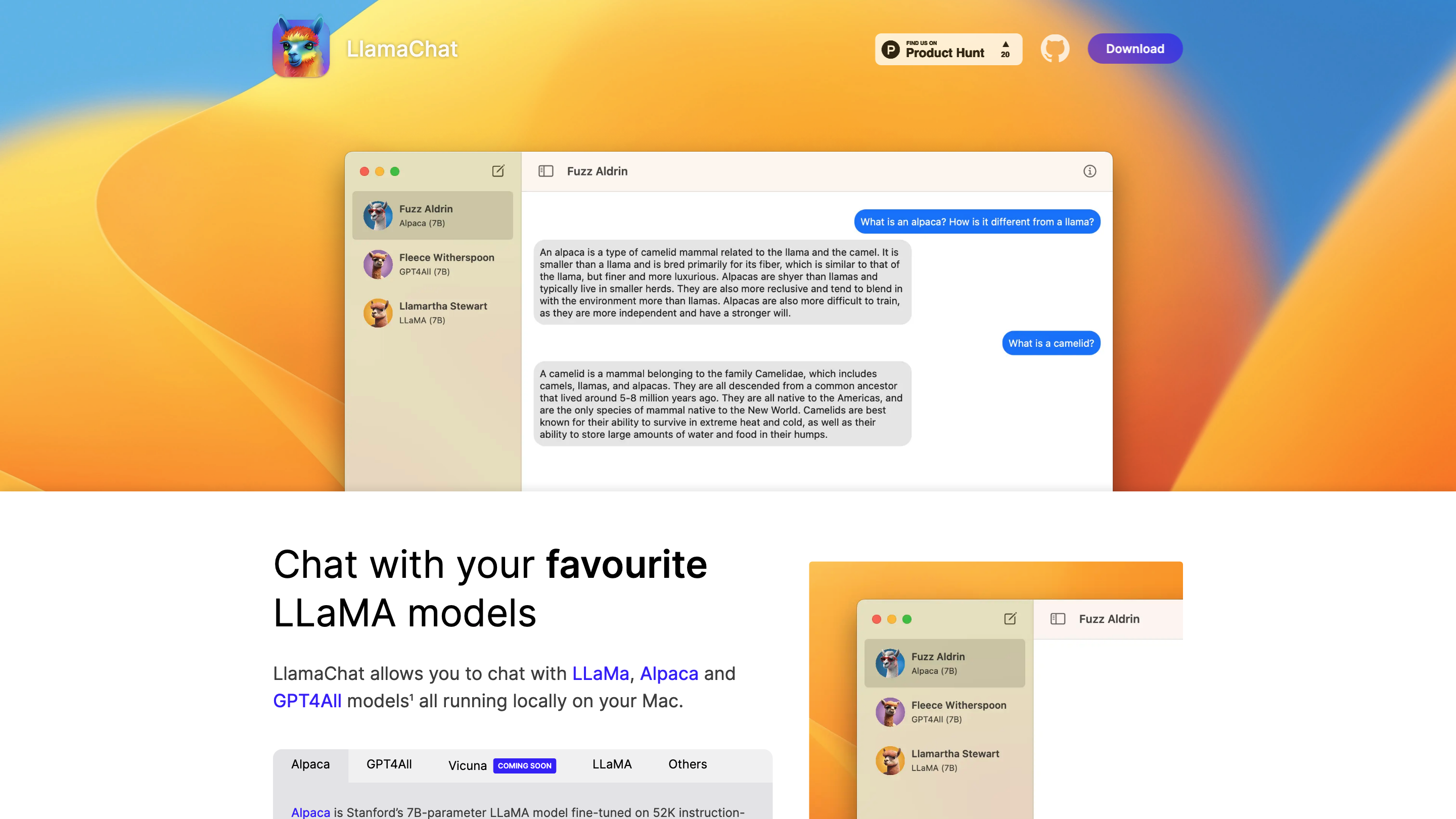

Chat with your favorite LLaMA, Alpaca, and GPT4All models on LlamaChat.

LlamaChat Product Information

LlamaChat is a locally-run chat application for macOS that lets you interact with open-source LLaMA-family models (LLaMA, Alpaca, Vicuna, GPT4All, etc.) directly on your Mac. It supports importing raw PyTorch model checkpoints or pre-converted .ggml model files, running entirely offline with no cloud dependency. The project emphasizes open-source accessibility, model interoperability, and local processing.

How to Use LlamaChat

- Install on macOS. Download the app or install via Homebrew (requires macOS 13 or later and a compatible processor):

brew install --cask llamachat

- Choose a model. Select one of the models you have imported (e.g., LLaMA, Alpaca, Vicuna, GPT4All).

- Import model files. Import raw published PyTorch checkpoints or pre-converted .ggml model files into LlamaChat.

- Chat locally. Start chatting with the selected model directly on your Mac.

Note: LlamaChat does not include any model files by default. You are responsible for acquiring and integrating the appropriate model files in accordance with each provider’s terms.
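The macOS requirement in the install step can be checked before running Homebrew. The following is a minimal sketch and not part of LlamaChat: `meets_min_macos` is a hypothetical helper name, and it checks only the macOS major version (13, as stated above), not the processor.

```shell
#!/bin/sh
# Hypothetical helper (not part of LlamaChat): succeeds when a macOS version
# string such as "13.4.1" has a major version of at least 13.
meets_min_macos() {
  major=${1%%.*}                # keep everything before the first dot
  case "$major" in
    ''|*[!0-9]*) return 1 ;;    # reject empty or non-numeric input
  esac
  [ "$major" -ge 13 ]
}

# sw_vers ships with macOS; on other systems this check is skipped.
if command -v sw_vers >/dev/null 2>&1; then
  version=$(sw_vers -productVersion)
  if meets_min_macos "$version"; then
    echo "macOS $version meets the stated minimum; run: brew install --cask llamachat"
  else
    echo "macOS $version is older than 13; LlamaChat is not expected to run"
  fi
fi
```

The version parsing lives in its own function so it can be exercised with any version string, independent of the machine the script runs on.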

Features

- Local-first chat with LLaMA-family models (LLaMA, Alpaca, Vicuna, and GPT4All, with more coming soon)

- Import support for raw PyTorch checkpoints and .ggml model files

- Fully open-source foundation (llama.cpp and llama.swift) and free to use

- Cross-processor compatibility (Apple Silicon and Intel; macOS 13+)

- Independent of any model provider partnerships or cloud services

- Clear licensing and attribution handling for models used

How It Works

- LlamaChat leverages open-source libraries (llama.cpp, llama.swift) to run large language models locally.

- Users bring their own model files; the app provides a UI to load and interact with these models.

- The software is designed to be 100% free and open-source, with ongoing community contributions.
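Because users supply their own model files, it can help to confirm which of the two supported kinds a file is before importing it. The sketch below guesses from the first four bytes of the file. It is an illustration under stated assumptions, not how LlamaChat itself inspects files: `classify_model_file` is a hypothetical name, the three ggml-family magic values are the ones used by llama.cpp for its pre-GGUF formats as far as I am aware, and the PyTorch branch assumes a checkpoint saved in the zip-based format (older pickle-only checkpoints would be reported as unknown).

```shell
#!/bin/sh
# Hypothetical helper (not part of LlamaChat): guess a model file's container
# format from its first four bytes.
classify_model_file() {
  # Render the first four bytes as one lowercase hex string, e.g. "746a6767".
  magic=$(head -c 4 "$1" | od -An -tx1 | tr -d ' \n')
  case "$magic" in
    # Assumed llama.cpp magics "ggml", "ggmf", "ggjt", stored little-endian,
    # so the bytes appear reversed on disk ("lmgg", "fmgg", "tjgg").
    6c6d6767|666d6767|746a6767) echo "ggml" ;;
    # Zip local-file header "PK\003\004", used by zip-based PyTorch checkpoints.
    504b0304) echo "pytorch-zip" ;;
    *) echo "unknown" ;;
  esac
}

# Classify every file passed on the command line (does nothing without arguments).
for f in "$@"; do
  printf '%s: %s\n' "$f" "$(classify_model_file "$f")"
done
```

A result of `unknown` does not mean the file is unusable, only that this check could not identify it.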

Safety and Legal Considerations

- You are responsible for obtaining and using models in compliance with their licenses and terms.

- As an independent application, LlamaChat is not affiliated with or endorsed by Meta Platforms, Stanford, Nomic AI, or other entities mentioned in the project notes.

Core Features

- Local, offline execution of LLaMA-family models on macOS

- Import both PyTorch checkpoints and .ggml model files

- Open-source, freely available under the project licenses

- Compatibility with Apple Silicon and Intel Macs (macOS 13+)

- No bundled models; user-provided models only

- Simple, self-contained setup with community-driven development