LM Studio

Introduction

User-friendly AI model software

LM Studio Product Information

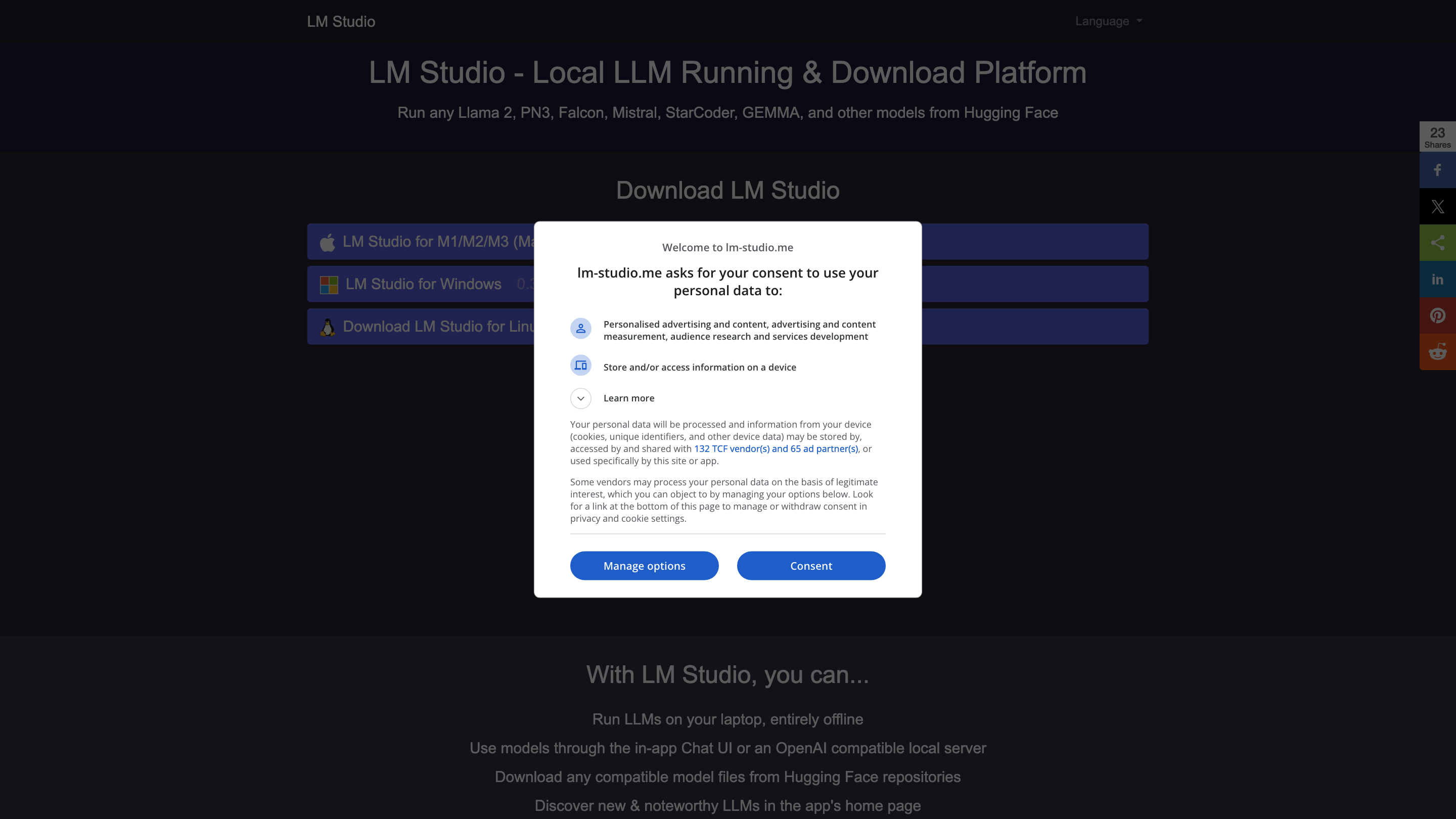

LM Studio (Local LLM Running & Download Platform) is a user-friendly desktop application that simplifies working with open-source large language models (LLMs). Through an intuitive interface, it lets you explore, download, and run AI models directly on your computer, completely offline. Supported models include Llama 2, Phi-3, Falcon, Mistral, StarCoder, Gemma, and other models hosted on Hugging Face. LM Studio is available for Apple Silicon Macs (M1/M2/M3), Windows, and Linux, and you can either chat with models inside the app or serve them through an OpenAI-compatible local server. The platform emphasizes accessibility, offline operation, and easy experimentation with models, with no programming knowledge required.

Key Capabilities

- Run LLMs locally on your laptop entirely offline

- In-app Chat UI or OpenAI-compatible local server integration

- Browse, download, and run models from Hugging Face repositories

- Support for multiple models and easy switching

- Playground mode to run multiple models simultaneously (advanced use)

- Hardware-aware model compatibility guidance and estimates

- Free for individual users (subject to current terms)

- Cross-platform availability: macOS, Windows, Linux

- In-app discovery of noteworthy new LLMs, updated regularly
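The local server accepts requests in the OpenAI chat-completions format, so a plain HTTP client is enough to use it. The following is a minimal sketch using only the Python standard library. It assumes the server has been started from within LM Studio at its default address, `http://localhost:1234/v1`; the model identifier `local-model` in the usage line is a hypothetical placeholder for whatever identifier the app displays for your loaded model.

```python
import json
import urllib.request

# Assumption: LM Studio's local server is running at its default address.
BASE_URL = "http://localhost:1234/v1"


def build_chat_request(model: str, user_prompt: str, temperature: float = 0.7) -> dict:
    """Build an OpenAI-style chat-completions request body."""
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": user_prompt},
        ],
        "temperature": temperature,
    }


def extract_reply(response: dict) -> str:
    """Pull the assistant's text out of an OpenAI-style response body."""
    return response["choices"][0]["message"]["content"]


def chat(model: str, user_prompt: str) -> str:
    """POST the request to /chat/completions and return the reply text."""
    body = json.dumps(build_chat_request(model, user_prompt)).encode("utf-8")
    req = urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    # Local generation can be slow on modest hardware, so allow a generous timeout.
    with urllib.request.urlopen(req, timeout=120) as resp:
        return extract_reply(json.load(resp))
```

With the server running, `print(chat("local-model", "Explain what a context window is in one sentence."))` would print the model's reply. Keeping request construction and response parsing in separate functions makes them easy to check without a running server.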

How to Use LM Studio

- Install LM Studio: Download the version for your OS from the official LM Studio site and run the installer.

- Browse and Download Models: Access Hugging Face repositories within the app, choose compatible models, and download them locally.

- Run Models: Use the in-app Chat UI to interact with a selected model or enable an OpenAI-compatible local server for external tooling.

- Experiment with Playgrounds: If your version includes Playground mode, run multiple models simultaneously and compare their outputs side by side.
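For scripted comparisons outside the Playground, one approach is to ask the server which models it exposes via `GET /v1/models` (part of the OpenAI-compatible API) and send the same prompt to each. This sketch assumes the default server address and that your LM Studio version can serve more than one loaded model at a time; `compare_models` and its `send` parameter are hypothetical helpers, with `send` injectable so the logic can be exercised without a server.

```python
import json
import urllib.request
from collections.abc import Callable

BASE_URL = "http://localhost:1234/v1"  # assumed default LM Studio server address


def _post_json(url: str, payload: dict) -> dict:
    """POST a JSON payload and decode the JSON response."""
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=300) as resp:
        return json.load(resp)


def parse_model_ids(models_response: dict) -> list[str]:
    """Extract model identifiers from an OpenAI-style /models response."""
    return [entry["id"] for entry in models_response.get("data", [])]


def list_models() -> list[str]:
    """Ask the local server which models it currently exposes."""
    with urllib.request.urlopen(f"{BASE_URL}/models", timeout=10) as resp:
        return parse_model_ids(json.load(resp))


def compare_models(
    prompt: str,
    model_ids: list[str],
    send: Callable[[str, dict], dict] = _post_json,
) -> dict[str, str]:
    """Send the same prompt to each model and collect the replies by model id."""
    replies = {}
    for model_id in model_ids:
        payload = {
            "model": model_id,
            "messages": [{"role": "user", "content": prompt}],
            # Low temperature reduces sampling randomness, making outputs easier to compare.
            "temperature": 0,
        }
        data = send(f"{BASE_URL}/chat/completions", payload)
        replies[model_id] = data["choices"][0]["message"]["content"]
    return replies
```

With the server running, `compare_models("Summarize TCP in one line.", list_models())` returns a dict mapping each model identifier to its reply.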

Requirements & Compatibility

- Apple Silicon Mac (M1/M2/M3) with macOS 13.6+ or a Windows/Linux PC with AVX2 support

- 16 GB+ of RAM recommended; 6 GB+ of VRAM recommended for PCs

- NVIDIA/AMD GPUs supported for acceleration

- No programming required; everything can be done through the GUI
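The hardware figures above are easier to interpret with a back-of-the-envelope estimate of how much memory a model needs. A common rule of thumb is that the weights take roughly parameters × bits-per-weight ÷ 8 bytes, plus headroom for the context cache and runtime buffers. The sketch below uses an assumed 20% overhead factor and is not LM Studio's own compatibility estimator, which may use different numbers; real quantized files also often use slightly more than their nominal bit width. It additionally shows one way to check for AVX2 on Linux by reading `/proc/cpuinfo`. All function names are hypothetical.

```python
def estimate_model_gb(params_billions: float, bits_per_weight: float, overhead: float = 0.2) -> float:
    """Rough memory estimate in GB (1 GB = 1e9 bytes) for weights plus overhead.

    The 20% default overhead is an assumption standing in for the KV cache and runtime.
    """
    weight_bytes = params_billions * 1e9 * bits_per_weight / 8
    return weight_bytes * (1 + overhead) / 1e9


def fits_in_memory(params_billions: float, bits_per_weight: float, available_gb: float) -> bool:
    """True if the rough estimate fits within the given memory budget."""
    return estimate_model_gb(params_billions, bits_per_weight) <= available_gb


def has_avx2(cpuinfo_text: str) -> bool:
    """Return True if any 'flags' line in /proc/cpuinfo-style text lists avx2."""
    for line in cpuinfo_text.splitlines():
        if line.startswith("flags"):
            _, _, flags = line.partition(":")
            if "avx2" in flags.split():
                return True
    return False


def host_has_avx2(path: str = "/proc/cpuinfo") -> bool:
    """Linux-only check; returns False if the file cannot be read (e.g. on macOS)."""
    try:
        with open(path, encoding="utf-8") as f:
            return has_avx2(f.read())
    except OSError:
        return False
```

Under these assumptions a 7B-parameter model quantized to 4 bits comes out at about 4.2 GB, well inside the 16 GB recommendation, while a 70B model at 4 bits (about 42 GB) would not fit.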

Safety, Licensing & Support

- Free for individual users as of the latest update; verify current pricing on the official site

- Before any commercial use, review the licensing terms in LM Studio’s agreements

- Hardware compatibility guidance helps reduce trial-and-error when selecting models

Core Features

- Local offline execution of LLMs

- GUI-based interaction with models (no coding required)

- Download and manage models from Hugging Face repositories

- Run multiple models simultaneously (Playground mode)

- OpenAI-compatible local server option for integration

- Cross-platform support (macOS, Windows, Linux)

- Hardware-aware compatibility estimates to guide model selection

- Free for individual users (subject to current terms)