MagicAnimate

Open siteIntroduction

Create animated videos from images and motion videos.

Featured

ElevenLabs

The voice of technology. Bringing the world's knowledge, stories and agents to life

Hailuo AI

It's like a Hollywood studio in your pocket!

Wan AI

Video & Image Generation Model from Alibaba Cloud

Chatbase

Chatbase is an AI chatbot builder that uses your data to create a chatbot for your website.

MagicAnimate Product Information

MagicAnimate: Temporally Consistent Human Image Animation using Diffusion Model

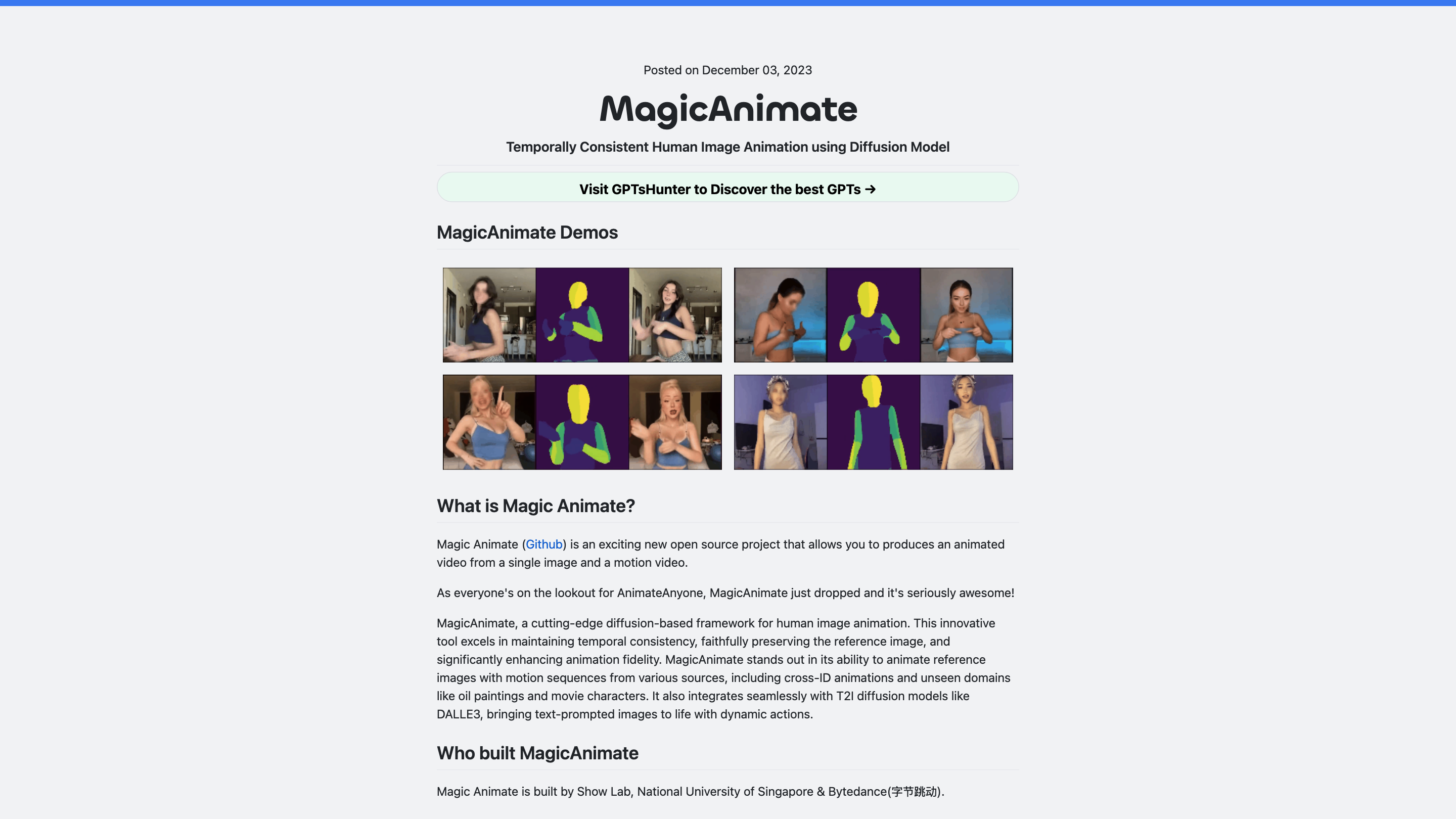

MagicAnimate is an open-source diffusion-based framework that turns a single image into an animated video guided by a motion video or pose data. It emphasizes temporal consistency, faithful preservation of the reference image, and high animation fidelity. It supports animating reference images with motion sequences from diverse sources, including cross-ID animations and unseen domains such as oil paintings and movie characters. It also integrates with text-to-image diffusion models (e.g., DALLE3) to bring text-prompted concepts to life as dynamic actions.

What it is

- A cutting-edge tool developed by Show Lab, National University of Singapore & Bytedance that enables animating a static image using motion input.

- A diffusion-model-based pipeline focused on maintaining temporal coherence across frames while preserving the reference identity.

- Compatible with various motion sources (video, keypoints, OpenPose data) and capable of stylizing outputs to fit different domains or aesthetics.

Getting Started

- Prerequisites: Python >= 3.8, CUDA >= 11.3, FFmpeg

- Installation (conda):

- conda env create -f environment.yml

- conda activate manimate

- Models: download pretrained base models for Stable Diffusion v1.5 and MSE-finetuned VAE, along with MagicAnimate checkpoints

- Online demos: Try MagicAnimate on HuggingFace, Replicate, Colab, or other provided demo links

- API access: Replicate API available for generating animated videos

How it works (high level)

- Start from a single reference image and a motion video (or pose data)

- Use diffusion-based generation to propagate motion cues across frames while constraining the output to resemble the original reference

- Optional: convert input motion to OpenPose keypoints to drive animation

- Supports OpenPose-based workflows via integration models (magic-animate-openpose) and OpenPose-based motion extraction

How to generate animations

- Prepare inputs: a reference image and a motion video or pose data

- Load base models and checkpoints

- Run the diffusion-based animator with appropriate parameters (num_inference_steps, guidance_scale, seed)

- Export the resulting animated video

Platforms and Demos

- Replicate API examples: run magic-animate models with inputs including image, motion video, and inference parameters

- HuggingFace and Colab demos available for quick experimentation

Limitations and tips

- Distortion may occur in faces and hands in some outputs; may require checkpoint tuning

- Default configuration may shift styling (e.g., from anime to realism) depending on the DensePose-driven pipeline; adjust the checkpoint to change the style

- Anime styling can alter body proportions due to base model behaviors; consider model adjustments for desired aesthetics

- OpenPose-based workflows exist to convert motion streams into compatible guidance data

Safety and Ethics

- Use responsibly: respect privacy and consent when animating individuals or public figures; avoid misrepresentations or harmful use cases

How to Use MagicAnimate

- Install dependencies and download required models

- Prepare image and motion input

- Run the animation pipeline with your chosen parameters

- Retrieve the generated animated video

Use Cases

- Animate portraits or characters from films, games, or artwork

- Create stylized motion videos from static images

- Explore cross-domain animation (e.g., bring movement to oil paintings)

Considerations

- Computationally intensive; requires GPU acceleration

- Quality depends on model checkpoints and input data quality

Core Features

- Temporally consistent human image animation using diffusion models

- Supports motion inputs from video, pose data (OpenPose), and text-guided prompts

- High fidelity preservation of the reference image/identity

- Cross-domain animation capabilities (e.g., oil paintings, movie characters)

- Integration with Stable Diffusion v1.5 and MSE-finetuned VAE checkpoints

- Open-source with online demos (HuggingFace, Replicate, Colab)

- API access via Replicate for programmatic use

- OpenPose workflow support via dedicated models (magic-animate-openpose)

- Configurable via inference steps, guidance scale, and seeds to control fidelity and variation