ManagePrompt

Introduction

Streamline AI development and deployment

ManagePrompt Product Information

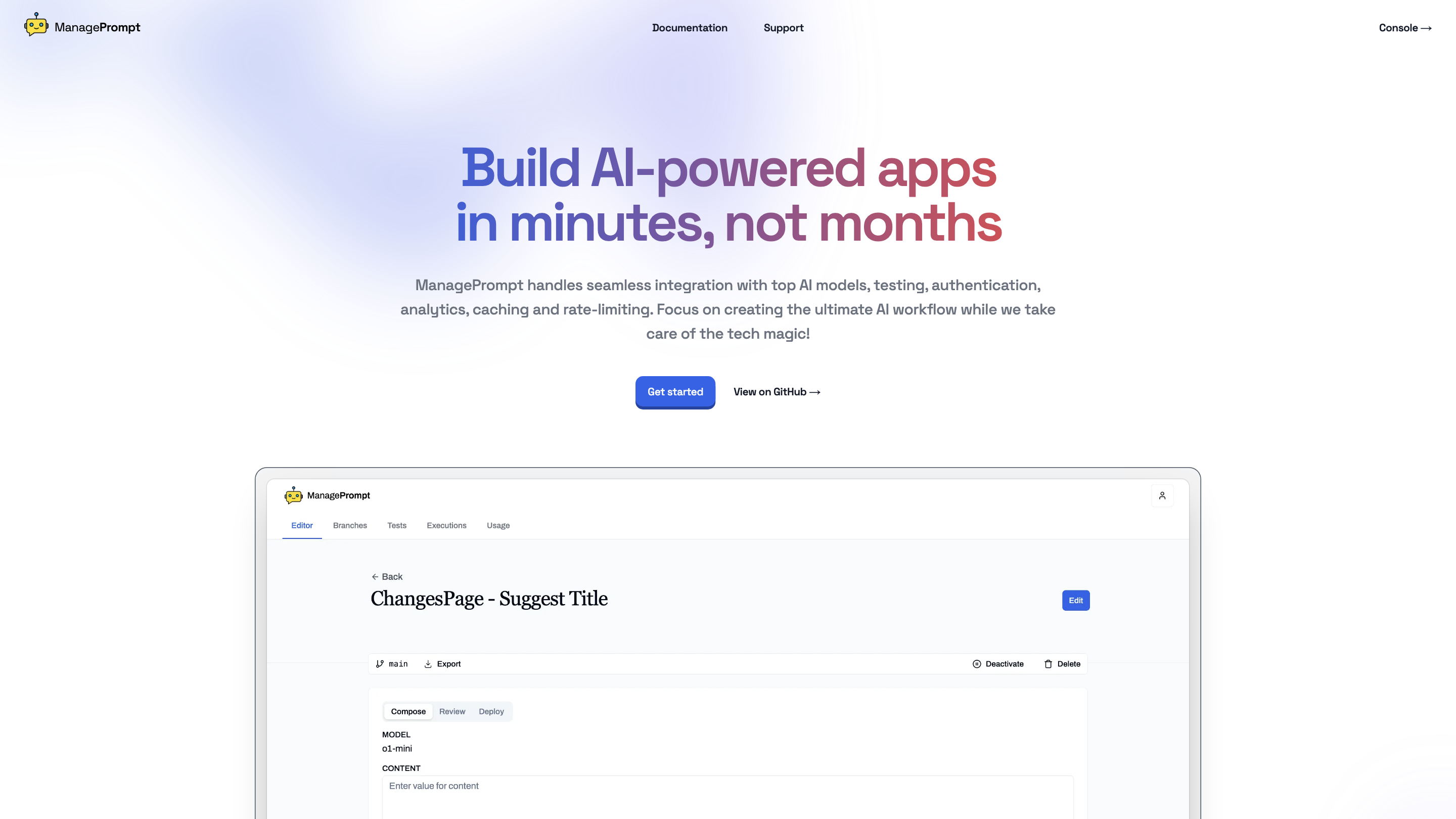

ManagePrompt is a platform for building and deploying AI-powered applications. It combines integrations with major AI models with workflow management, testing, authentication, analytics, caching, and rate limiting, so developers can ship AI products faster and more securely.

Overview

- Build AI-powered apps in minutes, not months

- Integrates with multiple AI models via a single API surface (OpenAI, Meta, Google, Mixtral, Anthropic, and more)

- Handles infrastructure concerns while you focus on your core logic

- Supports rapid iteration with branching and testing for prompts and models

- Security controls include single-use tokens and rate limiting to filter malicious requests
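
The "single API surface" idea in the list above can be pictured as a small router that hides provider differences behind one call. The sketch below is illustrative only: `ModelRouter`, the adapter functions, and the model names are hypothetical and are not ManagePrompt's actual API. The adapters are stubs that echo the prompt rather than calling a real provider.

```python
from typing import Callable, Dict

# An adapter takes a prompt string and returns the model's text output.
# Hypothetical: real adapters would wrap each provider's SDK.
Adapter = Callable[[str], str]


def fake_openai(prompt: str) -> str:
    # Stub standing in for a real provider call.
    return f"[openai] {prompt}"


def fake_anthropic(prompt: str) -> str:
    # Stub standing in for a real provider call.
    return f"[anthropic] {prompt}"


class ModelRouter:
    """Routes a request to whichever adapter is registered for a model name."""

    def __init__(self) -> None:
        self._adapters: Dict[str, Adapter] = {}

    def register(self, model: str, adapter: Adapter) -> None:
        self._adapters[model] = adapter

    def complete(self, model: str, prompt: str) -> str:
        if model not in self._adapters:
            raise ValueError(f"no adapter registered for model {model!r}")
        return self._adapters[model](prompt)


router = ModelRouter()
router.register("openai-example", fake_openai)
router.register("anthropic-example", fake_anthropic)

# Swapping models is a change of one argument; the calling code stays the same.
print(router.complete("openai-example", "Hello"))
print(router.complete("anthropic-example", "Hello"))
```

With this shape, application code depends only on `complete(model, prompt)`, which is what makes swapping models cheap.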

Key Capabilities

- Prompt and model management: tweak prompts, swap models, and deploy changes instantly

- Testing and iteration: branches and tests allow evaluation of several prompt-model variants

- Deployment: instantly deploy changes to end users with minimal downtime

- Security and governance: single-use tokens, rate limiting, and request filtering for safer operations

- Analytics: insights into usage, performance, and model effectiveness

- Caching: performance optimizations to reduce latency and cost

- Model variety: access to multiple models across major providers from a single API
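
To make the caching capability above concrete, here is a minimal sketch of a response cache, assuming responses can be reused when the model name and prompt are identical. `ResponseCache` and its parameters are hypothetical names for illustration; the listing does not describe how ManagePrompt implements caching.

```python
import hashlib
import time
from typing import Callable, Dict, Tuple


class ResponseCache:
    """Caches model outputs keyed by (model, prompt), with a time-to-live."""

    def __init__(
        self,
        ttl_seconds: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so expiry can be tested without sleeping
        self._entries: Dict[str, Tuple[float, str]] = {}

    @staticmethod
    def _key(model: str, prompt: str) -> str:
        # A NUL separator keeps ("ab", "c") distinct from ("a", "bc").
        raw = f"{model}\x00{prompt}".encode("utf-8")
        return hashlib.sha256(raw).hexdigest()

    def get_or_compute(
        self,
        model: str,
        prompt: str,
        compute: Callable[[str, str], str],
    ) -> str:
        key = self._key(model, prompt)
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and now - entry[0] < self._ttl:
            return entry[1]  # fresh hit: skip the model call entirely
        value = compute(model, prompt)
        self._entries[key] = (now, value)
        return value
```

A cache hit avoids a model call altogether, which is where both the latency and the cost savings come from.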

How It Works

- Connect your AI models (OpenAI, Meta, Google, Mixtral, Anthropic, etc.)

- Define prompts and workflows for your applications

- Use branches to experiment with different prompts and models

- Run tests, review results, and deploy winning configurations

- Monitor and optimize with built-in analytics and security controls
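
The branch, test, and deploy steps above might look roughly like the following. This is a sketch under stated assumptions: `Branch`, `PromptCase`, `score`, and `pick_winner` are hypothetical names, a test passes when the output contains an expected substring, and the model call is supplied by the caller. ManagePrompt's real branching and testing features may work differently.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass(frozen=True)
class Branch:
    name: str
    model: str
    template: str  # filled with str.format; must contain an {input} placeholder


@dataclass(frozen=True)
class PromptCase:
    input: str
    must_contain: str  # substring the output is expected to include


# Caller-supplied function: (model, prompt) -> output text.
RunModel = Callable[[str, str], str]


def score(branch: Branch, cases: List[PromptCase], run_model: RunModel) -> float:
    """Return the fraction of cases whose output contains the expected text."""
    if not cases:
        return 0.0
    passed = 0
    for case in cases:
        prompt = branch.template.format(input=case.input)
        output = run_model(branch.model, prompt)
        if case.must_contain.lower() in output.lower():
            passed += 1
    return passed / len(cases)


def pick_winner(
    branches: List[Branch], cases: List[PromptCase], run_model: RunModel
) -> Tuple[Branch, float]:
    """Score every branch and return the best one (first wins on ties)."""
    scored = [(b, score(b, cases, run_model)) for b in branches]
    return max(scored, key=lambda pair: pair[1])
```

The winning branch is the configuration you would then deploy; substring matching is only one possible test criterion.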

How to Get Started

- Get started with ManagePrompt to build and deploy AI workflows quickly

- View the project source and contributions on GitHub

Use Cases

- Rapid prototyping of AI-powered chatbots, assistants, or automation tools

- Testing multiple AI models and prompts to find optimal configurations

- Deploying AI workflows with built-in security and monitoring

Core Features

- Seamless integration with multiple AI models (OpenAI, Meta, Google, Mixtral, Anthropic, and more)

- Centralized prompt and model management for rapid experimentation

- Instant deployment of prompt/model changes to production

- Branching and tests to evaluate multiple prompt-model variants

- Security controls including single-use tokens and rate limiting

- Analytics and monitoring for usage, performance, and reliability

- Caching to improve response times and reduce costs

- Support for building AI-powered apps and workflows with minimal infrastructure hassle
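
The two security controls named above, single-use tokens and rate limiting, can be sketched as follows. This is a generic illustration, not ManagePrompt's implementation: `SingleUseTokens` and `TokenBucket` are hypothetical names, state is kept in memory, and the rate limiter uses the common token-bucket technique, which the listing does not confirm.

```python
import secrets
import time
from typing import Callable, Set


class SingleUseTokens:
    """Issues random tokens that can each be redeemed exactly once."""

    def __init__(self) -> None:
        self._unused: Set[str] = set()

    def issue(self) -> str:
        token = secrets.token_urlsafe(32)
        self._unused.add(token)
        return token

    def redeem(self, token: str) -> bool:
        # Removing on first use means a replayed token is rejected.
        if token in self._unused:
            self._unused.remove(token)
            return True
        return False


class TokenBucket:
    """Allows bursts up to `capacity`, refilled at `refill_per_second`."""

    def __init__(
        self,
        capacity: int,
        refill_per_second: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._capacity = float(capacity)
        self._rate = refill_per_second
        self._clock = clock  # injectable so tests need not sleep
        self._tokens = float(capacity)
        self._last = clock()

    def allow(self) -> bool:
        now = self._clock()
        elapsed = now - self._last
        self._last = now
        # Refill for the elapsed time, never exceeding capacity.
        self._tokens = min(self._capacity, self._tokens + elapsed * self._rate)
        if self._tokens >= 1.0:
            self._tokens -= 1.0
            return True
        return False
```

In a request handler, a call would typically proceed only if both `redeem(token)` and `allow()` return `True`; a production setup would keep this state in shared storage rather than process memory.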