OpenRouter

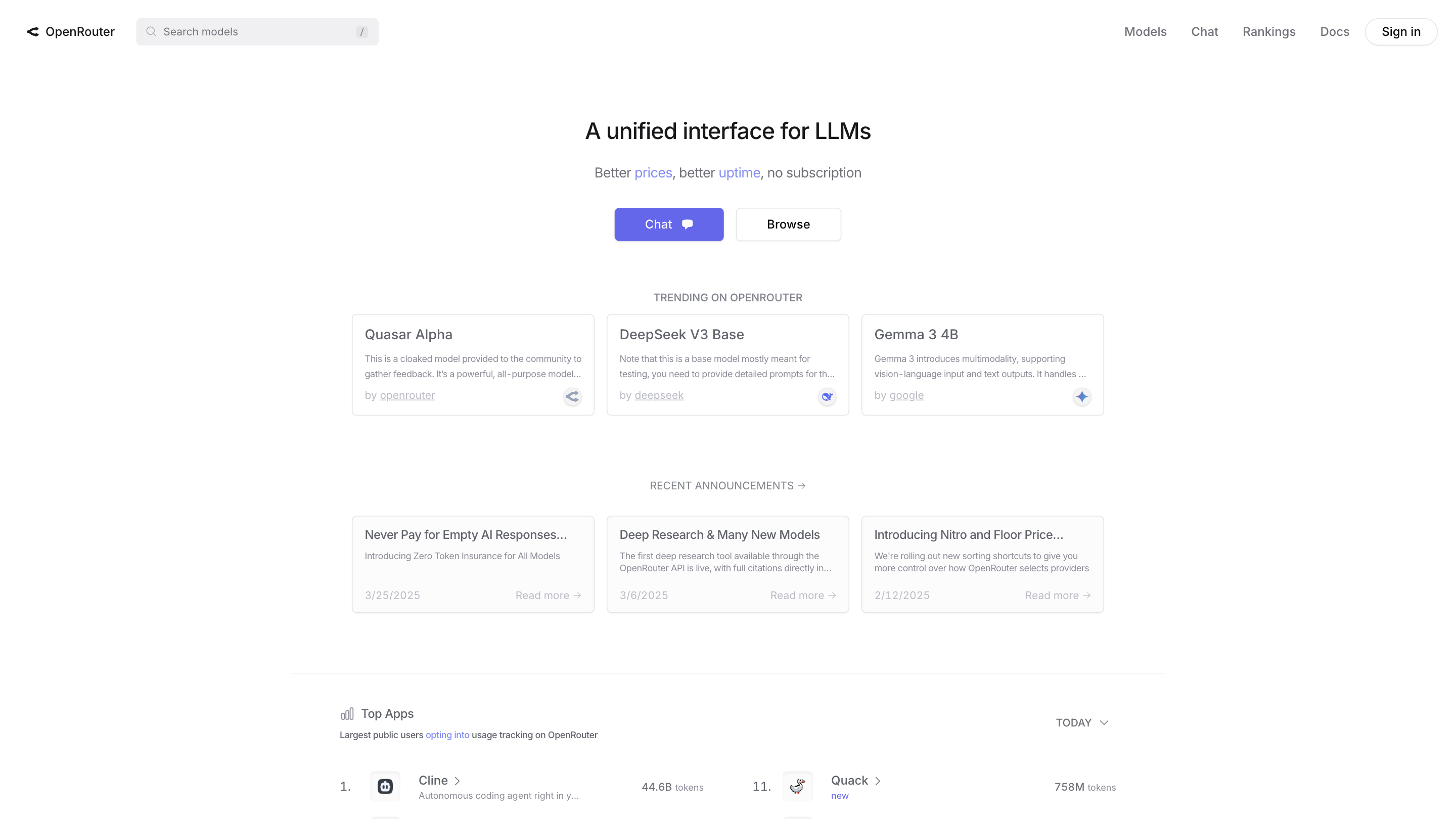

Introduction

A router for AI models and LLMs.

OpenRouter Product Information

OpenRouter, billed as "The Unified Interface For LLMs," is a multi-provider AI access platform that lets you reach major language models through a single, unified API. It emphasizes low latency, high availability, cost efficiency, and fine-grained data policies, so developers can route requests across multiple providers without vendor lock-in. The platform showcases a broad ecosystem of models, real-time routing visuals, and tooling to manage access, keys, and credits from one central place.

How OpenRouter Works

- Sign up and set up: Create an account and, optionally, configure an organization for team use.

- Get credits and API key: Purchase credits and generate an OPENROUTER_API_KEY to authenticate requests.

- Unified access: Use a single API surface that can route requests to various providers (OpenAI, Anthropic, etc.) via one SDK or endpoint.

- Routing & availability: The system automatically routes around outages and balances latency and cost by choosing the optimal provider per request.

- Policies & data control: Apply fine-grained data policies to ensure prompts/outputs go only to trusted models/providers.

OpenRouter supports OpenAI-compatible endpoints, making integration straightforward for developers familiar with OpenAI's API, while expanding access to additional providers and models.
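
As a minimal sketch of what "OpenAI-compatible" means in practice, the snippet below builds an authenticated chat request using only the Python standard library. The endpoint URL, the `openai/gpt-4o` model ID, and the helper name `build_chat_request` are assumptions made for illustration and are not taken from this page; check them against OpenRouter's own API reference before relying on them.

```python
import json
import os
import urllib.request

# Assumed endpoint: OpenRouter's OpenAI-compatible chat completions URL.
OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"


def build_chat_request(prompt, model, api_key=None):
    """Build (but do not send) an OpenAI-style chat completion request."""
    # The key is read from OPENROUTER_API_KEY unless one is passed explicitly.
    key = api_key or os.environ.get("OPENROUTER_API_KEY", "")
    if not key:
        raise ValueError("OPENROUTER_API_KEY is not set")
    body = {
        "model": model,  # illustrative ID such as "openai/gpt-4o"
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        OPENROUTER_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Passing the returned object to `urllib.request.urlopen` would perform the actual call. Because the request shape matches OpenAI's, existing OpenAI client code can usually be reused by changing only the base URL and the key.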

Core Capabilities

- Access a wide range of models from multiple providers through a single API

- Real-time model routing visualization and performance data

- High availability with automatic failover to maintain uptime

- Edge-based deployment to minimize latency (~30ms added per request)

- Simple credits-based pricing system

- Fine-grained data policies to control data flow to providers

- Easy integration with OpenAI SDKs and compatibility with existing codebases
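
To make the failover and latency/cost balancing ideas concrete, here is a toy model of a router. It is not OpenRouter's implementation, which this page does not describe. The `Provider` fields, the ranking rule, and the `send` callback are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Provider:
    # All fields are hypothetical; a real router tracks far richer signals.
    name: str
    healthy: bool
    latency_ms: float
    cost_per_1k_tokens: float


def rank_providers(providers, max_latency_ms):
    """Order healthy providers: those within the latency budget first, then cheapest."""
    healthy = [p for p in providers if p.healthy]
    return sorted(
        healthy,
        key=lambda p: (p.latency_ms > max_latency_ms, p.cost_per_1k_tokens, p.latency_ms),
    )


def route(providers, send, max_latency_ms=1000.0):
    """Try providers in ranked order and fail over to the next one on any error."""
    errors = []
    for provider in rank_providers(providers, max_latency_ms):
        try:
            return provider.name, send(provider)
        except Exception as exc:  # outage, timeout, rate limit, ...
            errors.append(f"{provider.name}: {exc}")
    raise RuntimeError("all providers failed: " + "; ".join(errors))
```

The caller supplies `send`, so the same loop works with any transport. A hosted router does this work on the server side, which is why client code only sees a single endpoint.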

How to Use OpenRouter

- Create an account and, if needed, an organization for your team.

- Purchase credits and generate your API key (OPENROUTER_API_KEY).

- Choose a provider/model or rely on automatic routing for each request.

- Integrate using the OpenRouter API/SDK as you would with OpenAI, but with access to multiple models.

- Monitor performance with routing visualizations and adjust policies as needed.
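
The choice between naming a model and relying on automatic routing can be sketched as a one-line default. This assumes that `openrouter/auto` is the model ID that delegates model selection to OpenRouter and that responses follow the OpenAI chat completion shape. Both are assumptions to verify, and the helper names are made up for this example.

```python
import json

# Assumption: this model ID asks OpenRouter to pick a model for the request.
AUTO_MODEL = "openrouter/auto"


def build_payload(prompt, model=None):
    """Return an OpenAI-style chat payload; use auto routing when no model is given."""
    return {
        "model": model or AUTO_MODEL,
        "messages": [{"role": "user", "content": prompt}],
    }


def extract_reply(response_text):
    """Return the assistant text and the reported model from a raw JSON response."""
    data = json.loads(response_text)
    return data["choices"][0]["message"]["content"], data.get("model")
```

Logging the model returned by `extract_reply` alongside each request is one simple way to see which model actually answered when auto routing is in use.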

Data Policies and Safety

- Custom Data Policies: Define which providers can handle your prompts and data.

- Privacy-forward: Route data only to trusted models; configurable to align with compliance needs.

- Usage guidelines: Follow best practices and each provider's terms of use to avoid misuse.
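
A per-request data policy might look like the sketch below, which attaches a `provider` preferences object to an existing payload. The field names `order`, `allow_fallbacks`, and `data_collection` are recalled from OpenRouter's provider routing options and are not confirmed by this page, so treat them as assumptions. The helper `with_provider_policy` is hypothetical.

```python
def with_provider_policy(payload, allowed_providers, allow_fallbacks=False,
                         deny_data_collection=True):
    """Return a copy of payload with an assumed `provider` preferences object."""
    if not allowed_providers:
        raise ValueError("at least one trusted provider is required")
    policy = {
        "order": list(allowed_providers),    # assumed field: preferred providers
        "allow_fallbacks": allow_fallbacks,  # assumed field: stay within the list
    }
    if deny_data_collection:
        # Assumed field: skip providers that may retain or train on prompts.
        policy["data_collection"] = "deny"
    return {**payload, "provider": policy}
```

The function returns a new dictionary and leaves the original payload unchanged, so one base payload can be paired with different policies for different workloads.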

Use Cases

- Multi-model experimentation and A/B testing across different providers

- Global teams requiring reliable access without vendor lock-in

- Cost-optimized routing by selecting providers with favorable latency/cost profiles

- Compliance-heavy applications needing strict data routing controls
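
For the A/B testing use case, one common approach is to assign each user to a model variant with a stable hash, so the same user always sees the same model. This is a generic technique and not an OpenRouter feature. The function name and the model IDs in the usage note are illustrative.

```python
import hashlib


def assign_model(user_id, variants):
    """Deterministically map a user to one model variant via a stable hash."""
    if not variants:
        raise ValueError("variants must not be empty")
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    # Use the first 8 bytes as an integer bucket; the split is roughly even.
    bucket = int.from_bytes(digest[:8], "big") % len(variants)
    return variants[bucket]
```

Calling `assign_model("user-1", ["openai/gpt-4o", "anthropic/claude-3.5-sonnet"])` returns the same variant on every call, and the result can be passed straight into the `model` field of a request.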

Related Tools & Ecosystem

- OpenRouter integrates with major model hosts and offers a unified interface compatible with existing OpenAI workflows

- A focus on routing, availability, and data governance for enterprise-scale AI deployments

Feature Highlights

- Unified access to major AI models through a single API

- Auto-routing and high availability to minimize downtime

- Edge latency optimization (~30ms extra per request)

- OpenAI-compatible API with extended provider support

- Credits-based pricing and centralized API key management

- Fine-grained data policies for prompt and data routing control

- Developer-friendly SDKs and tooling for quick integration