PromptLayer


Introduction

The first platform for prompt engineers to streamline the engineering process.

PromptLayer Product Information

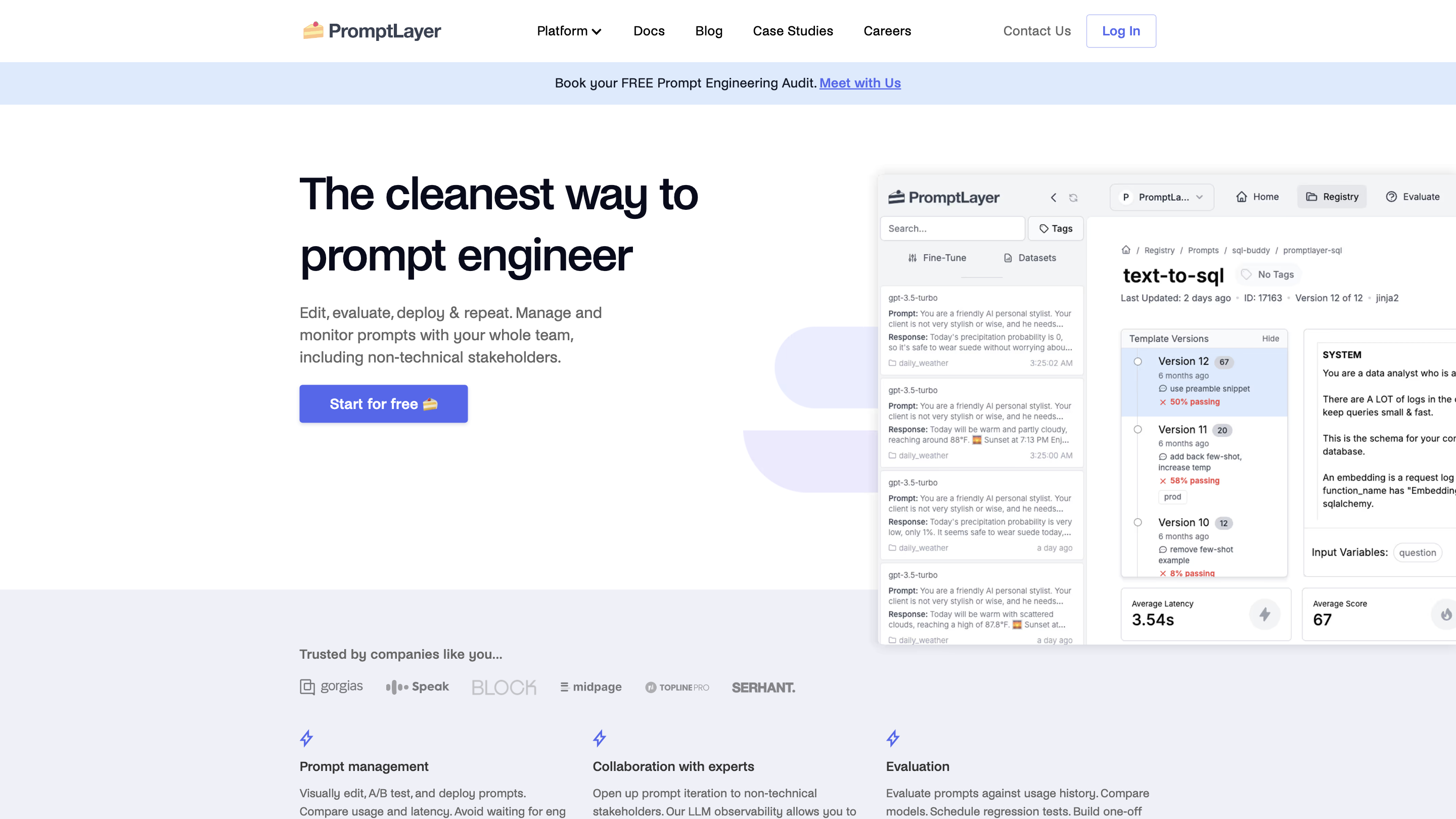

PromptLayer: The cleanest way to prompt engineer

PromptLayer is a platform for prompt management, evaluations, and LLM observability. It enables teams to edit, evaluate, deploy, and monitor prompts with a focus on collaboration, non-technical participation, and end-to-end prompt lifecycle management.

How it works

- Visually edit, evaluate, and deploy prompts from a centralized Prompt Registry.

- Perform A/B testing and compare usage, latency, and model performance across prompt variants.

- Collaborate with non-technical stakeholders by sharing prompts, running evaluations, and iterating in a team-friendly environment.

- Leverage LLM observability to read logs, identify edge cases, and improve prompts over time.

- Run one-off batch jobs and backtests to validate prompts against past data.
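
The A/B comparison step above can be sketched in a few lines of plain Python. This is only an illustration of the idea, not PromptLayer's API: the function names `assign_variant` and `summarize`, and the log fields `variant`, `latency_ms`, and `score`, are all hypothetical.

```python
import hashlib
from collections import defaultdict
from statistics import mean


def assign_variant(user_id: str, variants: list[str]) -> str:
    """Deterministically map a user to one prompt variant (hypothetical helper).

    Hashing the user id keeps the assignment stable across requests,
    so the same user always sees the same variant.
    """
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    return variants[int.from_bytes(digest[:8], "big") % len(variants)]


def summarize(logs: list[dict]) -> dict:
    """Aggregate request count, mean latency, and mean score per variant.

    Each log entry is assumed to look like:
    {"variant": "A", "latency_ms": 120, "score": 1.0}
    """
    grouped = defaultdict(list)
    for entry in logs:
        grouped[entry["variant"]].append(entry)

    return {
        variant: {
            "requests": len(entries),
            "mean_latency_ms": mean(e["latency_ms"] for e in entries),
            "mean_score": mean(e["score"] for e in entries),
        }
        for variant, entries in grouped.items()
    }


if __name__ == "__main__":
    example_logs = [
        {"variant": "A", "latency_ms": 100, "score": 1.0},
        {"variant": "A", "latency_ms": 200, "score": 0.0},
        {"variant": "B", "latency_ms": 150, "score": 1.0},
    ]
    print(assign_variant("user-42", ["A", "B"]))
    print(summarize(example_logs))
```

The same `summarize` function works for a backtest: replay past inputs through a new prompt variant, log the results in the same shape, and compare the aggregates against the variant that originally served those requests.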

What you can do with PromptLayer

- Prompt management and collaboration with a CMS-style interface

- No-code prompt editor for quick edits and experimentation

- Versioned prompts: edit, diff, annotate, and roll back changes

- Deploy prompts to prod and dev environments with interactive publishing

- A/B test prompts and compare model performance

- Historical backtests to evaluate new prompts against past data

- Regression testing and automated evals triggered on prompt updates

- Model comparison to see how prompts perform across different models

- One-off bulk prompt pipelines for batch testing on inputs

- Usage monitoring and analytics without needing to jump between tools

- Privacy and security: SOC 2 Type 2 compliant
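
To make the "edit, diff, annotate, and roll back" workflow concrete, here is a minimal sketch of a versioned prompt history using only the Python standard library. It is an assumption-laden illustration, not how PromptLayer stores prompts: the classes `PromptVersion` and `PromptHistory` and their methods are hypothetical names.

```python
import difflib
from dataclasses import dataclass, field


@dataclass
class PromptVersion:
    number: int      # 1-based version number
    template: str    # prompt text for this version
    note: str = ""   # free-form annotation for reviewers


@dataclass
class PromptHistory:
    """Append-only history of one named prompt (hypothetical structure)."""

    name: str
    versions: list[PromptVersion] = field(default_factory=list)

    def publish(self, template: str, note: str = "") -> PromptVersion:
        """Store a new version; earlier versions are never overwritten."""
        version = PromptVersion(len(self.versions) + 1, template, note)
        self.versions.append(version)
        return version

    def latest(self) -> PromptVersion:
        return self.versions[-1]

    def diff(self, old: int, new: int) -> list[str]:
        """Return a unified diff between two version numbers."""
        a = self.versions[old - 1].template.splitlines()
        b = self.versions[new - 1].template.splitlines()
        return list(
            difflib.unified_diff(
                a, b, fromfile=f"v{old}", tofile=f"v{new}", lineterm=""
            )
        )

    def rollback(self, number: int) -> PromptVersion:
        """Roll back by republishing an old template as a new version."""
        target = self.versions[number - 1]
        return self.publish(target.template, note=f"rollback to v{number}")


if __name__ == "__main__":
    history = PromptHistory("greeting")
    history.publish("Hello {name}")
    history.publish("Hi {name}", note="friendlier tone")
    print("\n".join(history.diff(1, 2)))
    history.rollback(1)
    print(history.latest())
```

Modeling rollback as a new version, rather than deleting later ones, keeps the full audit trail intact, which is one common way to support diffing and notes across a prompt's lifetime.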

Why teams choose PromptLayer

- Collaborate with experts and non-technical stakeholders without sacrificing control

- Decouple prompt deployments from engineering releases

- Centralize prompt governance so prompts are not scattered across codebases

- Empower product, marketing, and content teams to edit and test prompts directly

Core Features

- Visual prompt editor and CMS for non-technical collaboration

- Version control for prompts with diffing and notes

- Deploy prompts interactively to prod and dev environments

- A/B testing and metrics comparison (latency, usage, model performance)

- Historical backtests to validate prompts against past data

- Regression tests and one-off batch evals

- Model-agnostic prompt testing across multiple models

- Usage analytics and observability integrated in the dashboard

- Privacy and security backed by SOC 2 Type 2 compliance