Token Counter for OpenAI Models

Open siteResearch & Data Analysis

Introduction

Summary: Count tokens to stay within limits and manage costs effectively in OpenAI models.

Token Counter for OpenAI Models Product Information

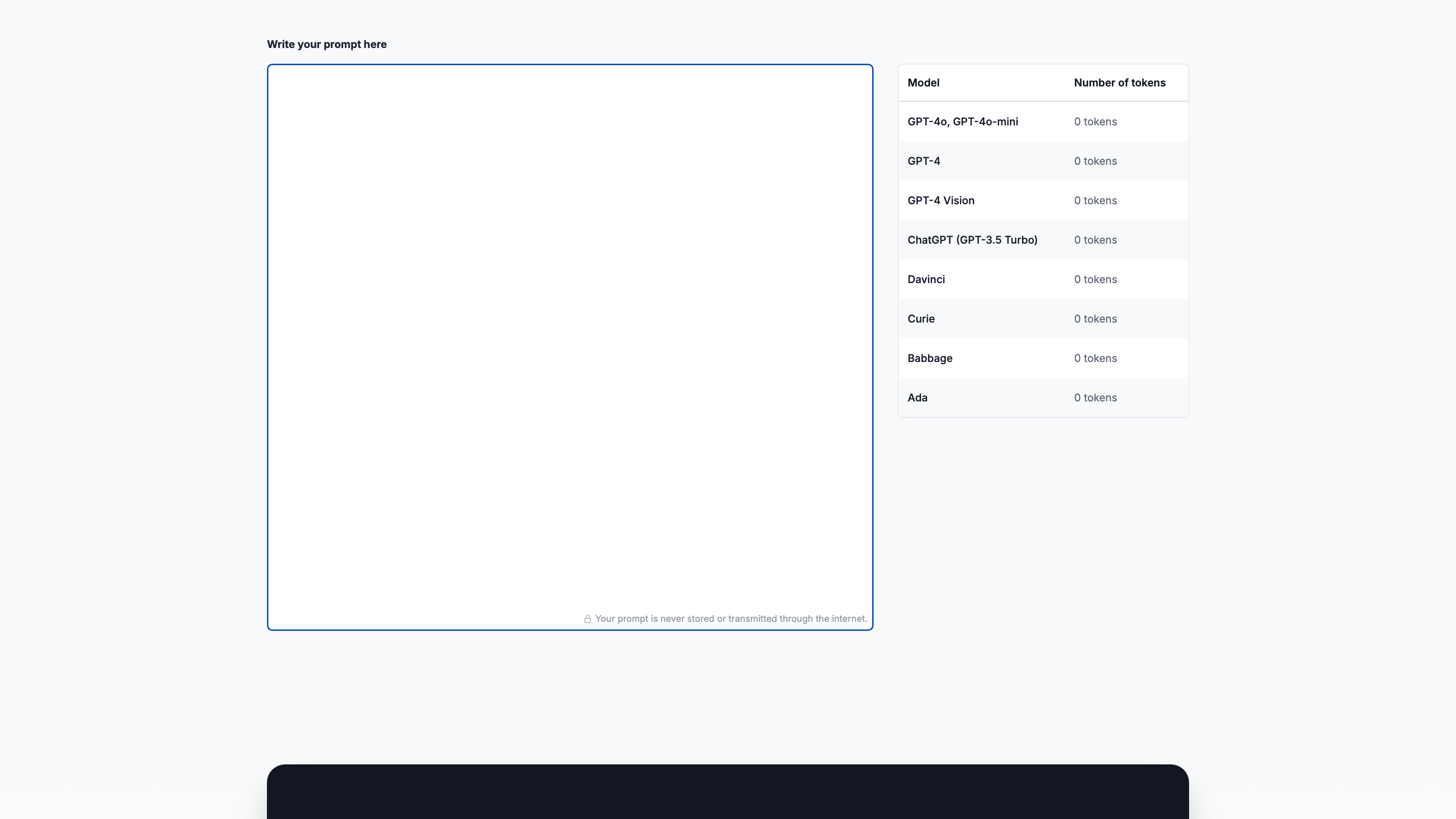

Prompt Token Counter for OpenAI Models is a web-based tool designed to help users count and manage tokens in prompts and model responses when working with OpenAI language models. It emphasizes staying within model token limits, controlling costs, and crafting concise, effective prompts. The tool explains token concepts and provides practical guidance for predicting and adjusting token usage across various OpenAI models (e.g., GPT-4o, GPT-4o-mini, GPT-4, GPT-3.5 Turbo).

How to Use Prompt Token Counter

- Enter your prompt. Paste or type the prompt you intend to send to the model.

- Choose the model. Select the target OpenAI model (e.g., GPT-4o, GPT-4o-mini, GPT-4, GPT-3.5 Turbo).

- Count tokens. View the computed token count for the prompt and estimate the required tokens for the expected response.

- Adjust as needed. Reduce prompt length or restructure content to fit within model limits and cost considerations.

Why Token Counting Matters

- Stay within model limits: Ensures the combined prompt and response do not exceed maximum tokens.

- Cost control: Token usage correlates with pricing; managing tokens helps avoid unnecessary expenses.

- Response management: Anticipates how many tokens the model will need to generate a complete answer.

- Efficient communication: Encourages concise prompts that clearly convey intent.

How Token Counting Works (Key Concepts)

- A token is the smallest unit of text processed by a model; it can be a word, a subword, or a character depending on the tokenizer.

- Models have a maximum token limit per interaction (e.g., GPT-3.5-turbo has a typical 4096-token limit).

- Prompt tokens plus expected response tokens must fit within the model’s limit.

Practical Tips

- Preprocess prompts to estimate token usage before sending to the model.

- Use tokenization libraries or the model’s tokenizer to count tokens accurately.

- Plan for the response by reserving tokens for the model’s output.

- Iteratively refine prompts if they exceed token limits.

What You Can Count On

- Understanding token limits for your chosen model.

- Steps to preprocess, count, and adjust prompts to stay within limits.

- Guidance on balancing prompt length, cost, and response length.

- Explanations of what tokens are and how tokenization affects processing.

Safety and Best Practices

- Use token counting to avoid unnecessary costs and prevent overly long or misinterpreted responses.

- Be mindful of data sensitivity; avoid sending confidential information when testing prompts.

Core Features

- Clear explanation of token concepts and model limits

- Model selection for accurate token estimation across GPT-4o, GPT-4o-mini, GPT-4, and GPT-3.5 Turbo

- Prompt token counting with guidance on response token estimation

- Practical steps to preprocess, count, and optimize prompts

- Tips for cost management and efficient prompting

- Educational content on what tokens are and how they affect processing