Wavify


Introduction

On-device speech AI platform for embedding voice features.

Wavify Product Information

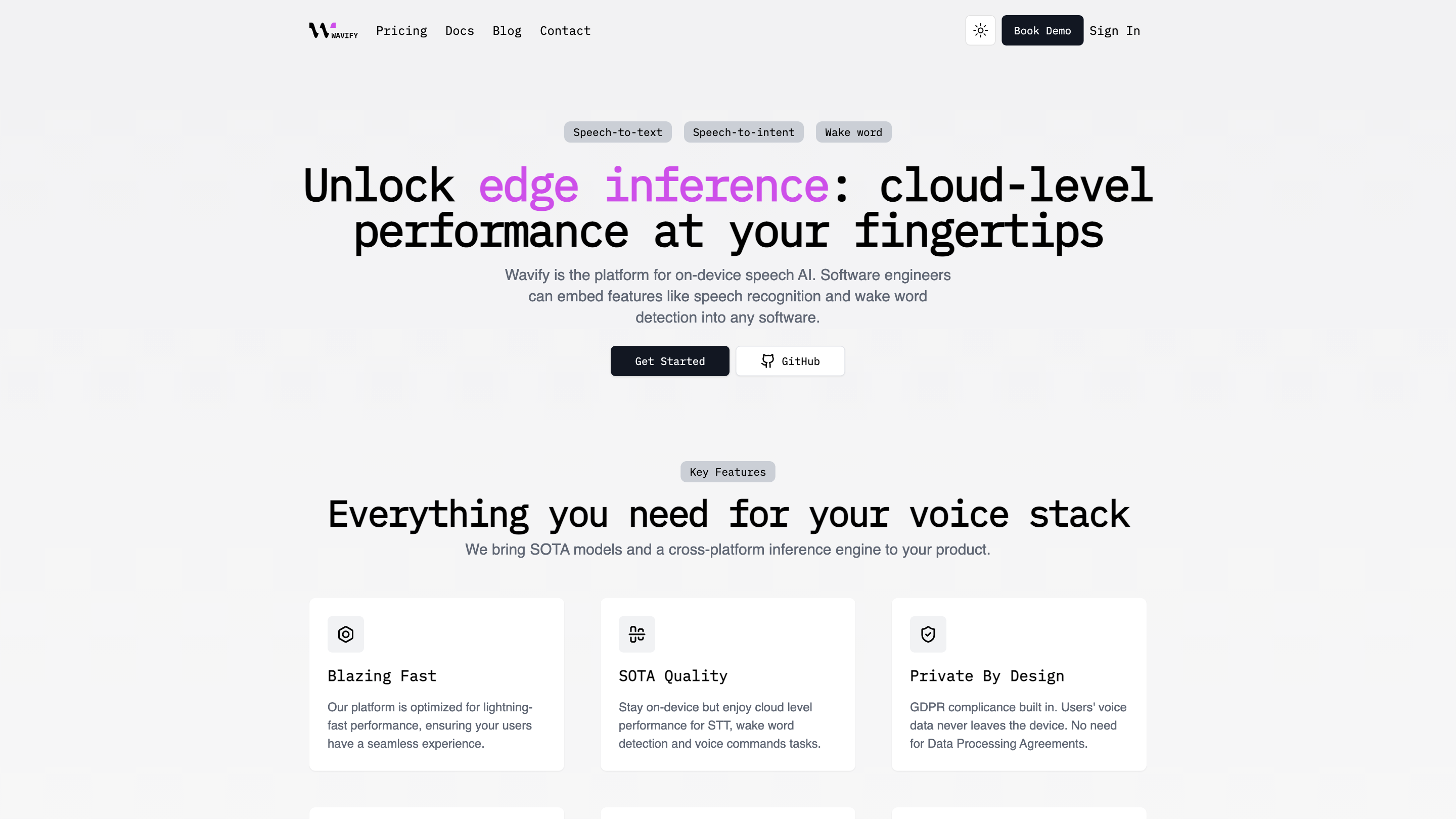

Wavify: On-device Speech AI Platform

Wavify is an on-device speech AI platform that lets software engineers embed speech recognition, wake word detection, and voice commands directly into any application. It emphasizes fast on-device performance, privacy by design, and cross-platform compatibility, so developers can deliver cloud-level speech features without sending user data to the cloud.

Key Features

- On-device inference for speech-to-text (STT), wake word detection, and voice commands

- State-of-the-art (SOTA) quality comparable to cloud services, while keeping data on-device

- Privacy by design: user voice data never leaves the device; GDPR-friendly

- Cross-platform support: Linux, macOS, Windows, iOS, Android, Web, Raspberry Pi, and embedded systems

- Multilingual support: 20+ languages

- Lightweight runtimes suitable for edge devices (example runtimes include Whisper.cpp and Wavify’s own engine)

- Quick integration with minimal code changes

How to Use Wavify

- Choose your integration approach: pick from the provided SDKs and demos that fit your tech stack (Python, Rust, etc.).

- Initialize the SttEngine with your model path and API key (if required by your setup).

- Run STT on audio files or streams: convert speech to text locally on the device.

Example (Python):

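```python
import os

from wavify.stt import SttEngine

# Load the on-device model; the API key is read from the environment.
engine = SttEngine(
    "path/to/your/model",
    os.getenv("WAVIFY_API_KEY"),
)

# Transcribe an audio file locally on the device.
result = engine.stt_from_file("/path/to/your/file")
print(result)
```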

- Extend with wake word and commands as needed for your product, leveraging the same on-device inference pipeline.
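You can wrap the file-based call from the example above for batch jobs. The sketch below is hypothetical. `transcribe_all`, `check_wav`, and the 16 kHz mono 16-bit PCM input requirement are assumptions and are not part of the Wavify SDK. The sketch relies only on `stt_from_file(path)` and assumes that it returns the transcript as a string. It accepts any object with that method, so it can be tested without a model.

```python
import wave
from pathlib import Path
from typing import Dict, Iterable, Protocol


class SupportsFileStt(Protocol):
    """Any object exposing stt_from_file(path), e.g. a wavify.stt.SttEngine."""

    def stt_from_file(self, path: str) -> str:  # return type assumed to be str
        ...


# ASSUMPTION: 16 kHz mono 16-bit PCM is a common input format for on-device
# STT models; check your model's documentation for its actual requirements.
EXPECTED_RATE = 16_000


def check_wav(path: Path, expected_rate: int = EXPECTED_RATE) -> None:
    """Raise ValueError if the WAV file is not mono 16-bit PCM at expected_rate."""
    with wave.open(str(path), "rb") as wav:
        channels = wav.getnchannels()
        if channels != 1:
            raise ValueError(f"{path}: expected mono, got {channels} channels")
        if wav.getsampwidth() != 2:
            raise ValueError(f"{path}: expected 16-bit samples")
        rate = wav.getframerate()
        if rate != expected_rate:
            raise ValueError(f"{path}: expected {expected_rate} Hz, got {rate} Hz")


def transcribe_all(engine: SupportsFileStt, paths: Iterable[Path]) -> Dict[str, str]:
    """Validate each file, then transcribe it locally; returns {filename: text}."""
    results: Dict[str, str] = {}
    for path in paths:
        check_wav(path)  # fail early, before the model sees a malformed file
        results[path.name] = engine.stt_from_file(str(path))
    return results
```

With the SDK installed, pass in the `SttEngine` instance from the example, e.g. `transcribe_all(engine, sorted(Path("recordings").glob("*.wav")))`. Here `recordings` is a placeholder directory.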

Supported Use Cases

- Voice as a user interface across many industries

- Healthcare: documentation, transcription, AI-assisted therapy

- Automotive: hands-free vehicle control, real-time navigation, in-car entertainment

- Legal: automated case documentation and transcription of court proceedings and meetings

- Consumer electronics: smart home, AI companions, voice control in apps and games

- Customer support: transcriptions for accurate record-keeping and faster issue resolution

- Education: interactive learning experiences with voice interaction

SDKs and Developer Experience

- Cross-language support with ready-to-use SDKs

- Developer experience designed for fast integration and iteration

- Clear examples and demos to accelerate adoption

How It Works

- Runs on-device inference for STT, wake word detection, and voice commands

- Uses lightweight, efficient models suitable for edge devices

- Privacy-centric: no data leaves the user’s device
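STT, wake word detection, and voice commands run in one local pipeline. The control flow of gating commands behind a wake phrase can be sketched at the transcript level. `CommandRouter` is a hypothetical helper, not a Wavify API, and it matches text rather than audio. Dedicated wake word models typically operate on the audio stream directly, so this sketch only illustrates the dispatch logic that sits after recognition.

```python
from typing import Callable, Dict, Iterable, List, Optional


class CommandRouter:
    """Hypothetical helper: gate voice commands behind a wake phrase.

    Operates on transcripts (strings) produced by an on-device STT engine.
    """

    def __init__(self, wake_phrase: str, commands: Dict[str, Callable[[], str]]):
        self.wake_phrase = self._normalize(wake_phrase)
        # Normalize command phrases once so matching ignores case and punctuation.
        self.commands = {self._normalize(k): v for k, v in commands.items()}
        self.awake = False

    @staticmethod
    def _normalize(text: str) -> str:
        # Lowercase, drop punctuation, and collapse whitespace.
        kept = "".join(c for c in text.lower() if c.isalnum() or c.isspace())
        return " ".join(kept.split())

    def handle(self, transcript: str) -> Optional[str]:
        """Return the triggered command's result, or None if nothing fired."""
        text = self._normalize(transcript)
        if not self.awake:
            # Match the wake phrase as whole words at the start of the utterance.
            if text == self.wake_phrase or text.startswith(self.wake_phrase + " "):
                self.awake = True
                # Allow "hey device lights on" in a single utterance.
                text = text[len(self.wake_phrase):].strip()
                if not text:
                    return None  # wait for the command in the next utterance
            else:
                return None  # ignore speech until the wake phrase is heard
        action = self.commands.get(text)
        self.awake = False  # one command per wake event
        return action() if action else None

    def run(self, transcripts: Iterable[str]) -> List[str]:
        """Feed transcripts in order and collect the results of fired commands."""
        results: List[str] = []
        for transcript in transcripts:
            result = self.handle(transcript)
            if result is not None:
                results.append(result)
        return results
```

Exact matching after normalization keeps the sketch predictable. A real product might add fuzzy matching or intent classification on top.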

Safety and Privacy Considerations

- Data never leaves the device, so basic on-device usage does not require a Data Processing Agreement (DPA)

- Ensure compliance with local privacy laws when deploying voice-enabled features

Core Features

- On-device STT, wake word detection, and voice commands

- Lightning-fast performance and low memory footprint on edge devices

- Cloud-level quality while preserving user privacy

- Broad cross-platform support (Linux, macOS, Windows, iOS, Android, Web, Raspberry Pi, embedded)

- 20+ languages supported

- Simple integration with minimal code changes